I had a briefing with Bonitasoft CEO Miguel Valdes earlier this week to hear more about their 2021.1 release, announced today. Note that they have changed their version numbers to align with the year and release number relative to that year, something I’ve seen starting to happen with a number of vendors.

The most important part of this release, in my opinion, is their shift in what’s open source versus commercial. Like most open source vendors, Bonitasoft builds on an open source core engine and has a number of other open source capabilities, but creates proprietary commercial components that they license to paying customers in a commercial version of the system. In many cases, the purely open source version is great for technical developers who are using their own development tools, e.g., for data modeling or UI development, but it’s a non-starter for business developers since you can’t build and deploy a complete application using just the open source platform. Bonitasoft is shaking up this concept by taking everything to do with development (page/form/layout UI design, data object models, application descriptors, process diagrams, organization models, system connectors, and Git integration) and adding it to the open source version. In other words, instead of having one version of their Studio development environment for open source and another for commercial, everything is unified, and the open source version includes everything necessary to develop and deploy process automation applications. Having a unified development environment also makes it easy to move a Bonitasoft application from the open source to the commercial version (and the reverse) without project redevelopment, allowing customers to start with one version and shift to the other as project requirements change.

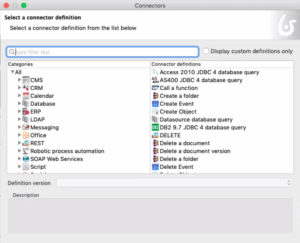

This is a big deal for semi-technical business developers, who can now use Bonita Studio to create complete applications on the same underlying platform as technical developers. Bonitasoft has removed anything that requires coding from Studio, recognizing that business developers don’t want to write code, and technical developers don’t use the visual development environment anyway. [As a Java developer at one of my enterprise clients once said when presented with a visual development environment, “yeah, it looks nice, but I’ll never use it”.] That doesn’t mean that these two types of developers don’t collaborate, however: technical developers are critical for the development of connectors, for example, which allow an application to connect to an external system. Once connectors are created to link to, for example, a legacy system, the business developers can consume those connectors within their applications. Bonitasoft provides connector archetypes that allow technical developers to create their own connectors, but what is missing is a way to facilitate collaboration between business and technical developers for specifying the functionality of a connector: for example, allowing the business developer to create a placeholder in an application/process and specify the desired behavior, which would then be passed on to the technical developer.

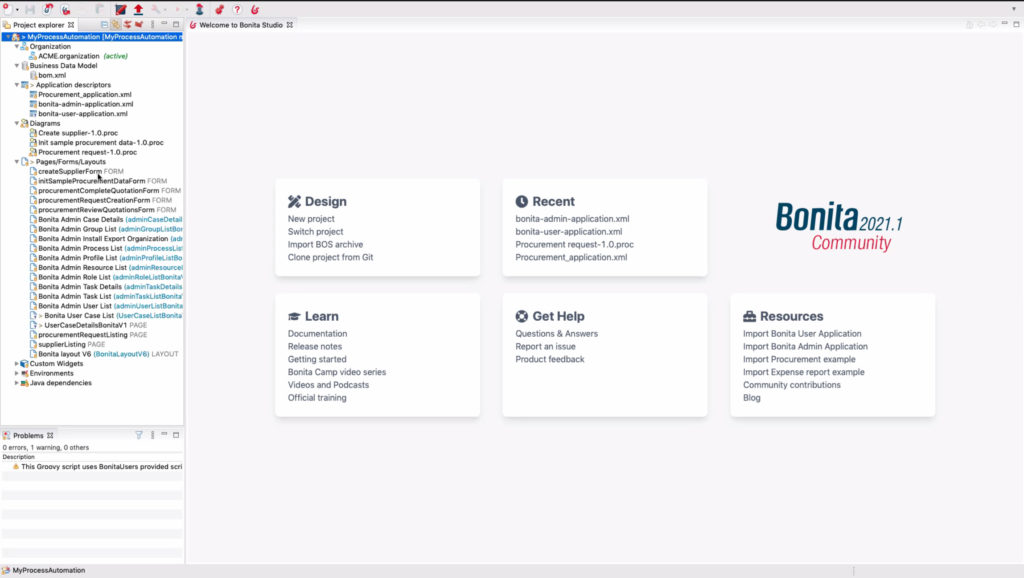

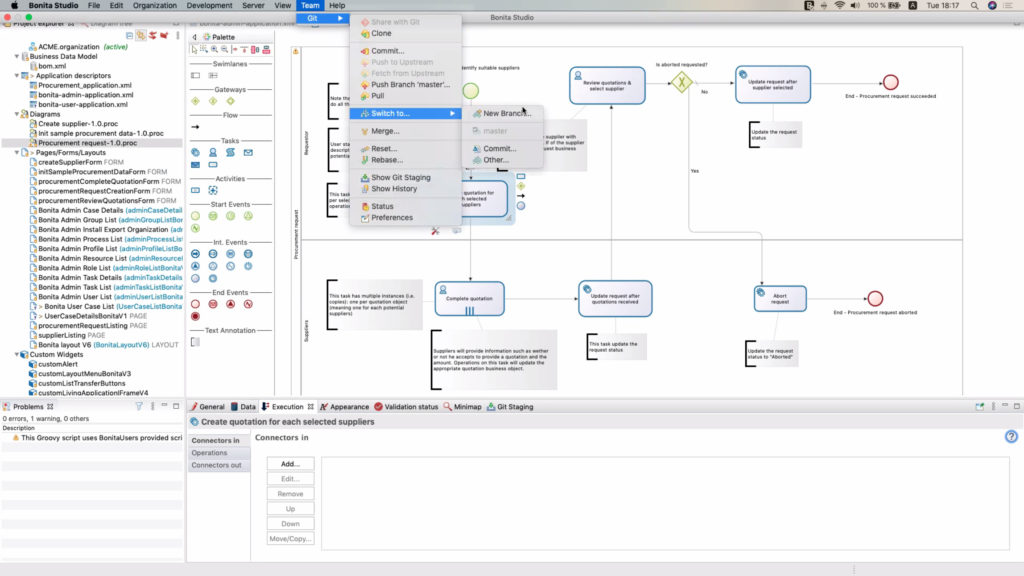

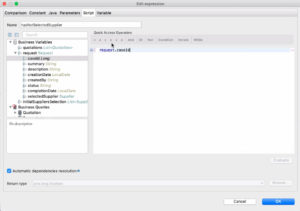

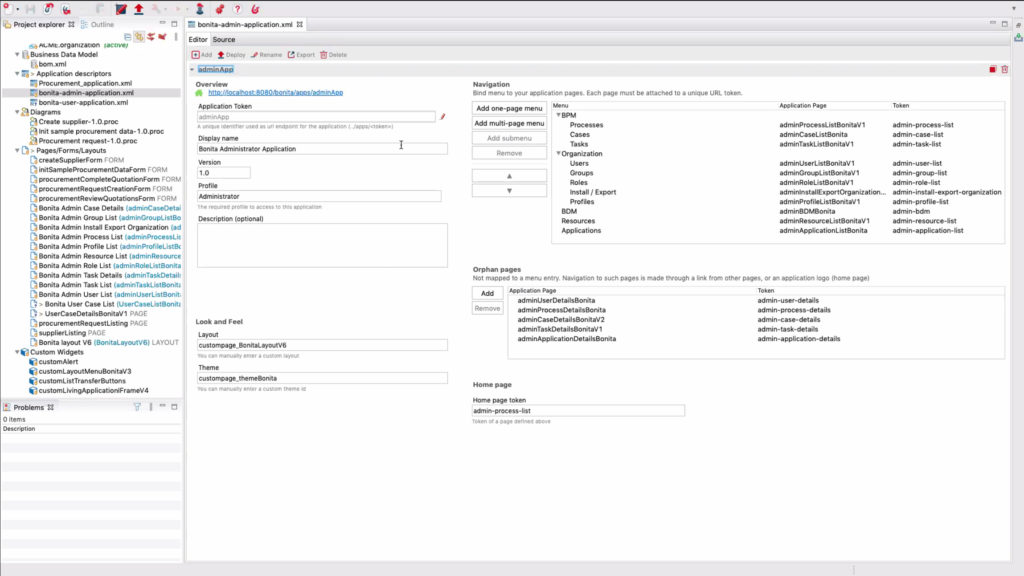

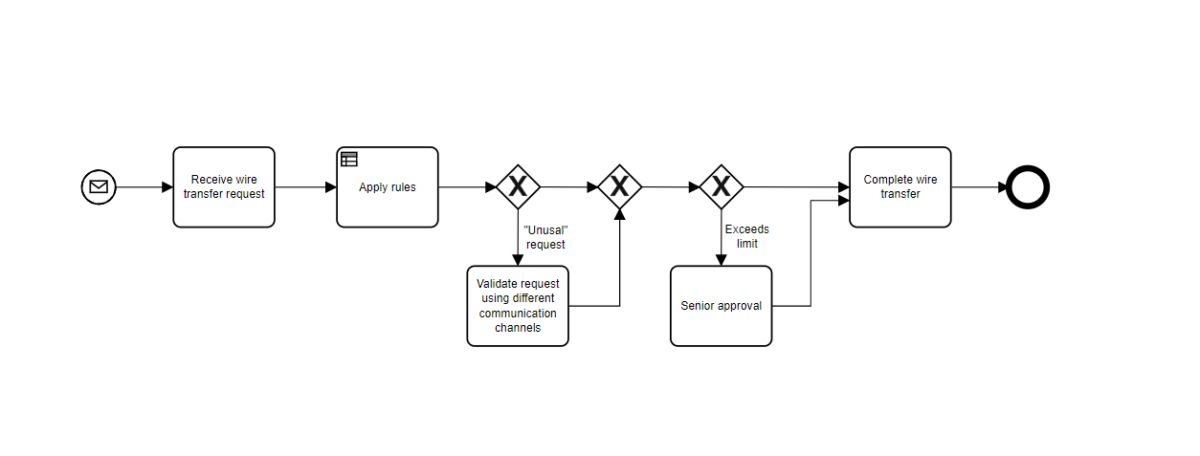

Miguel took me through a demo of Bonita Studio using only the open source version, showing their new starting point of MyProcessAutomation that includes sections for Organization, Business Data Model, Applications, Diagrams, and Pages/Forms/Layouts. There are also separate sections for Custom Widgets and Environments: the latter lets you define separate environments for development, testing, production, etc., and was previously available only in the commercial edition. It’s a very unified look and feel, and the components seem to integrate well: for example, the expression editor allows direct access to business object variables in a form that will be understandable to business developers, and they are looking at ways to simplify this further.

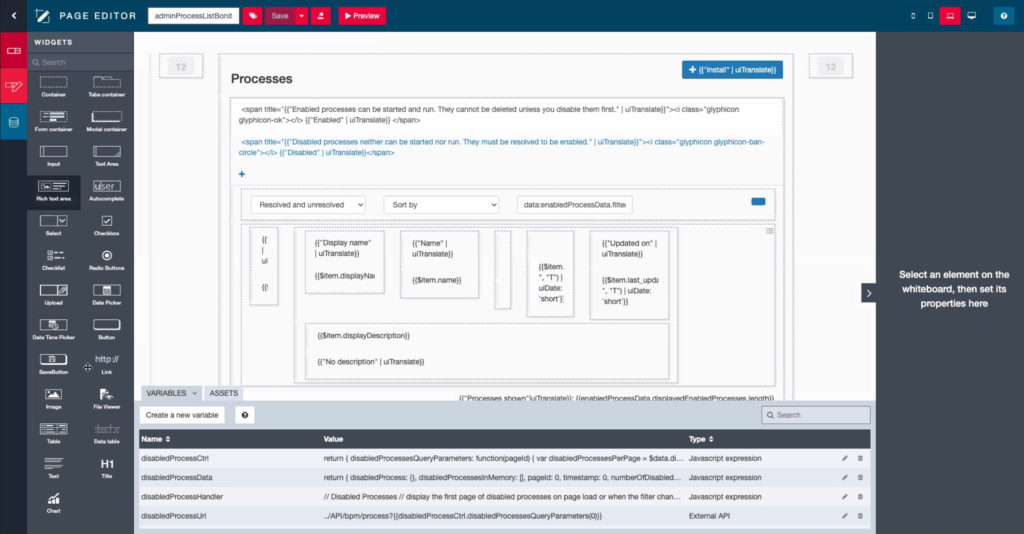

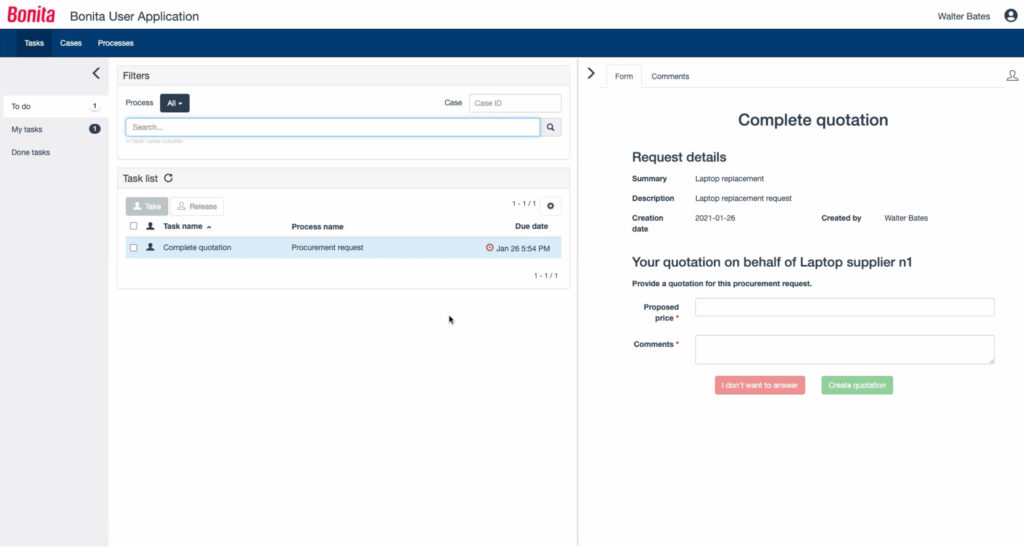

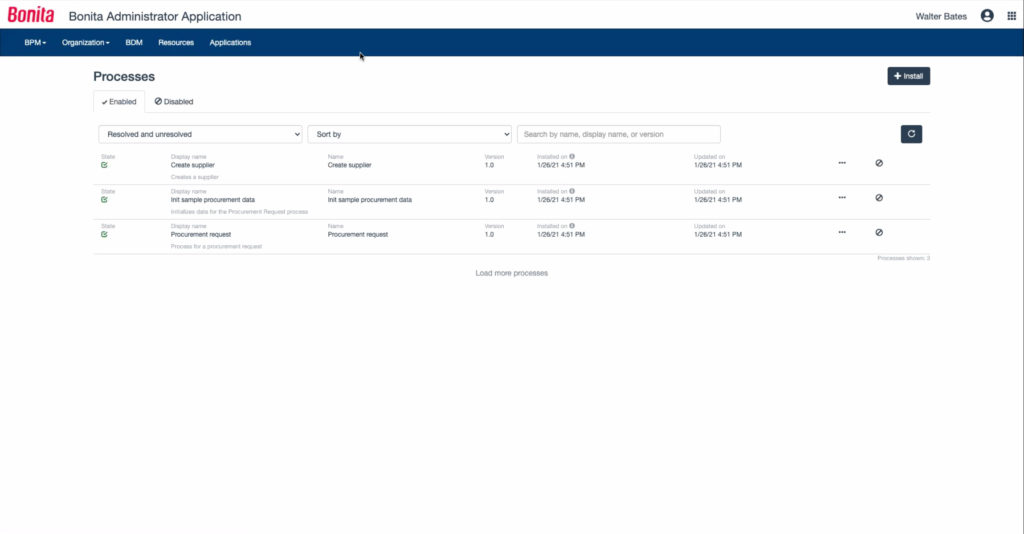

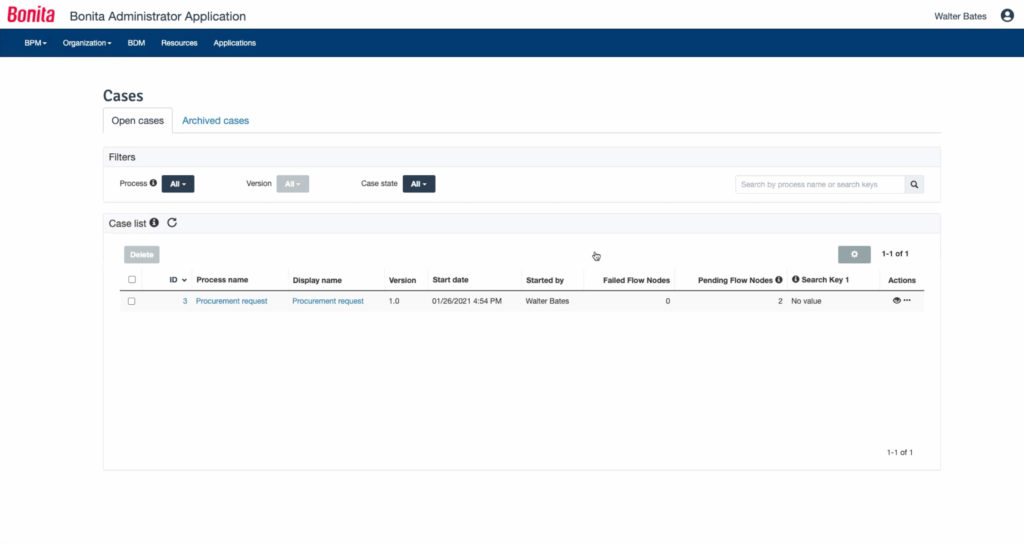

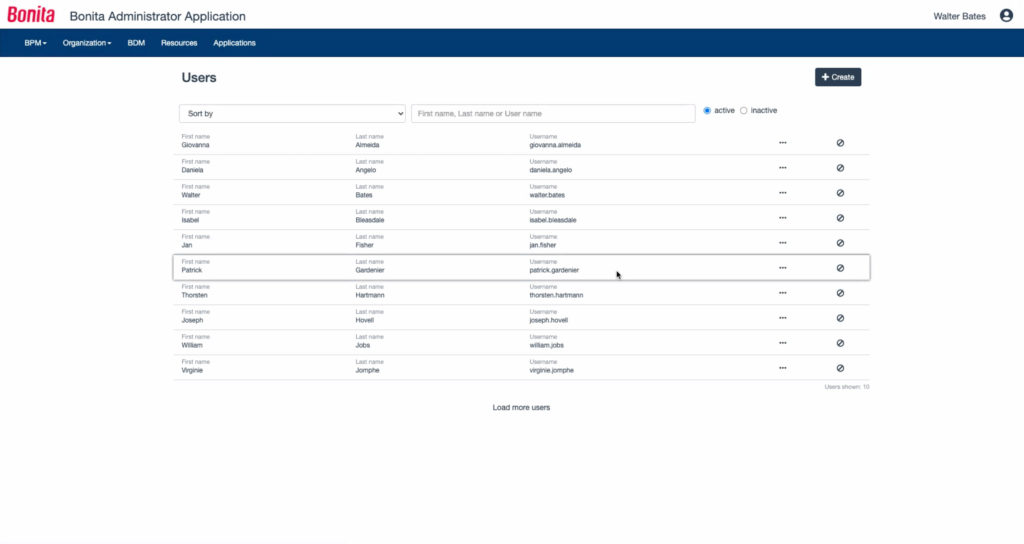

They are moving their UI away from Angular to Web Components, and are even using their own UI tools to create their new user and administrator portals. The previous Bonita Portal is now being replaced by two applications: one is a user portal that provides case and task list functionality, while the other is for administrators to monitor and repair any process instance problems. These two applications can be freely modified by customers to personalize and extend them within their own environment.

There are definitely things remaining in their commercial edition, with a focus on security (single sign-on), scalability (clustering, elasticity), monitoring, and automated continuous deployment. There are a few maintenance tools that are being moved from the open source to commercial version, and maintenance releases (bug fixes between releases) will be limited to commercial customers. They also have a new subscription model that helps with self-managed elastic deployments (e.g., Amazon Cloud); provides update and migration services for on-premise customers; and includes platform audits for best practices, performance tuning and code review. Along with this are some new packaged professional services offerings: Fast Track for first implementation, on-premise to Bonita Cloud upgrade, and on-premise upgrades for customers not on the new subscription model.

The last thing that Miguel mentioned was an upcoming open source project that they are launching related to … wait, that might not have been for public consumption yet. Suffice it to say that Bonitasoft is disrupting the open source process automation space to be more inclusive of non-technical developers, and we’ll be seeing more from them along these lines in the near future.