TIBCO took a bit of a hiatus from their conference while the company was being acquired, but they are back in force this week in Las Vegas with TIBCO NOW 2016. The theme is “Destination: Digital”, with a focus on innovation rather than just optimization, and the 2,000 attendees span TIBCO’s entire product portfolio. You can catch the live stream here, which covers at least the general sessions each morning.

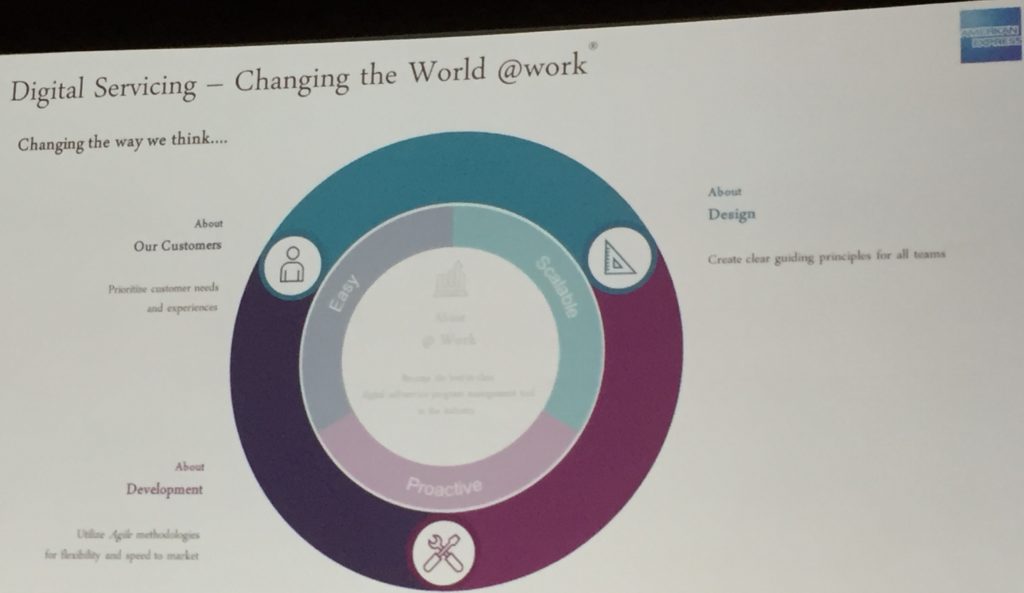

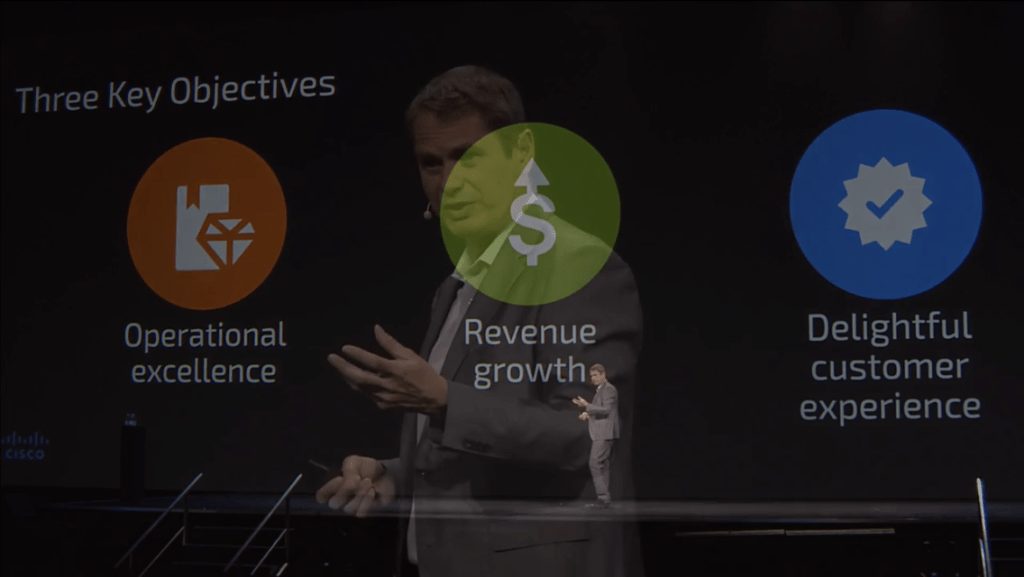

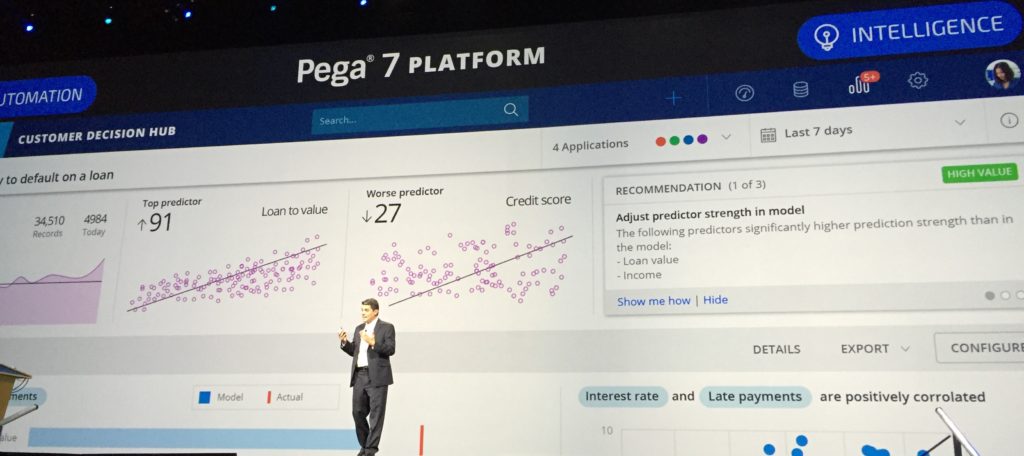

CMO Thomas Been opened the day by positioning TIBCO as a platform for digital transformation, then was joined by CEO Murray Rode. Rode talked about TIBCO’s own transformation over the last 18 months since the last conference, and how their customers are using TIBCO technology for real-time operations, analyzing and predicting the consumers’ needs, and enhancing the customer experience in this 4th industrial revolution that we’re experiencing. He used three examples to illustrate the scope of digital business transformation:

- A banking customer applies and is approved for a loan through the bank’s mobile app, without documents and signatures

- A consumer’s desires are predicted based on their behavior, and they are offered the right product at the right time

- A customer’s order (or other interaction with a business) is followed in real-time to enhance their experience

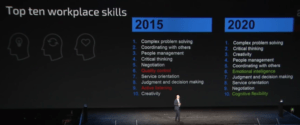

Although TIBCO has always been about real-time, he pointed out that real-time has become the new norm: consumers don’t want to wait for information or responses, and billions of interconnected smart devices are generating events all the time. The use of TIBCO’s software is shifting from the systems of record (although that is still their base of strength) to the systems of engagement: from the core to the edge. That means not only different types of technologies, but also different development and deployment methodologies. Their goals are to interconnect everything and to augment intelligence; these also seem to represent the two main divisions of their product line.

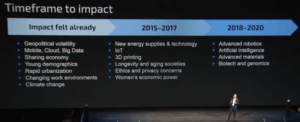

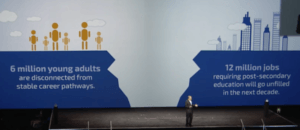

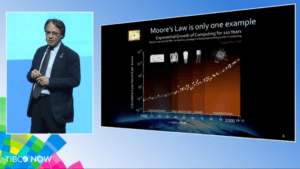

That set the stage for Ray Kurzweil, the author and futurist, who spoke about the revolution in artificial intelligence-driven innovation supported by the exponential growth in computing capabilities. The drastically dropping price-performance ratio of computing is what is enabling innovation: in some cases, innovation doesn’t occur on a broad scale until it becomes cost-effective. He had lots of great examples of how innovation has occurred and will continue to evolve, especially around human biology, and finished up with Thomas Been joining him on stage for a conversation about Kurzweil’s research as well as the opportunities facing TIBCO’s customers. I haven’t included most of the detail here; check the live stream for a replay.

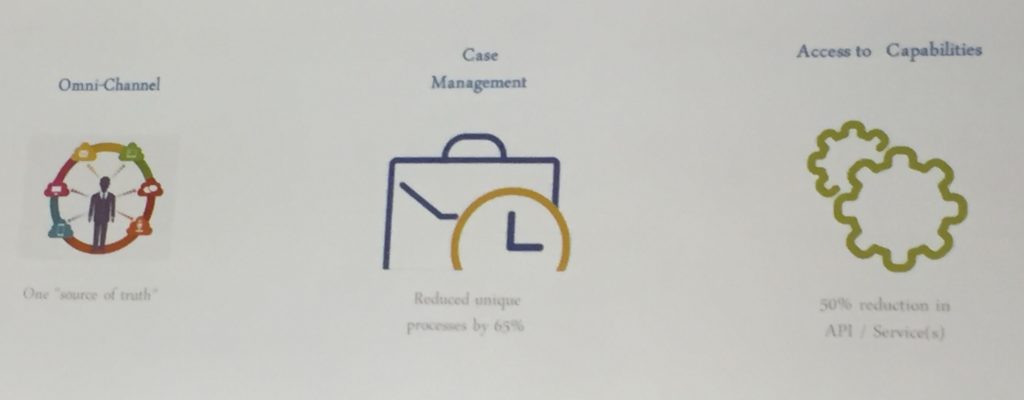

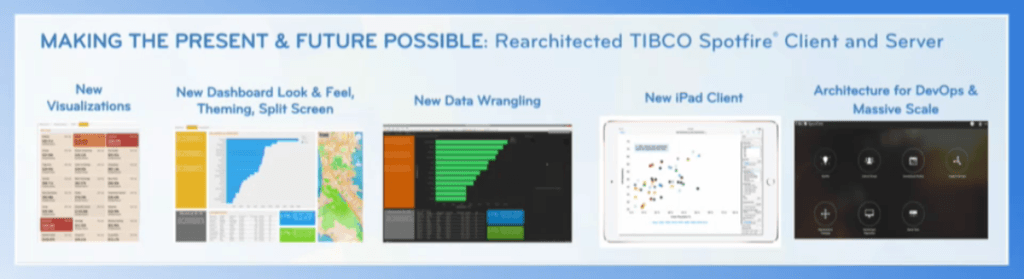

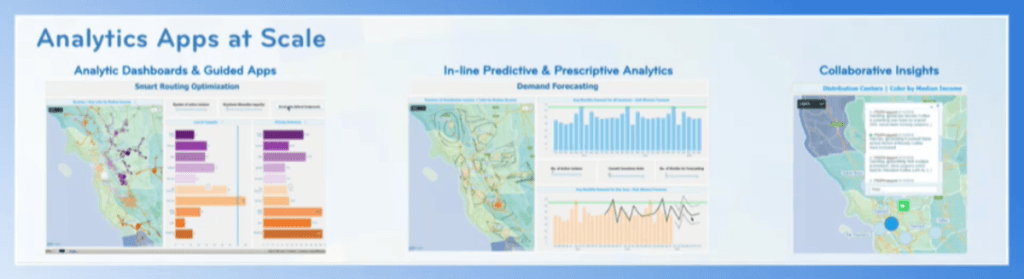

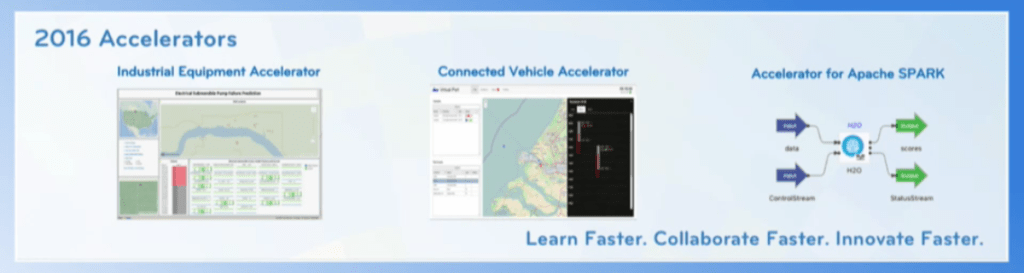

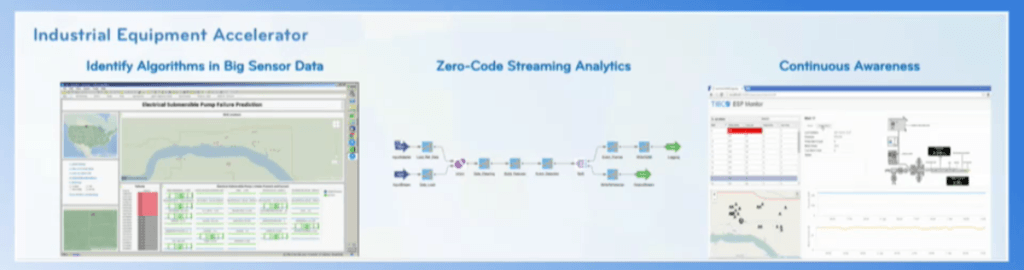

Matt Quinn, TIBCO’s CTO, took over with a product overview. In this keynote, he looked at the “interconnect everything” products, leaving the “augment intelligence” side of the portfolio for tomorrow’s keynote. They’ve set some core principles for all product development: cloud first (including on-premise and hybrid, as well as public cloud), ease of use (persona-based UX, industry solutions, and a support community), and industrialization (cross-product integration, more open DevOps, and IoT). He expanded the idea of “interconnect everything” to “interconnect everything, everywhere”, and brought in VP of engineering Randy Menon to talk about their cloud platform strategy, specifically as it relates to integration.

Echoing Quinn, Menon talked about how TIBCO has always built great products for the core, or “products for the CIO” as he put it, but that they are now looking at addressing different audiences. He went through some of the new functionality in their interconnection portfolio, including enhancements to ActiveMatrix BusinessWorks, ActiveMatrix BPM (now including case management and more flexible UI building), TIBCO MDM, and FTL messaging. He also introduced and demonstrated BusinessWorks Container Edition for cloud-native integration, which supports a number of standard cloud container services; TIBCO Cloud Integration, which enables iPaaS use cases in a point-and-click environment; and Microflows using Node.js.

He talked about their Mashery acquisition and what’s coming up in the API management product: real-time APIs, richer visual analytics leveraging Spotfire, and a cloud-native hybrid gateway. Combined with the other cloud products, this provides an end-to-end environment for creating and deploying cloud APIs. But their technology advances aren’t just for developers: they’re also for “digital citizens” who want to integrate and automate a variety of cloud tools using Simplr, which allows for simple workflows and forms. Nimbus Maps, a slimmed-down version of Nimbus, is also aimed at business people who want to do some of their own process documentation.

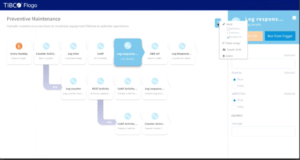

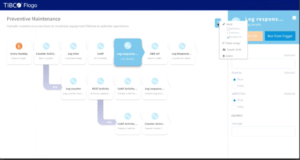

Rajeev Kozhikkattuthodi, director of product marketing, came up to announce Project Flogo, a lightweight IoT integration product that they intend to make open source. It uses a Golang-based engine to create simple workflows that integrate with a variety of devices, and includes a design bot in Slack and an interactive debugger; the runtime is 20 to 50 times smaller than those of similar development environments. It’s not released yet, but he showed a brief demo and it’s apparently on the show floor.

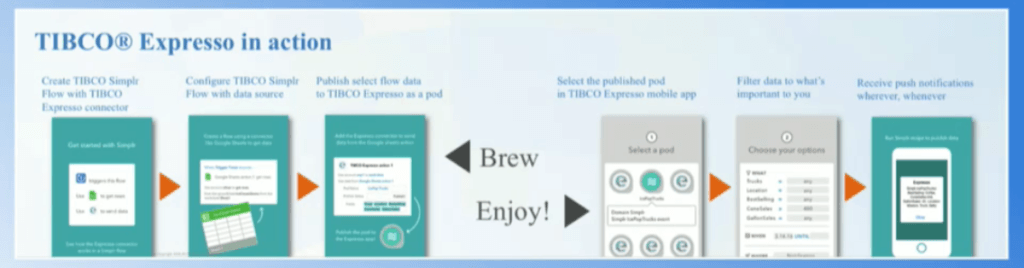

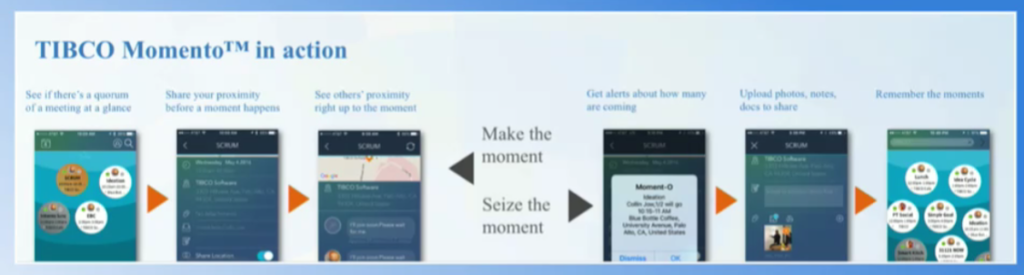

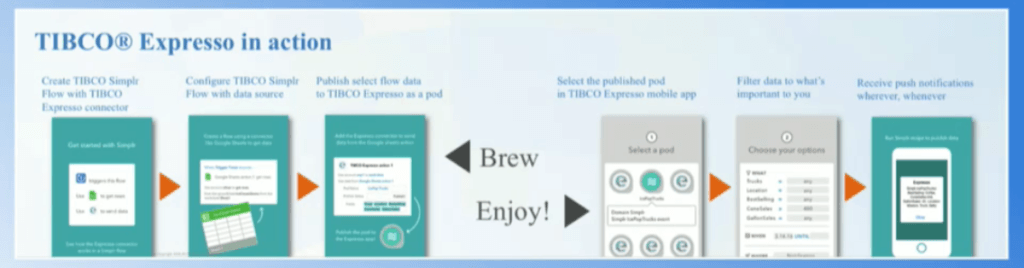

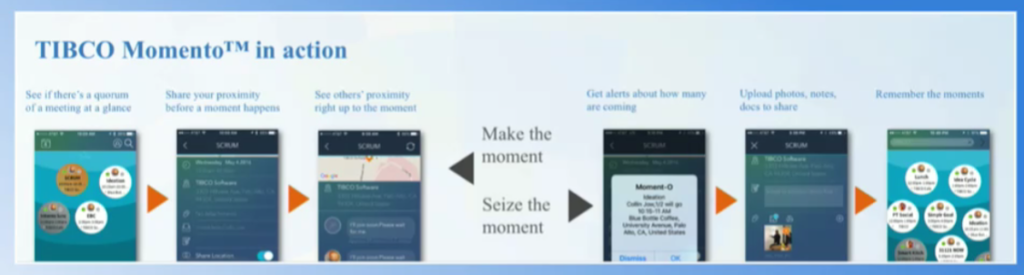

Quinn returned to mention a few other products (TIBCO Expresso, Momento and their IoT innovations) before turning things over to Raj Verma, EVP of worldwide sales, to talk about their customers’ journey during the purchasing process. With 10,000+ customers and $1B in revenue, TIBCO is big but has room to grow, and a better experience during the purchase, installation and scaling of TIBCO products would help with that. They are starting to roll out some of this, which includes much more self-service for product information and downloadable trials, plus enhancements to the TIBCO community to include more training materials and support; standardized pricing for product suites; and online purchasing. Although there is still a significant field sales force to help you along, it’s possible to do much more directly, and they’re enhancing their partner channel (which Verma admitted had some significant problems in the past) if you already have a trusted service provider. It’s a much more customer-focused approach to sales and implementation, and one that was certainly required to make them more competitive.

A marathon 3-hour general session, with a lot of good content. I’m looking forward to the rest of the conference.

I’ll be speaking on a panel this afternoon on the topic of digital business; drop by and say hi if you’re at the conference.