Hacker and security consultant Kevin Mitnick gave today’s opening keynote at DST’s ADVANCE 2016 conference. Mitnick became famous for hacking into a lot of places where he shouldn’t have been, starting as a phone-phreaking teenager and eventually spending some time behind bars for his efforts; these days, he hacks for good, paid by companies to penetrate their security and identify the weaknesses. A lot of his attacks used social engineering in addition to technical exploits, and that was a key focus of his talk today. He started with the story of how Stanley Rifkin defrauded the bank where he worked of $10.2M by conning the necessary passwords and codes out of employees.

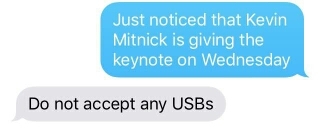

Hacking into systems using social engineering is often undetectable until it’s too late, because the hacker is getting in using valid credentials. People are strangely willing to give up their passwords and other security information to complete strangers with a good story, or unintentionally expose confidential information on peer-to-peer networks, or even throw out corporate paperwork without shredding it. Not surprisingly, Mitnick’s company has a 100% success rate in hacking into systems when it is permitted to use social engineering in addition to technical hacks; the combination of internal information and technical vulnerabilities is deadly. He walked us through how this could be done by looking just at metadata about a company, its users and their computers in order to build a target list and a likely attack vector. He also discussed hacks that can be done using a USB stick, such as installing a rootkit or keylogger, reminding me of a message exchange that I had a couple of days ago with a security-conscious friend:

Mitnick demonstrated how to create a malicious wifi hotspot using a WiFi Pineapple to hijack a connection and capture information such as login credentials, or to trigger an update prompt (such as one for Adobe Flash Player) that actually installs malware instead of a real update, giving the attacker complete access to the computer. He pointed out that you can avoid these types of attacks by using a VPN every time you connect to a non-trusted wifi hotspot.

He demonstrated an access (HID) card reader that can read a card from three feet away, capturing the card ID and site ID so that they can be played back to gain physical access to a building as if he had the original card. Even high-security HID cards can be read with a newer device that they’ve created.

He described how phishing attacks can be used in conjunction with cloned IVR systems and man-in-the-middle attacks, where an unsuspecting consumer calls what they think is their credit card company’s number, but that call is routed via a malicious system that tracks any information entered on the keypad, such as credit card number and zip code.

Next, he showed the impact of opening a PDF with a malicious payload, where an Acrobat vulnerability can be exploited to install malware on your computer. Java applets can be used in the same type of attack, making you think that the applet is signed by a trusted source.

Using an audience volunteer, he showed how online tracing sites can be used to search for a person, retrieving their SSN, date of birth, address, phone numbers and mother’s maiden name: more than enough information to be able to call in to any call center and impersonate that person.

Although he demonstrated a lot of technical exploits that are possible, the message was that many of these can be avoided by educating people, and testing them on their compliance with the procedures necessary to thwart social engineering attacks. He referred to this as the “human firewall”, and had a lot of good advice on how to strengthen it, such as advising people to use Google Docs to open untrusted attachments, and using technology to keep information away from internal people who don’t need to see it.

Lots of great — and scary — demos of ways that you can be hacked.

This is the last day for ADVANCE 2016; I might make it to a couple of sessions later today, then we have a private concert with Heart tonight.