I’m back at DST’s annual AWD ADVANCE user conference, where I’ll be speaking this afternoon on microservices and component architectures. First, however, I’m sitting in on the opening keynote, where John Vaughn kicked things off and then handed over to Steve Hooley for a market overview. Hooley pointed out that we’re now in a low-growth environment with uncertain markets, which makes cash conservation and business efficiency necessary survival mechanisms. Since most of DST’s AWD customers are financial services companies, he talked specifically about the disruption coming to that industry, and how incumbent companies have to drive down costs to be positioned to compete in the new landscape. Only a few minutes into his talk, Hooley mentioned blockchain, and how decentralized trust and transactions have the potential to turn financial services on its ear: the disruptions are technological as well as cultural.

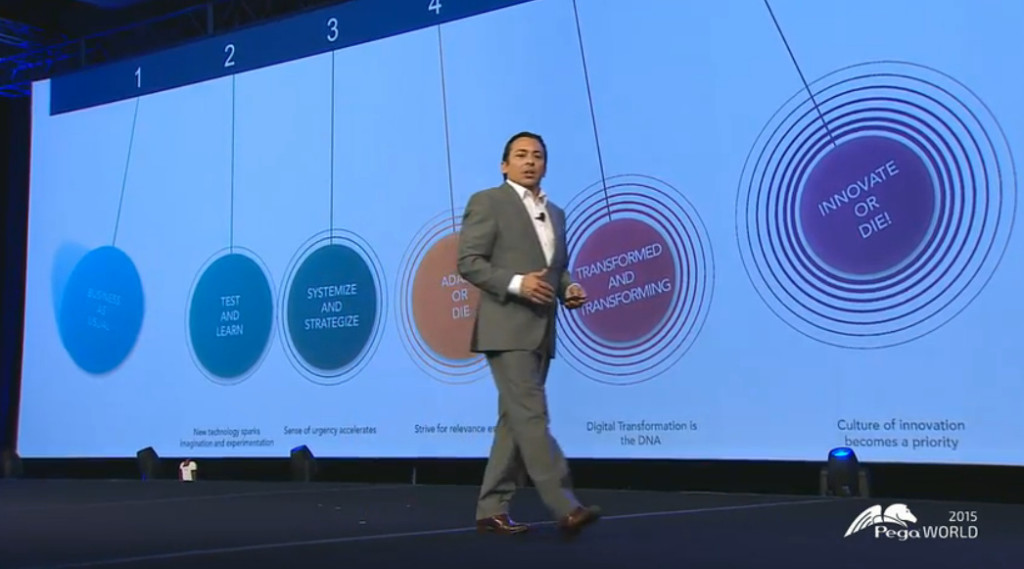

He turned things over to the main keynote guest speaker, Peter Sheahan, author of several business innovation books and head of Karrikins Group. Sheahan talked about finding opportunity in disruption rather than fighting it, and presented four strategies for turning the challenge of disruption into opportunity: move towards the disruption; focus on higher order opportunities; question assumptions; and partner like you mean it. All of these depend on looking beyond the status quo to identify where disruption is happening and recognize the opportunities it creates, rather than just doing what you’re doing now, only better and faster. He walked through some good case studies, such as Burberry, where the physical stores’ biggest competition is the company’s own online shopping site, forcing them to create unique in-store experiences. His focus was on how the convergence of a number of disruptive forces can result in a cornucopia of opportunities. It’s necessary to look at the higher order opportunities, orienting around outcomes rather than processes, and not spend too much time optimizing lower-level activities without considering how the entire business model could be disrupted.

A dynamic and inspiring talk to kick off the conference. Not sure I’ll be attending many more sessions before my own presentation this afternoon since I’m doing some last-minute preparations, although there are some pretty interesting ones tempting me.