Collaborative decisions: coordinating automated and human decision-making. Alan Fish, FICO

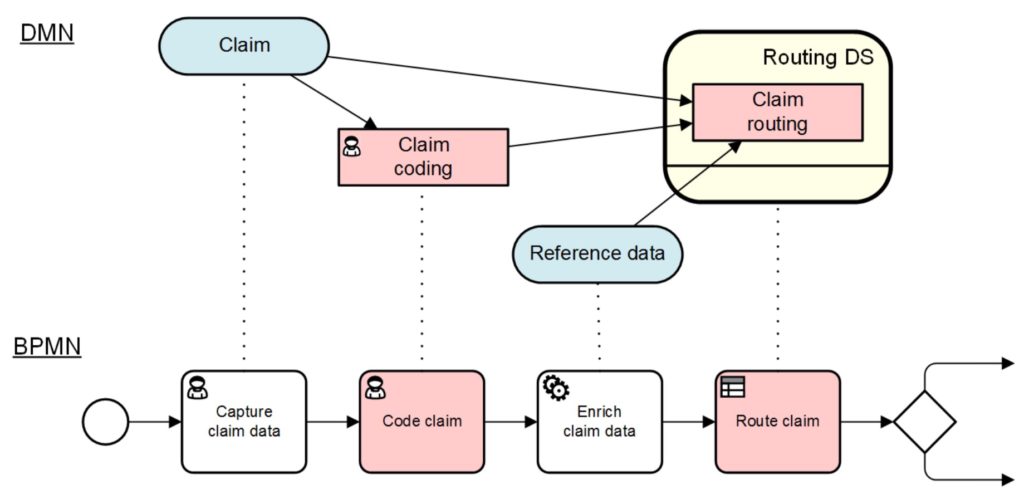

Alan Fish presented on the coordination of decisions between automation, individuals and groups. He argued that DMN alone isn’t enough to model these interactions, since it can’t represent certain characteristics. For example, partitioning decisions over time is best done with a combination of BPMN and DMN, where temporal dependencies can be represented, while combining CMMN and DMN can represent the partitioning of decisions between decision-makers.

He also looked at how to represent the partition between decisions and meta-decisions, which is not currently covered in DMN, where meta-decisions may be an analytical human activity that then determines some of the rules around how decisions are made. He defines an organization as a network of decision-making entities passing information to each other, with the minimum requirement for success based on having models of processes, case management, decisions and data. The OMG “Triple Crown” of DMN, BPMN and CMMN figures significantly in his ideas on a certain level of organizational modeling, and in the success of the organizations that embrace them as part of their overall modeling and improvement efforts.

He sees radical process reengineering as a risky operation, and posits that it is better to do process reengineering once, then constantly update decision models to adapt to changing conditions. An interesting discussion on organizational models and how decision management fits into larger representations of organizations. Also some good follow-on Q&A about whether to consider modeling state in decision models, or leaving that to the process and case models; and about the value of modeling human decisions along with automated ones.

Making the Right Decision at the Right Time: Introducing Temporal Reasoning to DMN. Denis Gagné, Trisotech

Denis Gagné covered the concepts of temporal reasoning in DMN, including a new proposal to the DMN RTF for adding temporal reasoning concepts. Temporal logic is “any system of rules and symbolism for representing, and reasoning about, propositions qualified in terms of time”, that is, representing events in terms of whether they happened sequentially or concurrently, or at what time a particular event occurred.

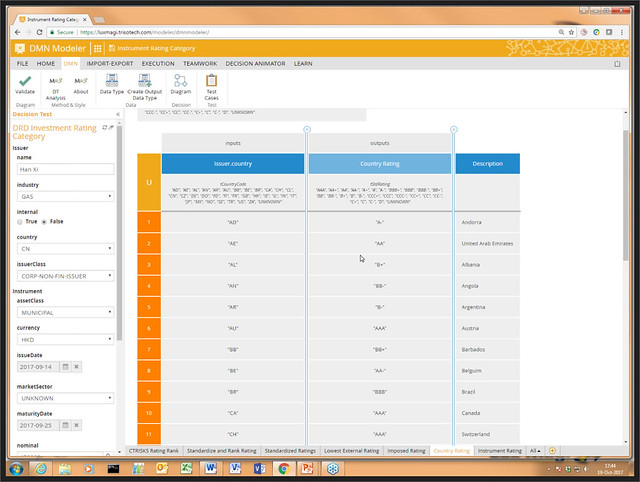

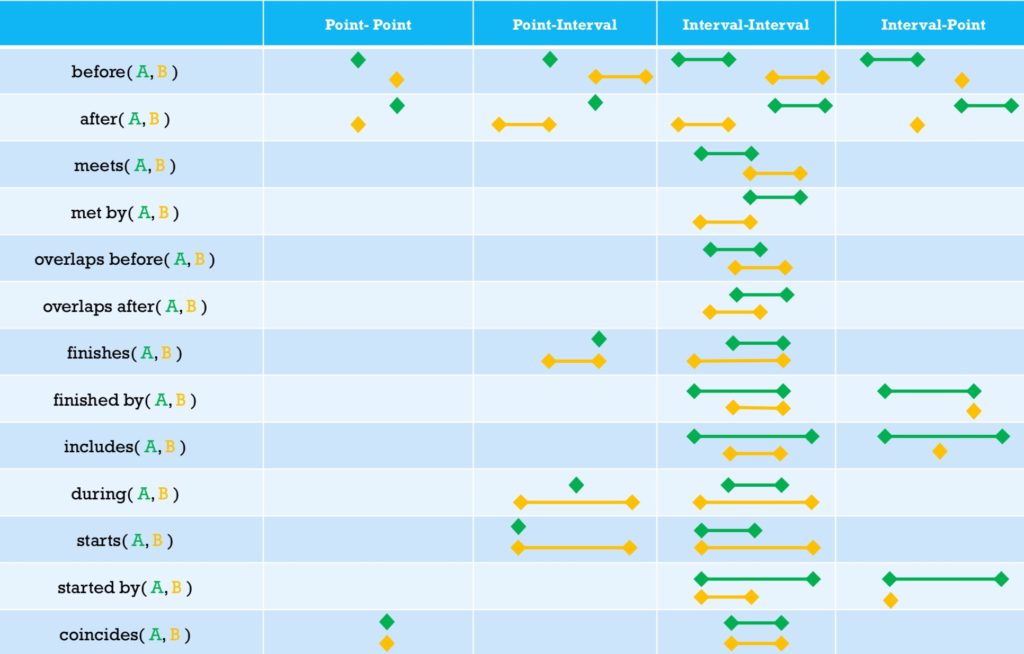

The proposal will be for an extension to FEEL — which already has some basic temporal constructs with date and time types — that provides a more comprehensive representation based on Allen’s interval algebra and Zaidi’s point-interval logic. This would have built-in functions regarding intervals and points, with two levels of abstraction for expressiveness and business friendliness, allowing for DMN to represent temporal relationships between points, between points and intervals, and between intervals.
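To make the interval-interval case concrete, here is a minimal Python sketch that classifies two intervals into one of the 13 relations of Allen’s interval algebra. It is not the proposed FEEL syntax: the `Interval` type, the `allen_relation` function and the relation name strings are hypothetical names chosen for illustration, and the sketch assumes proper intervals whose start is strictly before their end.

```python
from datetime import date
from typing import NamedTuple


class Interval(NamedTuple):
    """Hypothetical interval type: a start and an end date, start < end."""
    start: date
    end: date


def allen_relation(x: Interval, y: Interval) -> str:
    """Name the Allen relation that holds from x to y."""
    if x.start >= x.end or y.start >= y.end:
        raise ValueError("intervals must have start < end")

    # No shared interior: x lies wholly on one side of y.
    if x.end < y.start:
        return "before"
    if x.end == y.start:
        return "meets"
    if y.end < x.start:
        return "after"
    if y.end == x.start:
        return "met-by"

    # From here on the intervals share at least part of their interior.
    if x.start == y.start and x.end == y.end:
        return "equals"
    if x.start == y.start:
        return "starts" if x.end < y.end else "started-by"
    if x.end == y.end:
        return "finishes" if x.start > y.start else "finished-by"
    if y.start < x.start and x.end < y.end:
        return "during"
    if x.start < y.start and y.end < x.end:
        return "contains"
    return "overlaps" if x.start < y.start else "overlapped-by"


project = Interval(date(2019, 9, 1), date(2019, 9, 10))
review = Interval(date(2019, 9, 5), date(2019, 9, 20))
print(allen_relation(project, review))
```

Point-point and point-interval relations could be sketched the same way with plain date comparisons; they are omitted here to keep the example short.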

The proposal also includes a more “business person common sense” interpretation for interval overlaps and other constructs: note that 11 of the possible interval-interval relationships fall into this category, which makes this into a simpler before/after/overlap designation. Given all of these representations, plus more robust temporal functions, the standard can then allow expressions such as “interval X starts 3 days before interval Y” or “did this happen in September”.
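As a rough illustration of what such expressions compute, the Python sketch below implements a coarse before/after/overlap designation and the two example questions quoted above. The function names are hypothetical and are not taken from the proposal. The coarse grouping also rests on an assumption about how the count of 11 arises: every relation other than strictly "before" and strictly "after" is treated as "overlap", including intervals that merely share an endpoint.

```python
from datetime import date, timedelta
from typing import Tuple

# Hypothetical representation: an interval is a (start, end) pair of dates.
Interval = Tuple[date, date]


def coarse_relation(x: Interval, y: Interval) -> str:
    """Collapse the interval-interval relations into before/after/overlap.

    Assumption: anything that is not strictly before or strictly after
    counts as overlap, so touching intervals are reported as overlapping.
    """
    if x[1] < y[0]:
        return "before"
    if y[1] < x[0]:
        return "after"
    return "overlap"


def starts_days_before(x: Interval, y: Interval, days: int) -> bool:
    """'Interval X starts N days before interval Y' (exactly N days)."""
    return y[0] - x[0] == timedelta(days=days)


def happened_in_month(event: date, month: int) -> bool:
    """'Did this happen in September?' becomes happened_in_month(event, 9)."""
    return event.month == month


x = (date(2019, 9, 1), date(2019, 9, 10))
y = (date(2019, 9, 4), date(2019, 9, 20))
print(coarse_relation(x, y))
print(starts_days_before(x, y, 3))
print(happened_in_month(date(2019, 9, 17), 9))
```

Whether "starts 3 days before" should mean exactly three days or at least three days is a design choice the sketch makes arbitrarily; the post does not say which reading the proposal takes.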

This is my first time at DecisionCAMP (formerly RulesFest), and I’m totally loving it. It’s full of technology practitioners (vendors, researchers and consultants) who are more interested in discussing interesting ways to improve decision management and the DMN standard than in plugging their own products. I’m not as much of a decision management expert as I am in process management, so there are great learning opportunities for me.

Bruce Silver, also a huge contributor to BPMN and DMN standards, and author of the BPMN Method & Style books and now the DMN M&S, presented an application for buying a stock at the right time based on price patterns. For investors who time the market based on pricing, the best way to do this is to look at daily min/max trends and fit them to one of several base type models. Bruce figured that this could be done with a decision table applied to a manipulated version of the data, and automated this for a range of stocks using a one-year history, processing in Excel, and decision services in the Trisotech cloud. This is a practical example of using decision services in a low-code environment by non-programmers to do something useful. His demo showed us the decision model for doing this, then the data processing (smoothing) done in Excel. However, for an application that you want to run every day, you’re probably not going to want to do the manual import/export of data, so he showed how to automate/orchestrate this with

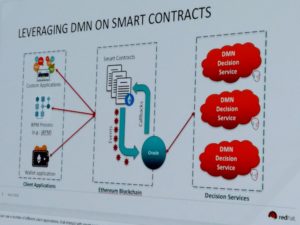

Edson Tirelli of Red Hat, Bruce Silver’s co-author on the above-mentioned DMN Cookbook, finished this section of DMN presentations with a combination of blockchain and DMN, where DMN is used to define the business language for calculations within a smart contract. His demo showed a smart land registry case, specifically a transaction for selling a property involving a seller, a buyer and a settlement service created in DMN that calculates taxes and insurance, with the purchase being executed using cryptocurrency. He mentioned