With the workshops finished yesterday, we kicked off the main BPM 2019 program with a welcome from co-organizers Jan Mendling and Stefanie Rinderle-Ma, and greetings from the steering committee chair Mathias Weske. We heard briefly about next year’s conference in Sevilla and the 2021 conference in Rome (I’m already looking forward to both), then remarks from Tatjana Oppitz of the WU Rectorate (Vice-Rector for Digitalization) on the importance of BPM in transforming businesses. This is the largest year yet for this academic/research BPM conference, with more than 400 submissions and almost 500 attendees from 50 countries, a clear indication of interest in the field. It’s also great to see so many BPM vendors participating as sponsors and in the industry track, since I’m an obvious proponent of two-way collaboration between academia and practitioners.

The main keynote speaker was Kalle Lyytinen of Case Western Reserve University, discussing digitalization and BPM. He showed some of the changes in business due to process improvement and design (including the externalization of processes to third parties), and the impacts of digitalization, that is, deeply embedding digital data and rules into organizational context. He went through some history of process management and BPM, with the goals focused on maximizing use of resources and optimization of processes. He also covered some of the behavioral and economic views of business routines/processes in terms of organizational responses to stimuli, and a great analogy (to paraphrase slightly) that pre-defined processes are like maps, while the performance of those processes forms the actual landscape. This results in two different types of approaches for organized activity: the computational metaphor of BPM, and the social/biological metaphor of constantly-evolving routines.

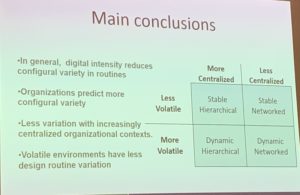

He defined digital intensity as the degree to which digitalization is required to perform a task, and considered how changes in digital intensity impact routines: in other words, how is technology changing the way we do things on a micro level? Lyytinen specializes in part in the process of designing systems (since my degree is in Systems Design Engineering, I find this fascinating), and showed some examples of chip design processes and how they changed based on the tools used.

He discussed a research study, and the resulting paper, that he and others carried out on the implementation of an SAP financial system at NASA. Their conclusion is that routines (that is, the things that people do within organizations to get their work done) adapted dynamically to the introduction of the IT-imposed processes. Digitalization initially increases variation in routines, but then the changes decrease over time, perhaps as people become accustomed to the new way of doing things and start using the digital tools. He sees automation and workflow efficiency as an element of a broader business model change, and transformation of routines as complementary to but not a substitute for business model change.

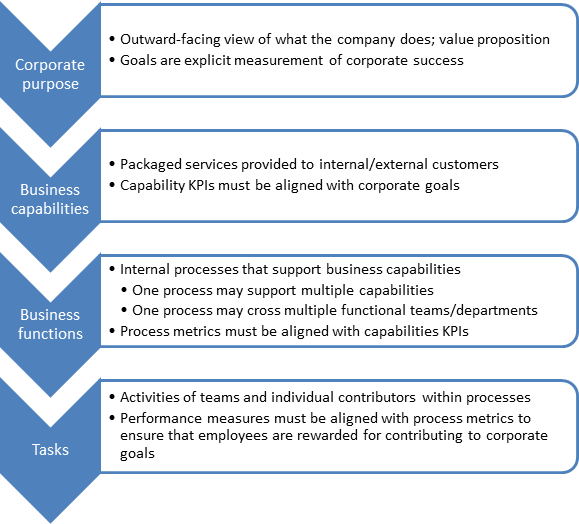

The design of business systems and models needs to consider both the processes provided by digitalization (BPM) and the interactions with those digital processes, which are made up of the routines that people perform.

There was a question, or more of a comment, from Wil van der Aalst (the godfather of process mining) on whether Lyytinen’s view of BPM is based on the primarily pre-defined BPM of 20 years ago, and whether process mining and more flexible process management tools are a closer match to the routines performed by people. In other words, we now have analytical techniques that can identify and codify processes that are closer to the routines. In my opinion, we don’t always have the ability to easily change our processes unless they are in a BPM or similar system; Lyytinen’s SAP at NASA case study, for example, was unlikely to have very flexible processes. However, van der Aalst’s point is well taken: more flexible ways of digitally managing processes are definitely having an impact, letting us encode routines rather than forcing routines to change to fit digital processes.

There was also a good discussion on digital intensity, sparked by a question from Michael Rosemann: although we might not all become Amazon-like in the digital transformation of our businesses, there are definitely now activities in many businesses that just can’t be done by humans. This represents a very different level of digital intensity from many of our organizational digital processes, which are just automated versions of human routines.