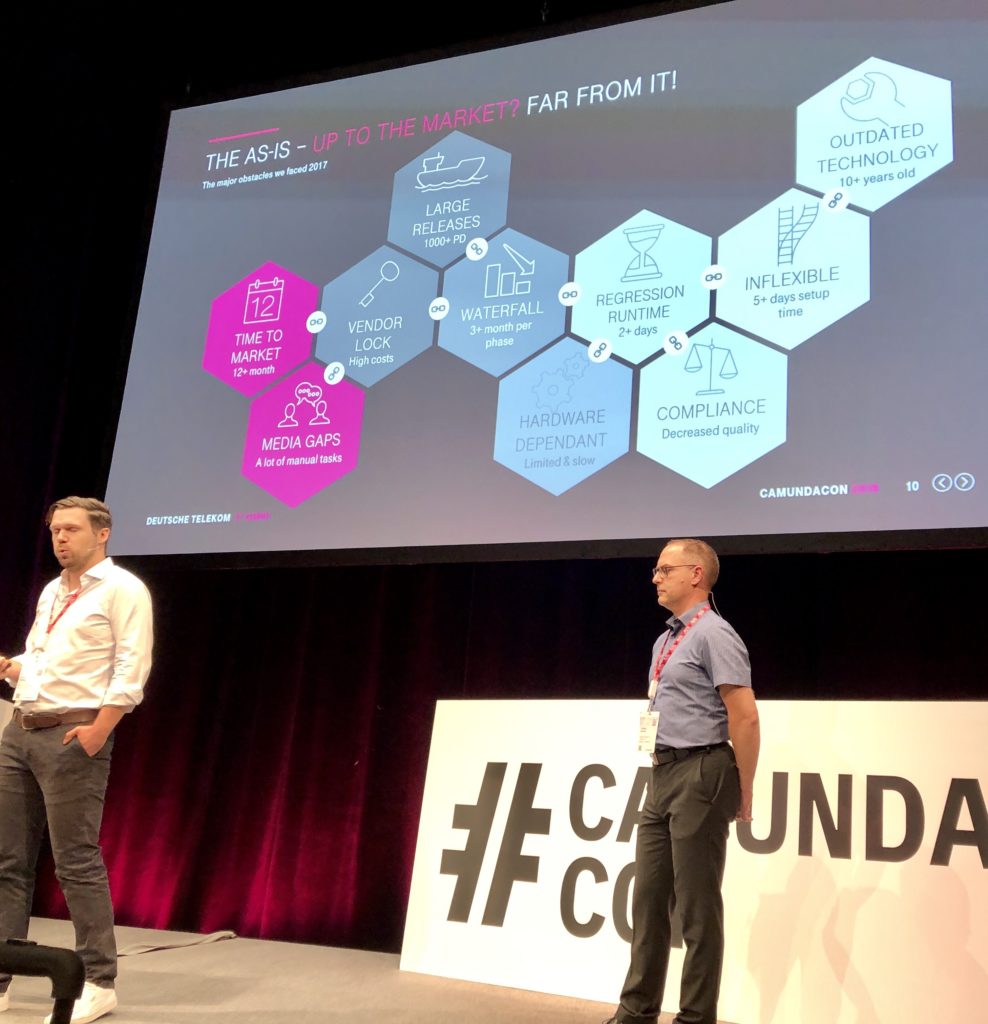

It’s definitely webinar season! I’ve seen a lot of webinar invitations pass by recently, and I’ll be speaking on a couple in the coming weeks. Today, I listened in on a webinar about the Camunda Cloud public beta, with Daniel Meyer (Camunda CTO) discussing their drivers for creating it, and Immanuel Monma providing a demo. I heard about the Camunda Cloud at CamundaCon last September, and it’s good to see that they’re launching it so soon.

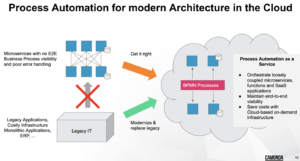

Meyer spoke about using cloud-based process automation for modernizing legacy infrastructure, and the requirements that they had for re-inventing process automation for the cloud:

- Externalize processes from business applications (this isn’t really new, since it’s been a driver for BPM systems all along).

- Maximize developer productivity by allowing them to work within their programming language of choice.

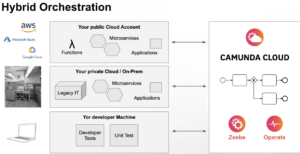

- Support hybrid orchestration with both cloud and on-premise applications, and across multiple public cloud platforms.

- Native BPMN execution.

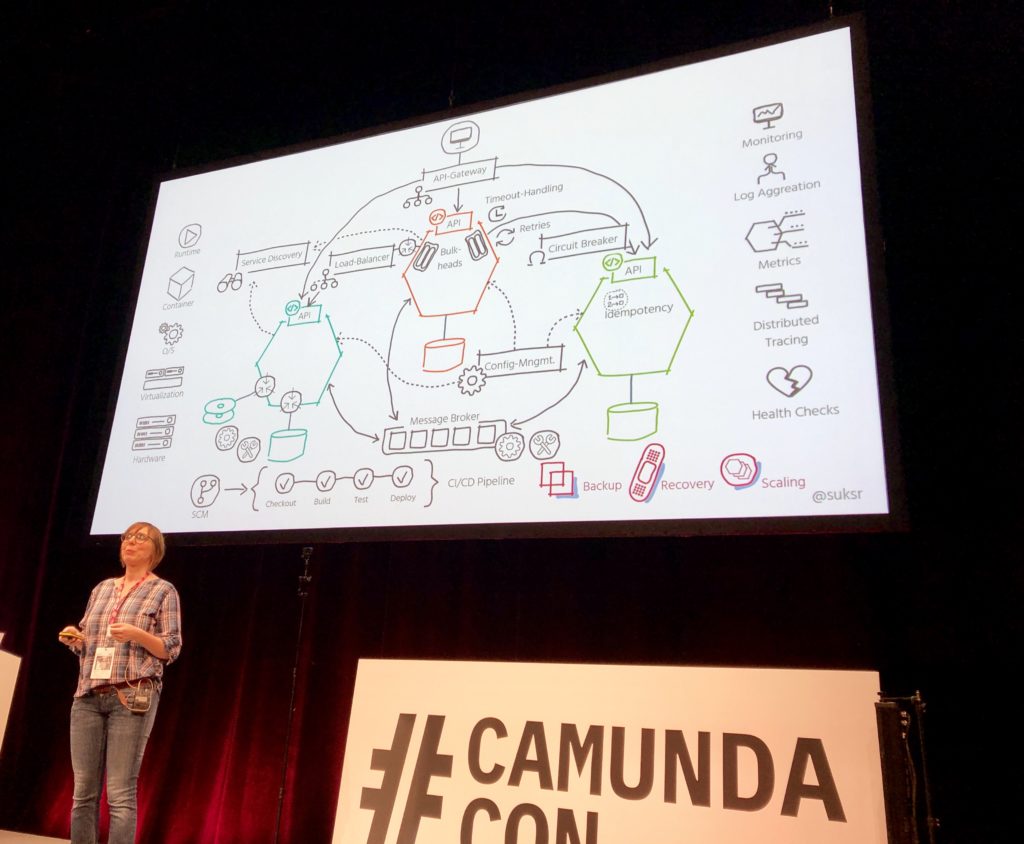

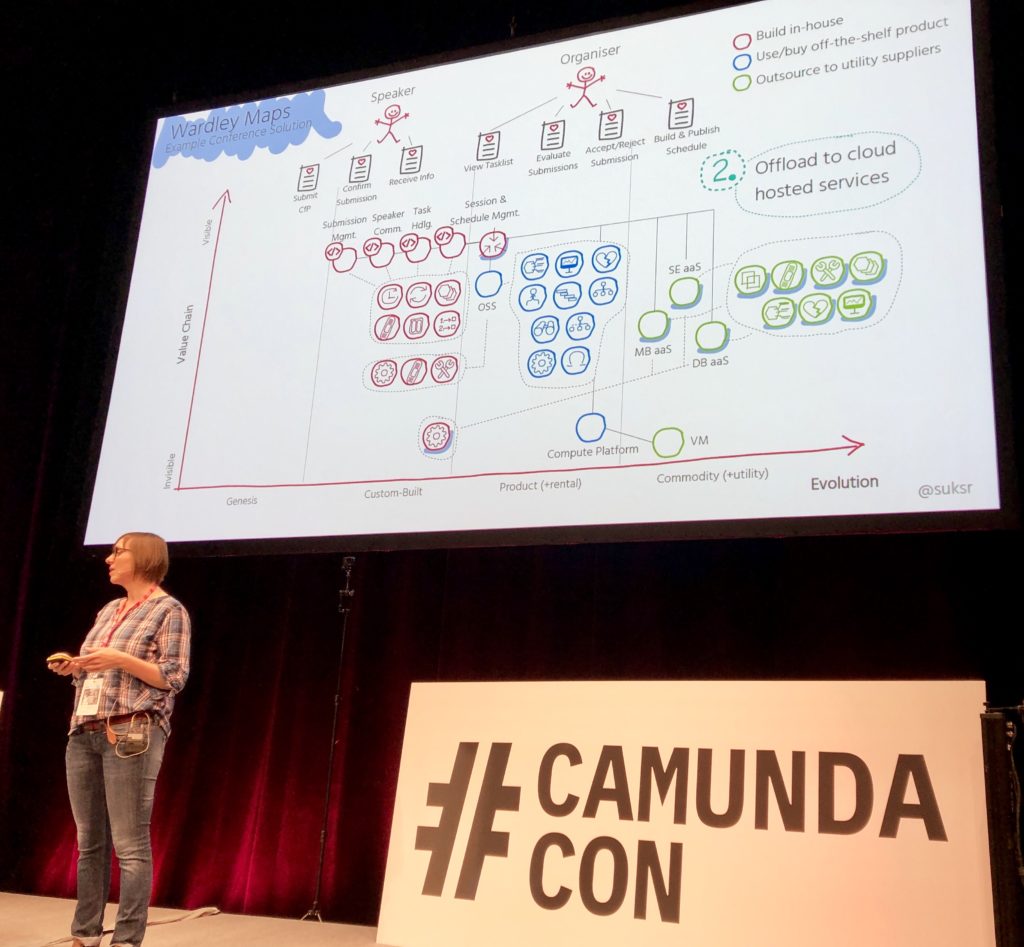

- Cloud scalability and resilience.

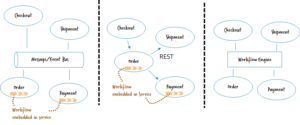

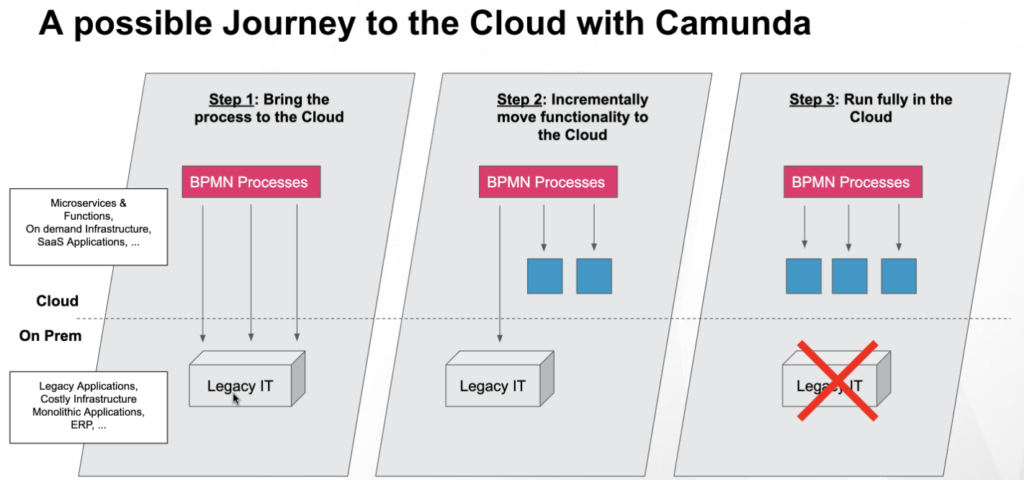

This is where their Zeebe workflow engine comes in, which is at the core of Camunda Cloud. By supporting hybrid orchestration, Camunda Cloud allows for a gradual migration of legacy on-premise IT by first externalizing the processes, then migrating some of the legacy functionality to cloud-based microservices while still supporting direct contact with the legacy IT, then gradually (if possible) migrating all of the legacy functionality to the cloud. This gains the advantage of both a microservices architecture for modularity and scalability, and process orchestration to knit things together for loose coupling with end-to-end visibility.
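To make the gradual migration idea concrete, here is a minimal sketch of an externalized process whose steps are carried out by swappable workers. It is a toy model only: `Orchestrator`, the task types, and the handler functions are hypothetical names invented for illustration, and none of this is the Zeebe or Camunda Cloud API.

```python
from typing import Callable, Dict, List

# Hypothetical names throughout; this is NOT the Zeebe or Camunda Cloud API.
Handler = Callable[[dict], dict]


class Orchestrator:
    """Toy process engine: runs an ordered list of task types via registered workers."""

    def __init__(self, steps: List[str]) -> None:
        self.steps = steps                      # the externalized process definition
        self.workers: Dict[str, Handler] = {}   # task type -> current implementation
        self.audit_log: List[str] = []          # end-to-end visibility of what ran

    def register(self, task_type: str, handler: Handler) -> None:
        # Registering again for the same task type replaces the earlier worker.
        self.workers[task_type] = handler

    def run(self, variables: dict) -> dict:
        for task_type in self.steps:
            handler = self.workers[task_type]
            # Merge the worker's result into the process variables.
            variables = {**variables, **handler(variables)}
            self.audit_log.append(f"{task_type} -> {handler.__name__}")
        return variables


def legacy_check_credit(variables: dict) -> dict:
    # Stands in for a call to an on-premise legacy system.
    return {"credit_ok": variables["amount"] <= 1000, "checked_by": "legacy"}


def cloud_check_credit(variables: dict) -> dict:
    # Stands in for a cloud-based microservice with the same contract.
    return {"credit_ok": variables["amount"] <= 1000, "checked_by": "cloud"}


def legacy_ship_order(variables: dict) -> dict:
    return {"shipped": variables["credit_ok"]}


# Step 1: externalize the process while every step still calls legacy IT.
engine = Orchestrator(steps=["check-credit", "ship-order"])
engine.register("check-credit", legacy_check_credit)
engine.register("ship-order", legacy_ship_order)
before = engine.run({"amount": 250})

# Step 2: migrate one step to the cloud; the process definition is untouched.
engine.register("check-credit", cloud_check_credit)
after = engine.run({"amount": 250})
```

The point of the sketch is that the process definition (`steps`) stays the same while individual workers move from legacy to cloud one at a time, and the audit log records which implementation handled each step.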

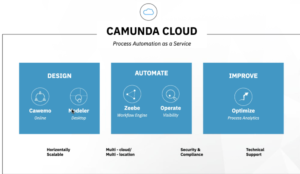

The live demo showed the interaction between Zeebe and Operate, the two main execution components of Camunda Cloud, plus Cawemo for collaborative modeling of the processes (although the process could have just been modeled in the Zeebe Modeler). Monma walked us through how to create, deploy and execute a simple BPMN process in Camunda Cloud; watching the webinar replay would be a great place to start if you want to play around with the beta. Note that aside from creating the BPMN model in Cawemo, which may involve business people, this is a technical developer toolset for service orchestration and automated processes at this point. You can plug into their Zeebe Slack community or forum to interact with other developers who are trying things out.

Meyer returned with the product roadmap, then handled questions from attendees. Right now, Camunda Cloud is a free public beta, although there are some limitations; they will be launching the GA version shortly (he said “hopefully within the next month”), which will allow better control over clusters and include SLA-based technical support. They are also adding human workflow with a tasklist, providing both an API and a simple out-of-the-box UI, which will also push the addition of the human task type to the Zeebe BPMN coverage. They will be adding analytics via a cloud version of Optimize. The Camunda components are running in their cloud, which currently runs on Google Cloud in an automated Kubernetes structure; in the future, they will expand this to run in multiple geographic regions to better support applications in those regions. They may consider running on different cloud platforms, although since this is hidden from the Camunda Cloud customers, it may not be necessary. There were a number of other good questions on hybrid orchestration, the use of RPA, and how the underlying event-streaming distributed architecture of Zeebe provides for vastly greater scalability than most BPM systems.

You’ll be able to see the webinar replay (typically without registration) on the webinar information page as soon as they publish it.