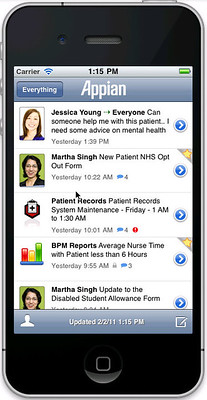

Murray Rode, COO of TIBCO, started the analyst briefings with an overview of technology trends (as we heard this morning: mobile, cloud, social, events) and business trends (loyalty and cross-selling, cost reduction and efficiency gains, risk management and compliance, metrics and analytics), which combine to create the four themes that they’re discussing at this conference: digital customer experience, big data, social collaboration, and consumerization of IT. TIBCO provides a platform of integrated products and functionality in five main areas:

- Automation, including messaging, SOA, BPM, MDM, and other middleware

- Event processing, including events/CEP, rules, in-memory data grid and log management

- Analytics, including visual analysis, data discovery, and statistics

- Cloud, including private/hybrid model, cloud platform apps, and deployment options

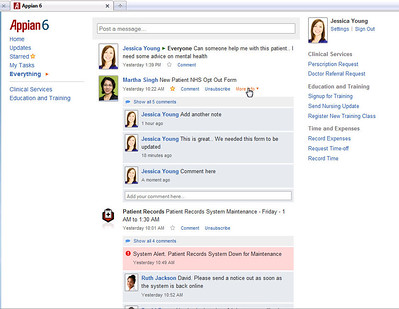

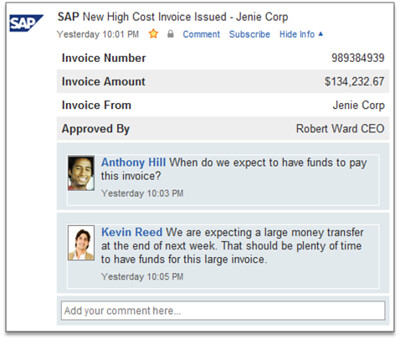

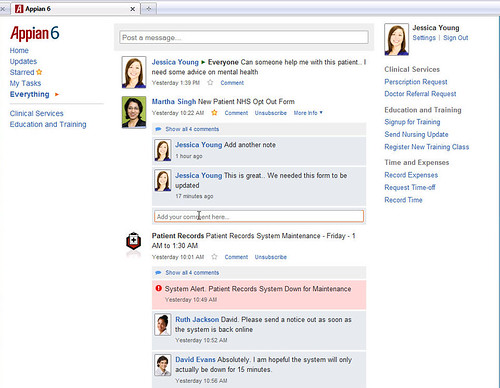

- Social, including enterprise social media, and collaboration

A bit disappointing to see BPM relegated to being just a piece of the automation middleware, but important to remember that TIBCO is an integration technology company at heart, and that’s ultimately what BPM is to them.

Taking a look at their corporate performance, they have almost $1B in revenue for FY2011, showing growth of 44% over the past two years, with 4,000 customers and 3,500 employees. They continue to invest 14% of revenue into R&D with a 20% increase in headcount, and significant increases in investment in sales and marketing, which is pushing this growth. Their top verticals are financial services and telecom, and while they still do 50% of their business in the Americas, EMEA is at 40%, with APJ making up the other 10% and showing the largest growth. They have a broad core sales force, but have dedicated sales forces for a few specialized products, including Spotfire, tibbr and Nimbus, as well as for vertical industries.

They continue to extend their technology platform through acquisitions and organic growth across all five areas of the platform functionality. They see the automation components as being “large and stable”, meaning we can’t expect to see a lot of new investment here, while the other four areas are all “increasing”. Not too surprising considering that AMX BPM was a fairly recent and major overhaul of their BPM platform and (hopefully) won’t need major rework for a while, and the other areas all include components that would integrate as part of a BPM deployment.

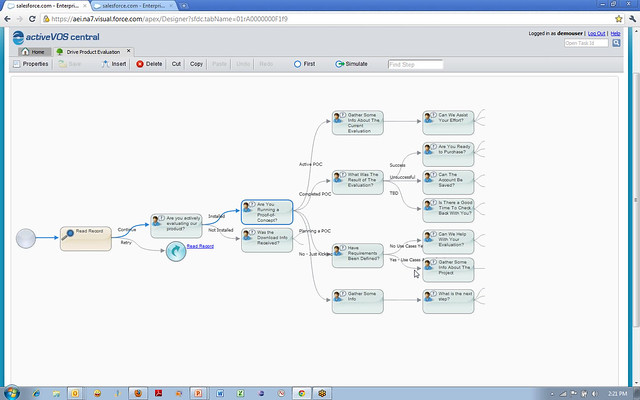

Matt Quinn then reviewed the technology strategy: extending the number of components in the platform as well as deepening the functionality. We heard about some of this earlier, such as the new messaging appliances and Spotfire 5 release, some recent releases of existing platforms such as ActiveSpaces, ActiveMatrix and Business Events, plus some cloud, mobile and social enhancements that will be announced tomorrow so I can’t tell you about them yet.

We also heard a bit more on the rules modeling that I saw before the sessions this morning: it’s their new BPMN modeling for rules. This uses BPMN 1.2 notation to chain together decision tables and other rule components into decision services, which can then be called directly as tasks within a BPMN process model, or exposed as web services (SOAP only for now, but since ActiveMatrix is now supporting REST/JSON, I’m hopeful that REST support will follow here too). Sounds a bit weird, but it actually makes sense when you think about how rules are formed into composite decision services.
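To make the idea of a composite decision service a bit more concrete, here’s a minimal Python sketch of chaining two decision tables so that the output of the first feeds the second. This is purely illustrative and is not TIBCO’s API or notation: the `Row`, `DecisionTable` and `DecisionService` classes, the first-match evaluation policy, and the loan example with its thresholds are all my own assumptions.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Facts = Dict[str, Any]


@dataclass
class Row:
    """One row of a hypothetical decision table: a condition and its outputs."""
    condition: Callable[[Facts], bool]
    outputs: Facts


@dataclass
class DecisionTable:
    name: str
    rows: List[Row]

    def evaluate(self, facts: Facts) -> Facts:
        # Assumed first-match policy: the first row whose condition holds wins.
        for row in self.rows:
            if row.condition(facts):
                return row.outputs
        return {}


@dataclass
class DecisionService:
    """Chains tables in order; each table sees the outputs of earlier ones."""
    tables: List[DecisionTable]

    def decide(self, facts: Facts) -> Facts:
        result = dict(facts)  # copy so the caller's input is not mutated
        for table in self.tables:
            result.update(table.evaluate(result))
        return result


# Illustrative tables only; the thresholds are made up for this example.
risk_table = DecisionTable("risk", [
    Row(lambda f: f["credit_score"] < 600, {"risk": "high"}),
    Row(lambda f: f["credit_score"] < 720, {"risk": "medium"}),
    Row(lambda f: True, {"risk": "low"}),
])

approval_table = DecisionTable("approval", [
    Row(lambda f: f["risk"] == "high", {"approved": False}),
    Row(lambda f: f["risk"] == "medium" and f["amount"] > 50000,
        {"approved": False}),
    Row(lambda f: True, {"approved": True}),
])

loan_decision = DecisionService([risk_table, approval_table])
```

A process task (or a web service wrapper) would then just call `loan_decision.decide({"credit_score": 650, "amount": 10000})` and get back the original facts plus the derived `risk` and `approved` values, without needing to know how many tables sit behind the call.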

There was a lot more information about a lot more products, and then my head exploded.

Like others in the audience, I started getting product fatigue, and just picking out details of products that are relevant to me. This really drove home that the TIBCO product portfolio is big and complex, and that the briefing might benefit from being split into a few separate analyst sessions with some sort of product grouping, although there is so much overlap and integration in product areas that I’m not sure how they would sensibly split it up. Even for my area of coverage, there was just too much information to capture, much less absorb.

We finished up with a panel of the top-level TIBCO execs, the first question of which was about how the sales force can even start to comprehend the entire breadth of the product portfolio in order to be successful selling it. This isn’t a problem unique to TIBCO: any broad-based platform vendor, such as IBM or Oracle, has the same issue. TIBCO’s answer: specialized sales force overlays for specific products and industry verticals, and selling solutions rather than individual products. Both of those work to a certain extent, but often solutions end up being no more than glorified templates developed as sales tools rather than actual solutions, and can lead to more rather than less legacy code.

Because of the broad portfolio, there’s also confusion in the customer base, many of whom see one TIBCO product and have no idea of everything else that TIBCO does. Since TIBCO is not quite the household name that IBM or Oracle is, companies don’t necessarily know that TIBCO has other things to offer. One of my banking clients, on hearing that I am at the TIBCO conference this week, emailed “Heard of them as a player in the Cloud Computing space. What’s different or unique about them vs others?” Yes, they play in the cloud. But that’s hardly what you would expect a bank (that uses very little cloud infrastructure, and likely does have some TIBCO products installed somewhere) to think of first when you mention TIBCO.