It’s the first day of bpmNEXT, the conference for BPM visionaries and free thinkers to get together, share ideas, show their cool new stuff, meet new friends and get reacquainted with old ones. This is an opportunity for technologists (primarily senior technical people from BPM vendors) to give demos in a very structured format, but it’s not really a place for customers: it’s more like the BPM Think Tanks of old. It’s organized by Bruce Silver and Nathaniel Palmer, both long-time contributors to the industry, with content provided by a lot of people who are loud and proud about their technology.

That very structured format, in case you haven’t read about or attended bpmNEXT before, is a strictly limited Ignite-style presentation followed by a live demo. This limits the amount of time that presenters can spend showing slides and forces them to get to the good stuff.

You can see the agenda here. We started out the first day with a few keynotes from industry thought leaders before getting to the demo presentations. I’ll cover those in this post, then do individual posts for each section of demos (usually three in a section). These will be rough notes since there’s a lot of information that goes by quickly; you’ll be able to see video of all of the sessions, most likely on the bpmNEXT YouTube channel (where you can also see previous years’ sessions).

The Future of Process in Digital Business, Jim Sinur

Jim Sinur, a long-time Gartner analyst who is now with Aragon Research, spoke about trends in digital businesses. Most of this was a plug for Aragon and its research reports, which seemed aimed at customer organizations. That isn’t a good fit for this audience, since most of the people in the room are well-versed in these technologies and how to apply them in real life.

I’d really like to see more conversational sessions rather than presentations for the keynotes, or at least content that is more directly relevant to the audience.

A New Architecture for Automation, Neil Ward-Dutton

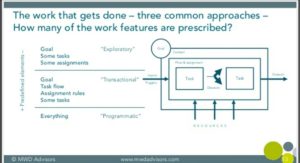

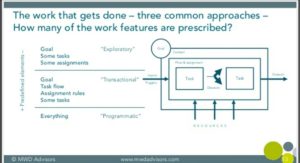

Neil Ward-Dutton, who heads up MWD Advisors, presented a distillation of the conversations that they’re having with customer organizations, starting with the difficult choices that those organizations have to make about which technologies to choose: for example, when RPA vendors tell them that they don’t need BPM any more. He went through some insights into the technologies that are impacting CIOs’ strategic decisions (no surprises there), then presented a schematic model of how work happens in organizations as a basis for understanding how different technologies impact different parts of that work. The framework categorizes work as exploratory, transactional or programmatic, and he walked through what each of those types defines up front and how the technologies are used within each. It’s a good view of how to help organizations think about their work and develop automation strategies that address different work styles and applications.

Although a lot of his presentation was aimed at a general audience that could include customers, Neil finished up with a bit on next moves for vendors and technologists as the technology market changes: there are a lot of mergers and acquisitions going on, and older technologies are being replaced with newer ones in specific instances. He had some recommendations about rearchitecting products and adding value, shifting from one-size-fits-all products to collections of independent runtime services in order to support cloud architectures (especially elastic computing requirements) and provide more flexibility in product offerings.