I’m a few weeks late completing my report on the Camunda Community Day. The first part was on the community contributions and sessions, while the second half documented here is about Camunda showing new things that could be used by the community developers in the audience.

First up was Vladimirs Katusenoks, core developer on BPMN.io, with a presentation on bpmn-js: how it works, and how to extend it with custom functionality such as adding color to BPMN diagrams, which is a permitted extension to BPMN XML. His live coding presentation showed changing the colour of a shape background, either statically in code for the element class or by adding a colour picker to an individual element context palette; this was based on the bpmn-js core BPMN functionality, using bpmn-moddle to read/write into the metamodel and diagram-js to render it. There are a number of other bpmn-js examples on Github.

Next, Felix Müller discussed KPI management, expanding on his August blog post on the topic. KPI management is based on quantitative indicators for process cycle-time improvement, including cycle time and overdue time, plus definitions of the time period, unit of measure and calculation method. In Camunda, KPIs are defined in the Modeler, then monitored in Cockpit. He showed how to use the concept of element templates (that extend core definitions) to create custom fields on collaboration object (process) or individual tasks, e.g., KPI unit (hours, days, minutes) and KPI threshold (number). In Cockpit, this appears as a new tab for KPI Overview, showing a list of individual instances and target/current/average duration, plus an indicator of overdue status of the instance and any contained tasks; there is also a decorator bubble on the top right of the task on the process model to show the number of overdue instances on the aggregate model, or overdue status as a check mark or exclamation on individual models. The Cockpit modifications were done by creating a plug-in to display KPI statistics, which queries and calculates on the fly – a potential performance problem that might be improved through pre-aggregation of statistics. He also demonstrated how to modify this basic KPI model to include an expected duration as well as maximum duration. A good start, although I think there’s a lot more that’s needed here.

Thorsen Lindhauer, a Camunda core BPM developer, discussed how to contribute to the Camunda open source community, both at camunda.org (engine and desktop modeler, same as the commercial product) and bpmn.io (JS tools). Possible contributions include answering questions on forums; logging error reports; documenting ideas for new functionality; and working on code. Code contributions typically start by having a forum discussion about planned new functionality, then a decision is made on whether it will be core code (higher quality standards since it will become part of the commercial product, and will eventually be maintained by Camunda) versus a community extension; this is followed by ongoing development, merge and release cycles. Camunda is very supportive of community contributions, even if they don’t become part of the core product: community involvement is critical to the health of any open source project.

The last presentation of the community day was Daniel Meyer discussing the product roadmap. The next release, 7.6, will be on November 30 – they have a strict twice-yearly release cycle. This release includes updates to DMN, CMMN, BPMN, rolling updates, Cockpit features, and UI/UX in web apps; I have captured a few notes here but see the linked roadmap for a more complete and accurate description and the online documentation as it is rolled out.

- DMN:

- Simpler decision table editing with drop-down lists of comparison/range operators instead of having to remember FEEL or Juel syntax

- Ability to add list of selection values (advanced mode still exists for full flexibility)

- Decisions with literal expressions

- DMN engine performance 4-6x faster

- Support for decision requirements diagrams/graphs (DRD/DRG) that can link decision tables; visualization in Modeler and Cockpit are not there yet but the structures are supported – in my experience, this is typical of Camunda, which builds and releases the engine capabilities early then follows with the visualization, allowing for a quicker start for executable diagrams

- CMMN:

- Modeler now completely models CMMN including technical attributes such as listeners

- Cockpit (visualization still incomplete although we saw a brief view) will allow linking models of same or different types

- Engine feature and functionality improvements

- Rolling updates allow Camunda process engine to be updated without shutdown: guaranteed backwards compatibility of database schema to allow database to be updated first, then roll updates of engines by taking each offline individually and allowing load balancer to reroute sessions.

- BPMN:

- BPMN conditional event supported

- Improved modeling including labels, collapsing/expanding subprocesses to switch between view types, and field injections in property panel.

- Cockpit:

- More flexible/granular human task monitoring

- New welcome page with links to apps (Cockpit, Tasklist, Admin), user profile, and frequent links

- Batch operations (cancel, suspend, etc.) based on batch action capability built for instance migration

- CMMN and DMN DRD visualization

Daniel discussed some other minor improvements based on customer feedback, plus plans for 2017, including a web modeler for collaborative BPMN, CMMN and DMN modeling via a SaaS offering and a future on-premise version. They finished the day with a poll and community feedback to establish priorities for future versions.

I stayed on for the second day, which is actually a separate conference: BPMCon for Camunda’s enterprise (commercial) customers. Rather, I stayed on for Neil Ward-Dutton’s keynote, then ducked out for most of the rest of day, which was in German. Neil’s keynote included results from workshops that he has done with executives on digital transformation, and how BPM can be used to create the bridges between the diverse parts of a digital business (internal to external, automated to people-centric), while tracking and coordinating the work that flows between the different areas.

Disclaimer: Camunda paid my travel expenses to attend both conference days. I was not compensated in any way for attending or for writing this post, and the opinions here are my own.

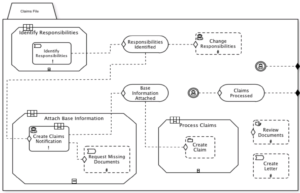

I recently wrote a paper on how case management technology can be used in insurance claims processing, sponsored by DST (but not about their products specifically). From the paper overview:

I recently wrote a paper on how case management technology can be used in insurance claims processing, sponsored by DST (but not about their products specifically). From the paper overview: