There has been much speculation in the BPM world about Software AG’s online BPM community, originally dubbed AlignSpace and recently renamed ARISalign. Since its private beta launch months ago, those of us on the outside have been anticipating a look at how they plan to “combine social networking tools with intuitive tools for process design and modeling [to] collaborate effectively to create and improve processes”.

A few weeks ago, before the official beta release, I had a briefing with Thomas Stoesser of Software AG for a closer look, and I’ve been playing around with it myself since the beta opened. With ARISalign, they’re providing tools for collaboratively capturing business processes during early process discovery, as well as an open BPM community that anyone can join, not just ARIS and webMethods users. They’re also planning a marketplace for BPM-related products and services, although that’s not part of the current offering.

Logging in to ARISalign, you see a home dashboard that shows a feed of updates on your projects, groups, discussions and networks, plus a message center and a list of your current projects. There’s also a Facebook-like status feature, although I’m not sure that I’d use this feature since it’s unlikely to be my primary social network – I don’t even do Facebook status updates any more since I started Twittering.

Projects are how process artifacts are organized in ARISalign, with a project including a number of components:

- A whiteboard, similar in appearance to Lombardi Blueprint and other process discovery tools, that allows users to add “stages”, then activities that belong to each stage.

- Any number of process maps that can be linked to, but not generated from, the activities on the whiteboard.

- A discussion forum, which provides a simple threaded discussion board within the project.

- A library of related files/documents that can be uploaded as background or reference materials. Currently, the library can only contain uploaded content, not links to content that is hosted elsewhere; links would have to be added in a discussion thread.

If you like the project framework but don’t plan to add process models, then a group has all the same features as a project except for the whiteboard and process maps: you can use it if you want only a discussion forum, library and timeline shared between a group of people.

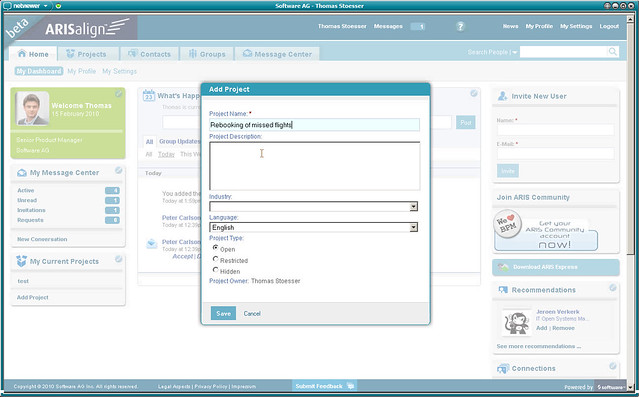

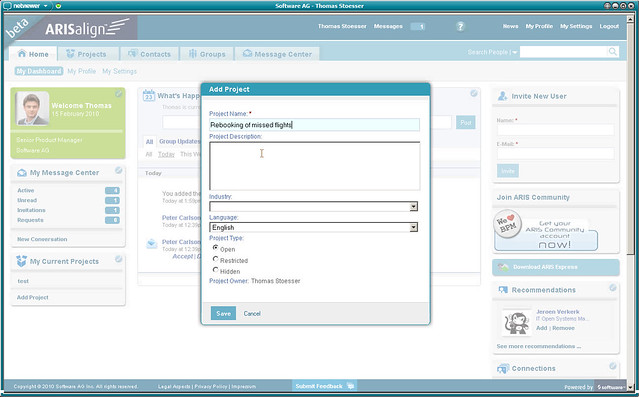

Creating a project requires only a project name: everything else is optional or has reasonable defaults. You can add a description, and select industry and language from predefined lists, although these are used only as project search metadata and don’t change the form of the project in any way. You can also select the access control for viewing the project, confusingly called “Project Type”: open (visible to all), restricted (anyone can see the project in a search list, but not its details or content) or hidden (not visible to non-members, even in search results). On every project, you must join in order to participate, which may or may not require a project administrator’s approval.

There are three roles that a member can be assigned for a specific project:

- Project administrator, including the project owner/creator, which allows all functions including administering members, changing user roles, and archiving and renaming content.

- Project contributor, which allows working with tools and adding content.

- Project reviewer, which allows viewing content, participating in discussions and adding comments, but not changing content such as process models.

Unfortunately, there is no way to change the project owner from the original creator, although this is in the future plans, as is the idea of creating project templates for faster startup.
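To make the visibility settings and roles described above concrete, here is a minimal Python sketch of how the rules combine. It is an illustration only, not ARISalign’s API or internal design: every class, function and permission name is hypothetical, and it assumes that each role includes everything the role below it can do, which the role descriptions suggest but don’t spell out.

```python
from enum import Enum
from typing import Optional


class ProjectType(Enum):
    """Visibility setting, labelled "Project Type" in the ARISalign UI."""
    OPEN = "open"              # visible to everyone
    RESTRICTED = "restricted"  # listed in search results, content hidden
    HIDDEN = "hidden"          # invisible to non-members, even in search


class Role(Enum):
    REVIEWER = "reviewer"
    CONTRIBUTOR = "contributor"
    ADMINISTRATOR = "administrator"


# Hypothetical permission names. Assumption: each role also gets
# everything the role below it can do.
_REVIEWER = {"discuss", "comment"}
_CONTRIBUTOR = _REVIEWER | {"use_tools", "add_content", "edit_models"}
_ADMINISTRATOR = _CONTRIBUTOR | {"manage_members", "change_roles",
                                 "archive_content", "rename_content"}

PERMISSIONS = {
    Role.REVIEWER: _REVIEWER,
    Role.CONTRIBUTOR: _CONTRIBUTOR,
    Role.ADMINISTRATOR: _ADMINISTRATOR,
}


def listed_in_search(project_type: ProjectType, is_member: bool) -> bool:
    """Open and restricted projects appear in search; hidden ones only to members."""
    return is_member or project_type is not ProjectType.HIDDEN


def can_view_content(project_type: ProjectType, is_member: bool) -> bool:
    """Non-members can see the details and content of open projects only."""
    return is_member or project_type is ProjectType.OPEN


def can_participate(role: Optional[Role], action: str) -> bool:
    """Participation requires joining the project, so non-members (role None) get nothing."""
    if role is None:
        return False
    return action in PERMISSIONS[role]
```

Keeping visibility (the project type) separate from participation (the member’s role) mirrors the distinction in the product: a restricted project can be found by anyone, but nothing inside it is visible or editable until you join.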

For an existing project, members will often start on the project dashboard, where they can view a feed of all activity on the project (echoing a user’s personal dashboard, which shows activity for the user, their projects and their network). Similar to functionality recently added to Facebook, a user can hide specific people, models and activities on the dashboard; this filters only their own view of the project dashboard, not everyone’s.

To begin process modeling, however, you’ll work in the project’s whiteboard tab, a near-real-time collaborative process discovery tool. You add high-level process steps, or stages, then add activities below each stage: a process discovery paradigm that lets non-process-oriented users simply list the steps involved in the process. All project members see each other’s changes as they occur, and can invite additional project members directly from the whiteboard view. Activities can be assigned properties, including comments by project reviewers; an activity with comments shows a pencil icon so that others know the comments exist. In the future, activities will also be able to have attachments; currently, attachments can only be added to the project library.

The whiteboard view also allows adding goals and KPIs, although these are purely informational and can’t (yet) be applied to any process models created within that project. In the future, there may be value in linking KPIs to the process models and exporting them for use in other tools.

Unlike some other process discovery tools, the whiteboard view does not auto-generate a process model (apparently there was quite a bit of internal design debate over whether to do this), but one or more process models can be added to the project. Adding or editing a process model opens a split-screen view with the whiteboard and the process model; dragging an activity from the whiteboard to the process model creates a link between the two, so that highlighting the activity on the whiteboard also highlights it on the process model, and vice versa. Because a whiteboard activity may be linked to more than one process model, changes to the activity are not propagated to the process models. There can also be whiteboard activities that don’t end up on any process model.

I’m not sure that I’m on board with this approach. First, I would like to see a way to auto-generate a process model from the whiteboard; second, I think that if something is in the whiteboard view, it needs to be on a process model somewhere: otherwise, why is it in the whiteboard view at all? The reasoning appears to be that the process model is intended to be executable, so it includes only the things that might end up in a BPMS, whereas the whiteboard also includes purely manual tasks. Allowing multiple processes from one whiteboard seems to make sense at first, since non-process people don’t have to think about what constitutes a distinct process, but on second thought, I’m not sure that’s the right way to go.
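The whiteboard-to-model linkage behaves like a many-to-many link table, which the following minimal Python sketch illustrates. It is a hypothetical model of the behavior described above, not how Software AG implemented it; the class and method names are invented for illustration.

```python
class WhiteboardLinks:
    """Hypothetical many-to-many link table between whiteboard activities
    and shapes on process models. Links are references only: nothing here
    copies or propagates activity changes to the models."""

    def __init__(self) -> None:
        # activity name -> set of (model name, shape id) pairs
        self._links: dict[str, set[tuple[str, str]]] = {}

    def drag_to_model(self, activity: str, model: str, shape_id: str) -> None:
        """Dragging an activity onto a model records a link; the same
        activity may be dragged onto several models."""
        self._links.setdefault(activity, set()).add((model, shape_id))

    def highlight_from_whiteboard(self, activity: str) -> set[tuple[str, str]]:
        """Model shapes to highlight when an activity is selected on the whiteboard."""
        return set(self._links.get(activity, set()))

    def highlight_from_model(self, model: str, shape_id: str) -> set[str]:
        """Whiteboard activities to highlight when a model shape is selected."""
        return {activity for activity, shapes in self._links.items()
                if (model, shape_id) in shapes}

    def unlinked(self, whiteboard: list[str]) -> list[str]:
        """Whiteboard activities that don't appear on any process model."""
        return [activity for activity in whiteboard if not self._links.get(activity)]
```

In this sketch, one activity can point at shapes on several models, which is also why there is no single obvious target to push a whiteboard edit to, and activities with no links at all are simply allowed.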

The more I dig into this, the more I’m left with the feeling that this is a front end for webMethods, not an ARIS extension, although the process modeling palette looks more like ARIS Express than the webMethods Designer. ARISalign is intended to be a purely business tool, so it doesn’t expose web services calls or other technical complexities.

Process models can be exported to webMethods format or XPDL, or opened directly in ARIS Express, but there’s no round-tripping: bringing a model back from ARIS Express requires uploading it as a different project. ARIS Express now supports collaborative “whiteboard” models, so the whiteboard can be exported and opened in ARIS Express as well as the process model. There are no offline capabilities; the only offline alternatives would be to export to ARIS Express and then upload the changed models to a different project, or to take screenshots of the ARIS Express changes and add them as images to the project library to document offline changes. Neither of these is particularly attractive, so ARISalign may not be an option if you need offline access. There are plans to improve the ARIS Express integration in the future, possibly allowing a process model to be downloaded and locked for editing in ARIS Express, then re-uploaded in place.

There’s a view of all process models in a project, which allows those models to be managed (renamed, exported, deleted), but any editing of the models occurs in the split-screen view with the project whiteboard.

Aside from the project functionality, there are a number of social networking features for managing your profile and your connections. You can set different views of your profile for your network or for public display, and can view recommendations of people you might want to connect with based on company, industry and shared contacts. The Message Center is very Google Wave-like, with participants shown at the top and public or private replies allowed to any part of the thread. This has the potential to become the conversation framework used within projects, replacing the current simple discussion groups. In general, the UI is quite nice (although some may not like that it was built with Adobe Flex), and borrows liberally from successful features of Facebook and other social networks. The navigation is quite flat, making it easy to find your way around the interface.

Software AG also showed off an ARISalign iPhone app at CeBIT, although it’s not generally available yet. I’m not sure I’d use it for much process modeling, but it would be useful for tracking what’s happening on projects, accepting invitations, participating in discussions and even viewing (or lightly editing) the whiteboard.

Currently, ARISalign is available only as a hosted solution, running on the US region of Amazon Web Services. It’s architected so that on-premise hosting could be enabled in the future, although that isn’t possible in the current version. Software AG should consider offering a version hosted in the EU AWS region, since many organizations don’t want their information (even process models that don’t contain customer data) hosted in the US because of privacy laws.

This is the first publicly-available version of ARISalign, and no one expects it to be perfect. How quickly Software AG can respond to users’ requests for new functionality – such as the inclusion of a marketplace for add-on applications and services – will be the real test of success, as I mentioned in my recent review of the IBM BlueWorks community.

There’s also the issue of merging the existing ARIS Community with ARISalign, or at least cross-linking user accounts, which seems a logical step but is not permitted by German privacy laws until Software AG and IDS Scheer officially become a single company, which could still be several months away. The two sites may never be merged: you can imagine the ARIS Community site keeping product support for ARIS and remaining more actively managed, while user-generated content such as discussions, as well as the more generic tools, moves over to ARISalign. You can be sure that there will be some internal politics around this decision, too. Regardless, in the meantime there’s a badge in the sidebar of each site linking to the other and encouraging you to sign up there. That might, however, cause a bit of social networking fatigue for many business users.

Salesforce acquired Informavores for its process design and execution engine, which became Visual Process Manager. This is primarily human-centric BPM; it’s not intended as a system-centric orchestration platform, since most customers already have extensive middleware for integration, usually on-premise and already integrated with their Force.com apps, so they don’t need that capability. As a result, although a process step can call a web service or pretty much anything else within the existing Force.com platform, asynchronous web service calls are not supported; those are expected to be handled by the middleware layer.

Salesforce acquired Informavores for their process design and execution engine, and that became Visual Process Manager. This is primarily human-centric BPM; it’s not intended as a system-centric orchestration platform, since most customers already have extensive middleware for integration, usually on-premise and already integrated with their Force.com apps so don’t need that capability. That means that although a process step can call a web service or pretty much anything else within their existing Force.com platform, asynchronous web service calls are not supported; this would be expected to be done by that middleware layer.