Our second keynote on the first day of bpmNEXT 2019 is with long-time presenter Jim Sinur, looking at technology combinations that digitally deliver. Unlike his usual focus on future directions, he’s driving down into what technologies work for companies that are undergoing digital transformation. This is a great lead-in to what I’ll be talking about tomorrow morning, and I fully expect to be fine-tuning my presentation before then to incorporate ideas from Jim’s presentation as well as Nathaniel Palmer’s presentation that preceded it.

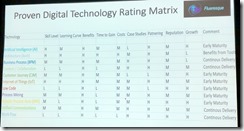

Digital business platforms – something bigger than a BPMS – provide the real pathway to digital transformation, combining a variety of technologies. The traditional BPMS products are strong in work/process management, but they also need proactive intelligence, integration, automation, IoT enablement and business functionality. He looks at technical streams and their benefits, ranging from computational technologies to consumer delivery channels. He had a draft version of a matrix that he’s working on that shows attributes for these different technologies, from skill level required to get started with the technology to the likelihood of the vendors in this category partnering with other category vendors successfully,

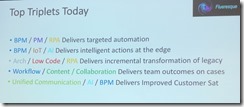

leading to a list of top productive pairs and triplets that we’re seeing in the market today: BPM and AI, for example, for processes with smart resources and actions; or architecture, low code and RPA for incremental transformation of legacy.

He finished up with how we will be leveraging the trends for marketplace collaboration between vendor products, and encouraged the vendors in the room (mostly everybody) to collaborate along the lines of his top pairs and triplets. In my opinion, this won’t necessarily be the vendors deciding to partner to offer joint solutions, but larger enterprises deciding to roll their own platforms using a combination of best-of-breed technologies that they select themselves: the vendors will need to make sure that their products can be sliced, diced and re-integrated in the way that the customers want.

Slide decks and videos of all presentations will be online within a day or two; I’ll come back and update all of the posts with links then.