David Ruiz, who founded ViewStar (an early document imaging and workflow package from the 1980s that I remember well, and which was eventually absorbed by Global360), is now with Ravenflow, which specializes in natural language processing for visual requirements definition.

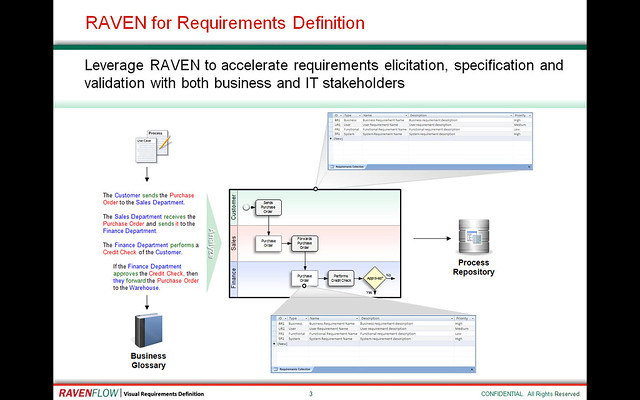

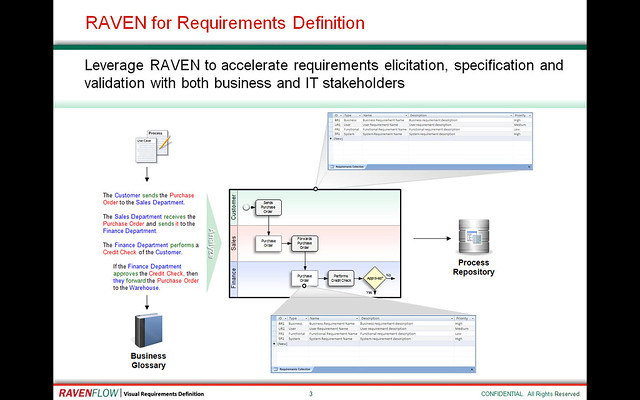

Their RAVEN engine is a natural language processing technology that they are applying in a couple of different areas, one of them being language-based business process and requirements definition. Think about business analysts writing requirements documents, and then the gap between those requirements documents and a process model; RAVEN is intended to analyze those written requirements and provide the visualization. RAVEN Cloud is a cloud-based version of that, built on Azure and Silverlight, designed for business people who need a quick process diagram.

In short, RAVEN Cloud automatically generates a process diagram from plain English text. That’s pretty cool.

The RAVEN services include:

- Natural language text processing

- Basic lexicon, which is shared by everyone using the same natural language; although they only support English at this time, swapping out this lexicon service would allow support for other languages.

- Business glossary, which is optional, and specific to an organization or industry.

- Visualization; RAVEN Cloud generates an XML file that can be pushed to a cloud visualization service such as Visio, or mapped into a standardized format such as BPMN.
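To make that last service a bit more concrete, here is a minimal sketch of what handing process steps to a visualization layer as XML might look like. I haven't seen RAVEN's actual XML, so the element and attribute names (`process`, `step`, `function`, `object`) and the `steps_to_xml` helper are purely my own assumptions for illustration.

```python
import xml.etree.ElementTree as ET


def steps_to_xml(steps):
    """Serialize (actor, function, object) steps into a hypothetical XML layout.

    The element names here are invented for illustration; they are not
    RAVEN's actual schema.
    """
    root = ET.Element("process")
    for index, (actor, function, obj) in enumerate(steps, start=1):
        # One <step> per process step, tagged with its order and its actor.
        step = ET.SubElement(root, "step", id=str(index), actor=actor)
        ET.SubElement(step, "function").text = function
        ET.SubElement(step, "object").text = obj
    return ET.tostring(root, encoding="unicode")


xml_text = steps_to_xml([
    ("Customer", "submits", "order"),
    ("Clerk", "reviews", "order"),
])
print(xml_text)
```

A downstream tool could then map each `step` to a shape and each `actor` to a swimlane, which is roughly the kind of translation that a Visio or BPMN mapping would need to do.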

I did my Master’s work in pattern recognition and image analysis, and I have a huge soft spot for recognition technology. I was not disappointed by the demo. You start out either with one of the standard text examples or by entering your own text to describe the process; you can use some basic text formatting to help clarify, such as lists, indenting and fonts. Then, you click the big red button, wait a few seconds, and voilà: you have a process map. Seriously.

Once you have the map, you can re-orient for horizontal or vertical swimlanes, and can move connection points from one side of an element to another, but you can’t edit the basic topology of the map since that would break the synchronization between the text and the diagram. You can view the process terms and functions used to analyze the text, and highlight the actors, functions and objects that were analyzed in the narrative in order to create the process map.
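For a rough feel of the idea of pulling actors, functions and objects out of a narrative and grouping them into swimlanes, here is a toy sketch. It is emphatically not how RAVEN works: it uses a single regular expression that only understands sentences of the form "The actor verbs the object", and the names `STEP_PATTERN`, `parse_steps` and `swimlanes` are my own inventions. A real natural language engine with a lexicon and glossary does far more than this.

```python
import re

# Toy pattern for sentences shaped like "The <actor> <verb> the|a|an <object>."
# Real natural language processing is far richer; this is illustration only.
STEP_PATTERN = re.compile(
    r"^(?:the\s+)?(?P<actor>\w+)\s+(?P<function>\w+)\s+"
    r"(?:(?:the|a|an)\s+)?(?P<object>.+?)\.?$",
    re.IGNORECASE,
)


def parse_steps(text):
    """Return a list of (actor, function, object) tuples, one per matching line."""
    steps = []
    for line in text.strip().splitlines():
        match = STEP_PATTERN.match(line.strip())
        if match:
            steps.append((
                match["actor"].capitalize(),
                match["function"].lower(),
                match["object"],
            ))
    return steps


def swimlanes(steps):
    """Group steps by actor, keeping each step's position in the overall flow."""
    lanes = {}
    for position, (actor, function, obj) in enumerate(steps, start=1):
        lanes.setdefault(actor, []).append((position, f"{function} {obj}"))
    return lanes


narrative = """
The customer submits an order.
The clerk reviews the order.
The customer pays the invoice.
"""

steps = parse_steps(narrative)
lanes = swimlanes(steps)
for actor, activities in lanes.items():
    print(actor, activities)
```

Because the lanes are derived entirely from the text, changing the diagram's topology by hand would leave it out of step with the narrative, which is presumably why the tool only allows layout changes.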

You can do all of this without even signing up for the service: you only need to sign up if you want to export the process map. Currently, only JPG images are supported for export – useful for process documentation, but not directly useful for process automation – but editable formats are planned for the full release in the fall and later.

Although some purists believe that you shouldn’t be describing processes, just drawing them, the reality is that many complex application development projects still involve written requirements that include text descriptions of processes, which are then drawn by the analyst in Visio (or, shudder, PowerPoint), and then redrawn in the BPMS tool by a developer. If you can’t have model-driven development, then this at least replaces the step of the business analyst drawing a process model that has to be redone in another tool (without round-tripping) anyway. For the 50% of BAs who Forrester claims can’t make the cut as process analysts, this could help them to at least provide work of value on a process project.

David and I discussed what, to me, seemed to be a natural direction for this: looking at natural language processing to generate rules as well as process models, possibly based on an initiative such as RuleSpeak. I think that there’s a huge potential to take the natural language and parse out both process and rules from the description, which would be a really good starting point for ongoing automation of the process or rules independently, or both.

The public beta launched at the Web 2.0 Expo this week, with a subscription-based service to follow in the fall; by then, they will have beefed up their exporting capabilities, with Visio, BPMN 2.0, UML 2.0/SysML and Office document formats on their roadmap. You can try the beta for free now. They’re also considering the potential for companies to host the solution privately, with that organization’s own process examples instead of the standard ones in RAVEN Cloud; I think that this could (and should) be accomplished using private data on the public cloud version, although I know how touchy some companies get about hosting their own data.