I presented a webinar on business process management and case management today, hosted by Pegasystems. It was great fun as always, with a ton of questions that we didn’t have time to answer. I captured a lot of them and will address them here; if you attended the webinar, Pega will also send out their responses as well as a link to the recording. First of all, here are my slides from the presentation:

Update: the webinar replay is here on the Pega site (registration required).

And here’s the Q&A. I’ve grouped together questions that I’ve responded to in a single answer.

What work is being done on the BPMN standard to improve support for case management?

What about modeling? What effect do you think this dynamic/structured divide has on modeling? Can you model structured processes in the same way as dynamic cases?

Is there any standard for case management like BPM?

Do you work on Case Management Process Modeling of OMG Standardisation?

Although you can use BPMN to model ad hoc processes, it doesn’t currently lend itself that well to modeling case management situations: it doesn’t include good support for the rich content required in most case management scenarios, nor for completely on-the-fly subprocess definition by a participant. BPM products that only support BPMN are going to struggle with representing cases as well as structured processes. I’m not sure what OMG is doing (if anything) to address CM within the BPMN standard, but the fact that they have issued an RFP for Case Management Process Modeling indicates that they’re going to do something. In my mind, it makes sense to consider some sort of extension to BPMN, since there are so many processes that include aspects of both structured processes and case management. I am not involved in that standards work.

Is “Case Management” just another name for Forrester’s “Human-Centric BPM”?

Not really, or at least not based on their last definition of human-centric BPM. There are many structured BPM situations that involve a large number of human-facing steps; that doesn’t make them case management since they are neither dynamic nor collaborative. Forrester does have a recent report on dynamic case management that is separate from their BPM reports.

Case Management seems limited to user self-selected processes that are pre-defined. Wouldn’t a truly dynamic case management system be guiding users based on current case context and customizing responses to the specific need, rather than simply inserting pre-defined segments?

Absolutely. Although I showed the situation where someone could add pre-defined process fragments, I didn’t mean to imply that that’s the only method. Most of the time, the user has a pre-defined set of actions (which are more granular than process fragments) from which they can select; however, it is possible in most case management systems to allow a user to define actions and subprocesses on the fly.

Is it typical for someone other than the “case worker” to initiate or create a case, e.g. by submitting some sort of request directly into the CM application, or is it more typical for the case worker to create the case based on input via some other channel such as phone, email, etc.?

To what extent do organizations integrate the case with legacy systems – claims, investment etc.

I’ve grouped these two questions together because my response to the first one is “yes, but not only someone – it could be a trigger from another system”. In almost every situation, a case is created in response to an event; if that event occurs in another system, it could be used to create the case directly. Otherwise, an event such as a phone call could be used by someone other than the case worker to create the case, such as the CSR who took a call from a customer. Depending on the case management system, there may be further integration with other business systems in order to update information or trigger other events, or it may rely on the case worker to check those systems for additional actions and information.
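To make the "a case is created in response to an event" idea concrete, here is a minimal sketch of a single intake function that treats a system-generated trigger and a CSR's phone call the same way. All names (`Event`, `NewCase`, `create_case`, the source and kind labels) are hypothetical and not taken from any particular CM product.

```python
from dataclasses import dataclass
from itertools import count

_case_ids = count(1)  # simple in-memory id generator, for illustration only


@dataclass
class Event:
    source: str                  # hypothetical channel, e.g. "claims_system" or "phone"
    kind: str                    # what happened, e.g. "claim_filed"
    payload: dict
    reported_by: str = "system"  # a person's name when a human raised the event


@dataclass
class NewCase:
    case_id: int
    kind: str
    origin: str
    created_by: str
    details: dict


def create_case(event: Event) -> NewCase:
    """Create a case from any event, whether raised by a system or a person."""
    return NewCase(
        case_id=next(_case_ids),
        kind=event.kind,
        origin=event.source,
        created_by=event.reported_by,
        details=dict(event.payload),
    )


# A trigger from another business system creates the case directly.
auto_case = create_case(Event("claims_system", "claim_filed", {"claim": "C-1"}))

# A CSR (not the case worker) creates the case after a customer call.
call_case = create_case(Event("phone", "complaint", {"customer": "A. Smith"}, "csr_jane"))
```

In this sketch the case worker never has to be the one who opens the case; who or what raised the event is simply recorded on the case.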

If you’re trying to provide tools for front line service agents who take a wide variety of requests, including routine and knowledge based, is CM the best approach, linking in to BPM to support the routine workflows, or is it better to have BPM? The main challenge is that the frontline worker could receive queries on certain query types very rarely.

Great question, to which the answer is “that depends”. That’s a design issue that would depend on the nature of the requests as well as the need to cross over between BPM and CM within those requests. For example, if the requests are independent from each other, you could spawn individual processes or cases depending on the type of request; whether the two different types are handled by one or two different systems could be completely transparent to the service agent. However, if a request could come in that needs to be combined with or related to an earlier request, then CM would likely be the way to go.

Will presenters discuss measurability for individuals participating in the case, time and actions needed to close – and sense of ownership of the customer solution?

Just because a case is dynamic doesn’t mean that it’s not measured: keep in mind that a case is based on goals, and there should be KPIs associated with those goals that can be measured. For example, it may be important that a case be completed within a specific timeframe, although not important that any given action within the case be done within a specific time as long as it doesn’t jeopardize the case milestones. The reverse may also be true: a case could have no specific deadline since it is open-ended (as in managing a chronic care patient), although there may be deadlines and milestones on actions and subprocesses within the case. As for ownership, a case usually has a specific case manager who holds ultimate responsibility, even if some of the actions are performed by other people, although that’s not always so. In situations where there is not a single case manager, the identification and monitoring of KPIs becomes more important, with alerts being raised to someone who can take responsibility if required in order to achieve the case result.
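As a rough illustration of measuring a dynamic case, the sketch below checks an optional case-level deadline and per-milestone due dates, and picks someone to alert when there is no single case manager. The class and field names (`MeasuredCase`, `Milestone`, `escalate_to`) are assumptions made for this example, not features of any specific product.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional


@dataclass
class Milestone:
    name: str
    due: datetime
    done_at: Optional[datetime] = None  # None until the milestone is reached


@dataclass
class MeasuredCase:
    opened: datetime
    deadline: Optional[datetime] = None  # None for open-ended cases
    milestones: list = field(default_factory=list)
    case_manager: Optional[str] = None   # None when nobody owns the case

    def alerts(self, now: datetime) -> list:
        """List missed targets at the case level and the milestone level."""
        found = []
        if self.deadline is not None and now > self.deadline:
            found.append("case deadline missed")
        for m in self.milestones:
            if m.done_at is None and now > m.due:
                found.append(f"milestone overdue: {m.name}")
        return found

    def escalate_to(self, fallback: str) -> str:
        # Alerts go to the case manager if there is one, otherwise to a fallback.
        return self.case_manager or fallback


# An open-ended chronic care case: no case deadline, but a milestone inside it.
care = MeasuredCase(
    opened=datetime(2024, 1, 1),
    milestones=[Milestone("quarterly review", due=datetime(2024, 1, 5))],
)
care_alerts = care.alerts(now=datetime(2024, 1, 10))
print(care_alerts)                     # ['milestone overdue: quarterly review']
print(care.escalate_to("team lead"))   # team lead
```

The same structure covers the opposite situation: set `deadline` on the case and leave `milestones` empty when only the overall completion time matters.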

BPM fits easily in a Quality Management System – Plan Do Check Act. How would Case Management fit into a QM system and repository?

I’m not a QM expert, but much of what I see of how QM is applied is through the development and application of fairly specific procedures. In the presentation, I spoke about structured subprocesses that could be invoked from a case in order to complete specific actions; these would obviously fit well within a QM framework. A structured PDCA model isn’t going to fit for most case management, although it could be applied at a higher level, since there is often some design of a framework or template for cases, and there are KPIs against which you measure the success of the case.

If you had a process that was this complicated would you not rationalise it using something like six sigma?

This was related to the scenario that Emily Burns from Pegasystems presented, but I’ll address the more general issue of complexity and measurement, in part based on my previous response to the QM question. Six Sigma in particular is based on statistical measurement of processes, with a goal of reducing defects in the process. Although you could apply some of the Six Sigma measurement principles, in general, since you don’t have predefined processes, it’s difficult to make a lot of statistical calculations about those processes. Case management isn’t a replacement for process analysis: if you have a highly complex but structured process, then analyze it and implement it using more standard structured BPM techniques. Case management is for when, regardless of the amount of analysis that you do, it’s just not possible to model every possible pathway through the business process. That being said, there are situations where using case management for a while does end up producing some emergent processes: processes that weren’t understood to be predictable and structured until they were done enough times in a case management framework to see the patterns emerge.

Since case is so dynamic, what is the best practice when designing a system to handle CM?

How do you decide the granularity of a case?

I’ve grouped these two together since they’re both involved with case design. As I mentioned in my previous response, CM is not a replacement for analysis: you still need to understand your business processes before you start designing your CM system. You will need to design a case framework that doesn’t restrict what the case managers can do, while collecting the information that is required in order to document and act upon the case. Things to design into your case will include an overall data model (which will determine the ability of people to find and monitor a specific case), any required actions or subprocesses that need to be executed at some point, and content that needs to be collected before the case can be completed. Other things to include will be case context (the information from other systems that may be used by the case manager in order to complete their work) as well as events between the case and other systems, both inbound and outbound. You will also want to set KPIs, milestones and related alerts or escalations on specific actions or the entire case. Emily will likely respond with more specifics on how they set out cases and subcases within Pega, but I suspect that you might find that your definition of case may shift once you start doing case management for a while. I had a chance to speak with the person from BAA who presented the BAA case study (the one that Emily showed at the end), and he said that they were in the process of rolling up the previous separate cases that they had for things such as passenger handling and luggage handling into a single case for each flight, with those as subcases.
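The design elements listed above can be sketched as a small data structure. This is only an illustration under my own assumptions: the `Case` class, its field names and the flight number are hypothetical, and it does not represent how Pega or BAA actually model cases.

```python
from dataclasses import dataclass, field


@dataclass
class Case:
    """A hypothetical case record; field names are illustrative only."""
    case_type: str
    data: dict = field(default_factory=dict)              # searchable case data
    required_actions: set = field(default_factory=set)    # must happen at some point
    completed_actions: set = field(default_factory=set)   # includes ad hoc actions
    required_content: set = field(default_factory=set)    # needed before completion
    collected_content: set = field(default_factory=set)
    subcases: list = field(default_factory=list)

    def complete_action(self, name: str) -> None:
        # Any action is accepted, not only the required ones: the framework
        # records what was done rather than restricting the case manager.
        self.completed_actions.add(name)

    def can_close(self) -> bool:
        # Closable only when required actions and content are present
        # and every subcase can itself be closed.
        return (
            self.required_actions <= self.completed_actions
            and self.required_content <= self.collected_content
            and all(sub.can_close() for sub in self.subcases)
        )


# One case per flight, with passenger and luggage handling as subcases.
luggage = Case("luggage_handling", required_actions={"unload"})
passengers = Case("passenger_handling", required_actions={"disembark"})
flight = Case("flight", data={"flight_no": "XX123"}, subcases=[passengers, luggage])

passengers.complete_action("disembark")
luggage.complete_action("rebook_lost_bag")  # ad hoc action, still recorded
print(flight.can_close())  # False: the luggage has not been unloaded yet
luggage.complete_action("unload")
print(flight.can_close())  # True
```

Changing the granularity of a case, as in the roll-up to one case per flight, then amounts to moving previously separate cases into the `subcases` list of a parent.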

What I understand from a case is that they are basically business scenarios. Can we assume that?

If you mean “business scenario” in the enterprise architecture sense, then a case and business scenario could be considered as equivalent in some situations, although business scenarios usually end up with some sort of structured process model defined. There are many common aspects, however, and I think that we can learn much about defining CM standards by looking at what has been done in EA.

To understand cases or to handle case management solutions, some extra tools are needed that handle things like case history, status etc., so, what generic list of tools do you think are needed from a holistic case management tool?

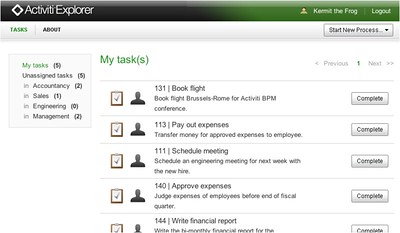

The list of tools and functionality is still emerging, and will continue to evolve over the next while, but Forrester’s report on dynamic case management has a useful diagram showing what to expect in a case management platform:

They also list the capabilities that would translate directly from BPMS in that part of the framework, such as human interaction, integration and analytics.

With a Case Management structure, is work typically or ever completed in the Case Management tool itself? If not, does the Case Management tool depend on the users updating the case periodically to indicate what stage they are in and how far they are toward completion, etc.?

Work is typically done both within the CM structure and in other systems. Since part of the expected functionality is integration between the CM system and other systems, there may be some degree of automated exchange of events and information between them, or users may be required to update the case directly with their progress in non-integrated systems. Since the case file serves as a permanent record of the case, it is often considered the system of record rather than transient information, as a typical process instance might be. That means that updating the case isn’t just a matter of documenting what was done in other systems: the case could be the only place in which that information is captured.

If you were on the call and have other questions, feel free to add them in the comments and I’ll respond.