We’re on the last of the four themes for TUCON, with Matt Quinn kicking off the session on the consumerization of enterprise IT. It’s a telling sign that many vendors now refer to their products as being like “Facebook/Twitter/iTunes/<insert popular consumer software here> for the enterprise” – enterprise app vendors definitely have consumer app envy, and TIBCO is no exception. As we saw in the earlier keynote session about tibbr, TIBCO is offering a lot of functionality that mimics (and extends) successful consumer software, and they’re providing Silver Mobile Server as a way to put all of the enterprise functionality that you build using TIBCO products out onto mobile devices. We saw a demo of an app built using Silver Mobile Server for submitting and managing auto insurance claims, and the platform looks pretty capable, both in terms of using the native device capabilities and linking directly to back-end processes.

They showed some new enhancements to Silver Fabric for private cloud provisioning and management, and discussed their public cloud applications (tibbr, Spotfire, Loyalty Lab) and public Silver Marketplace functionality. Today, they announced Silver Integrator, running on Silver Marketplace, providing enterprise-class integration services on the public cloud. We saw a brief demo of Silver Integrator: it launches a cloud version of the TIBCO Designer with some additional palettes for cloud connectors such as Facebook, Salesforce and REST services.

Being able to extend enterprise applications onto mobile devices and into the cloud is critical for the consumerization of IT, and TIBCO (as well as other vendors) is offering those capabilities. The problem of adoption, however, is usually not about product capabilities; it’s about organizational culture: there is a lot of resistance to this trend, not in the user community, but within IT. I saw a graphic and blog post by Dion Hinchcliffe of Dachis Group today about the major shifts happening in IT, and one thing that he wrote was especially impactful:

Never in my two decades of experience in the IT world have I seen such a disparity between where the world is heading as a whole and the technology approach that many companies are using to run their businesses.

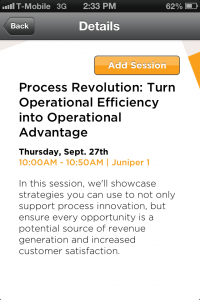

Cloud, mobile, social, consumerization and big data: we’re all doing it in the consumer space, but IT departments are continuing to drag their feet on bringing these into the enterprise. The organizations that fail to embrace them are going to fall further behind in their ability to serve customers effectively and to innovate, and will suffer for it.

Quinn wrapped up with a list of their product announcements, including two new policy-driven governance products as part of the ActiveMatrix suite: AMX Service Gateway for providing enterprise services outside the firewall, and AMX Policy Director for managing security, auditing and logging rules for services. He covered their AMX BPM 2.0 release announcement briefly, with new functionality for work assignment and scheduling, case management, and page flow debugging, plus some new capabilities in Nimbus Control and FormVine.

That’s it for the keynotes, since the rest of today and all of tomorrow are breakout sessions where we can dig into the details of the products and how they’re being used by customers. I’ll be heading to the BPM product update this afternoon by Roger King and Justin Brunt, and will probably also drop in on the tibbr product strategy session to see more details of what we heard this morning.

I’ve been asked to step in for a last-minute cancellation and will be [presenting tomorrow morning at 10am on the process revolution](https://column2.com/2012/09/tibco-tucon2012-you-say-you-want-a-process-revolution/), which is about moving beyond implementing BPM just for cost savings and looking at new business process metrics such as maximizing customer satisfaction. If you’re here at TUCON, come on out to the session tomorrow morning.