Catching up on a few webinars that I missed live, I just reviewed the replay of ebizQ’s Integration Trends 2006. There’s a nice summary slide up front by David Kelly, an ebizQ analyst. He covers the current focus of most businesses (reducing costs, increasing flexibility, becoming process-driven) and of most IT departments (composite applications, modernizing legacy systems, moving to SOA and event-driven architecture). That’s certainly true for all of my customers.

The webinar was sponsored by PolarLake, makers of an ESB product. It turns out that their CEO, Ronan Bradley, has a blog with some interesting material, such as his recent post that “exposes the myth” of run-time discovery of web services (he quotes and comments on a post on the same subject by Brenda Michelson). Alas, I’m unlikely to read his blog much, since he chooses not to publish full feeds and I find it too much of a hassle to click through to every blog on my list of daily reads.

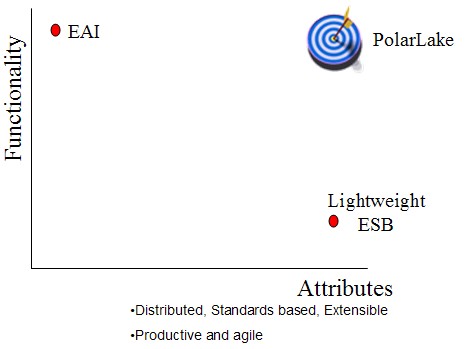

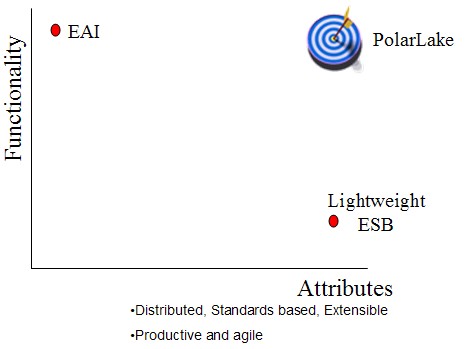

Anyway, back in the webinar, Ronan Bradley made a big point of saying that ESB is not EAI. When David Kelly later asked how he made that distinction, it seemed to rest on attributes such as being “distributed, standards-based, extensible” and providing “productivity and agility”. I don’t think the lines between ESB and EAI are that clearly drawn: it’s more of a spectrum of capabilities than the black-and-white picture that Ronan puts forward. He depicts EAI as having functionality without these attributes, “lightweight ESB” as having the attributes but little functionality, and PolarLake as having both the functionality and the attributes.

Of course, what vendor doesn’t find a way to publish a graph that puts them in the upper-right quadrant? Gartner probably just calls it all integration-focused BPM anyway.

Regardless of any distinction between EAI and ESB, he does make an interesting point: orchestration isn’t as simple as it’s often portrayed. It requires “mediation” around the orchestration that may be many times larger and more complex than the orchestration itself. This includes complex data manipulation and translation beyond simple XSLT transformation, interaction with existing infrastructure and applications, and complex, recursive data transformation, enrichment and management.