Appian was recently doing a round of road-show conferences, and when they landed in my backyard, I decided to stop in for the day and see what was new. I missed Appian World this year and was looking forward to a bit of a product update as well as some of the local customer stories.

The day started with Edward Hughes, SVP of sales, giving us a high-level overview of Appian and their BPM platform-as-a-service and case management products (for the non-customers in the audience), as well as their shift to becoming more of a broad application development platform rather than just a BPMS. I’ve been seeing this trend with many BPM vendors over the past few years, and Appian has been repositioning this way for a year or two already. Using Appian as an application development platform allows applications to be developed independently of the deployment platform, both on the server side (e.g., develop on the cloud version, deploy on premise) and for client interfaces on desktops or mobile devices. The messaging is that you can use their platform to create customer service applications “beyond CRM” to handle the entire customer journey, with a unified interface plus a consolidated view onto enterprise data using their Records function. He also talked about the Appian App Market, an expanded version of their Appian Forum that contains add-in components and complete applications from Appian and third parties.

Since it was a small room, the local customers introduced themselves and talked about their Appian experience and applications: 407 ETR with 10 apps integrated with their customer portals so that online actions (e.g., acquiring a new transponder) become Appian processes assigned to the 125 internal users; Manulife, the first Appian cloud customer back in 2008, now migrating their “legacy” Appian apps to the Tempo UI and serving 900 users for work/time tracking and records management in Marketing; and IESO with apps to register and manage information about energy companies participating in electricity markets. We also heard from some of the partners attending: TCS, Princeton Blue, and boutique contender Bits In Glass with 15+ Appian-trained people in Canada and the US. Bits In Glass used to do mostly code-level (Java) bespoke development, and have cut their effort and timelines to one-third of that by using Appian’s model-driven development.

Next up was Michael Beckley, describing his new role as Chief Customer Officer (in addition to CTO) and giving us a product update on the 7.11 quarterly release. Appian sees corporate IT budgets as 20% innovation and 80% maintenance, and wants to flip that ratio so that maintenance is much less expensive than the original build, freeing up time and energy for innovation. Most large enterprises aren’t going to get rid of custom applications, but they do need to make them faster to build and maintain, while enforcing strict security and providing a user-friendly interface for internal and external users. In theory, an integrated application development platform such as Appian provides all the pieces: user interface, reports, rules, collaboration, process, on-premise, cloud, mobile, social, data, content, security, identity, and integration; in practice, most organizations end up doing some development outside the model-driven environment, although the platform can definitely improve their custom development efforts. Appian’s focus, as with many of the other BPMS vendors pivoting to become app dev vendors, is on providing a platform to build process-centric applications that get things done via automation, with people injected into the process where required.

Beckley gave us a hint of their growth strategy: they tend to build rather than buy, both to keep their technology pure and because growth by acquisition inevitably requires a large (and underestimated) effort to integrate the new technology.

Here’s a quick list of the Appian 7.11 updates (some of these likely came before 7.11, but I haven’t had an update for a while):

- Three UI styles for Appian apps: the Tempo social interface, the Sites limited-function workspace/worklist for heads-down workers, and Embedded SAIL to embed Appian functionality within an existing portal for internal or external users. Sites have Action Forms for fit-for-purpose apps when a social feed UI isn’t appropriate, and Embedded SAIL has Action Forms for customer-facing apps within a third-party web portal. These latter two are critical for real-world enterprise applications: although I like the Tempo interface, many of my enterprise clients need a different sort of view for heads-down workers, which can be provided by Sites or using Embedded SAIL.

- A number of improvements to the Tempo news feed and UI, including the Tempo Kudos view to promote collaboration and provide awareness of accomplishments, and dynamically updating filters to better link and manage record data and underlying data sources.

- Improvements to SAIL, including positioning it as a device-independent UI that provides a shared model experience (rather than an HTML5 gateway into an existing app as seen in some other mobile-enablement technology) that is natively rendered on each device. The rendering engine can be updated independently of the applications, making it easier to adapt to new OS versions and devices. Appian uses SAIL to build their own components and apps that become part of the product. From a developer functionality standpoint, SAIL has added placeholder text and tooltips on forms, auto first field focus to reduce clicks and improve efficiency, additional image sizes that are auto-scaled to the device, initially-collapsed form sections, “submit” links that can be placed on a graphic element instead of standard buttons, links in milestones and pickers, grid enhancements, and continuing speed improvements. There’s also a new barcode component, although it is iOS-only and requires a Verifone device for capture.

- Mobile offline actions use native encrypted data containers rather than HTML5 storage (some of this is iOS-only, although Android is planned for later this quarter), with the developer deciding which actions and data are available offline. Changes to the definition of a form while a user is offline will prompt the user to review and resubmit the form with the new/updated form field definitions, so application changes can continue even if there are active offline users. This does not (yet) allow existing records to be locked for offline updates, although tasks can be locked to a user before going offline.

- For designers, the developer portal is being migrated to SAIL and enhanced with build processes; there’s a UI designer navigation tree to allow view/select/edit within a hierarchical tree view of an action form; the expression rule designer (“for those of you who are still writing expressions”, namely power developers) auto-suggests rule inputs and there is some level of expression rule testing; a process report designer can be used to create performance reports; impact analysis reports show where rules are invoked and other object relationships; bulk security updates can be made across objects.

- For administrators, a big new thing is LDAP/SAML authentication with multiple LDAP servers and complex configurations.

They have frequent product update webinars, free introductory courses and tips & tricks sessions online; in fact, there is a product update webinar tomorrow if you want to hear more about what I’ve listed above.

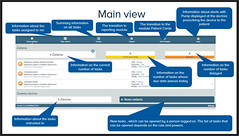

We heard from Rew Dickinson, a solutions consultant, on what makes a great app, complete with a live demo to show us how it’s done. There were a lot of best practices here that I won’t repeat (better for you to check out one of their webinars), but here are a few key pointers:

- Design applications to be omni-channel and easily adaptable.

- Use Records to organize and model corporate data, regardless of source, for use in an application; bidirectional links between Records and process instances allow for a full view whether you’re coming from the process or data side of things.

- Use Sites for fit-for-purpose applications, e.g., a worklist for heads-down task execution, as an alternative to the full Tempo environment. Effectively, this is a report that can be sorted and filtered, with links to tasks that take the user to the task form; it can include work management analytics for a manager/dispatcher user to monitor and reallocate task assignments. This made me think that Appian has just reinvented their per-application portal mode with Sites, albeit with better underlying technology, but that’s a discussion for another day.

- Use Embedded SAIL for customer-facing portal environments, e.g., to create a service request from a customer order page.

Michael Beckley came back to talk to us about Appian Cloud, that is, their public cloud offering. It uses Amazon AWS/EC2/S3 in a single-tenant architecture, which allows each environment to be upgraded independently; this is more of a managed hosting model. The web tier is shared and handled by Appian, who also manages servers, load balancing, high availability and upgrades. There can be a VPN tunnel to on-premise data, and in fact the AWS instance does not have to be available on the public internet, but can be restricted to access only through the VPN from a corporate location. This configuration provides the elasticity and availability of the Amazon cloud, but allows private data to remain on premise, which goes a long way toward resolving geographic data location issues. They’ve obviously been working on the optics of US-owned data centers by listing their privacy chops, but it would have been even more reassuring to see a mention of any Canadian standards such as PIPEDA for this purely Canadian audience. There are tiers for development, medium, large and extra-large deployments, with a redeployment required to move between tiers (so not that elastic…), but it supposedly only takes a few minutes if planned. Uptime this year is mostly five 9s (99.999%), with customer credits for missed uptime SLAs. You can also self-host Appian in other environments, e.g., Azure, although the Appian Cloud SaaS offering is currently Amazon only.
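Five 9s is easier to judge when you translate it into a downtime budget. Here’s a small Python sketch of the generic SLA arithmetic; it assumes a 365-day year and availability measured over the whole year, which may not match how Appian actually defines or measures its uptime SLA.

```python
# Generic SLA arithmetic: yearly downtime allowed by an availability target
# expressed as a "number of nines". Assumes a 365-day year (no leap years);
# this is NOT Appian's SLA definition, just the standard calculation.

MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes


def allowed_downtime_minutes(nines: int) -> float:
    """Return minutes of downtime per year permitted by `nines` 9s of availability."""
    unavailability = 10 ** (-nines)  # e.g. 5 nines -> 0.00001 of the year may be down
    return MINUTES_PER_YEAR * unavailability


for n in range(3, 6):
    print(f"{n} nines: {allowed_downtime_minutes(n):.2f} minutes/year")
```

At five 9s this works out to about 5.26 minutes of downtime a year, versus roughly 52.56 minutes at four 9s, so “mostly” five 9s is a meaningful distinction.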

We finished up with Mike Cichy, an Appian consultant, discussing their center of excellence offerings and how customers can plug into the vast wealth of information, from checklists to migration guides to training, in order to embody best practices. In addition to these practices, there are a number of tools available, such as the Appian Health Check and Deployment Automation, with the overall goal of helping achieve a large improvement in developer speed and quality within customer/partner organizations.

Altogether an informative day, and a great catch-up with some old friends.