The second day of SAP’s SAPPHIRENOW conference started with Bernd Leukert discussing how some customers’ employees worry about being disintermediated by the digital enterprise, and how the digital economy can instead be used to accentuate the promise of your original business, making your customers happier without spending the same amount of time (and, hopefully, money) on enterprise applications. It’s not just about changing technologies but about changing business models and leveraging business networks to address the changing world of business. All true, but I still see a lot of resistance to the digital enterprise in large organizations, with both mid-level managers and front-line workers feeling threatened by new technologies and business models until they can see how these will benefit them.

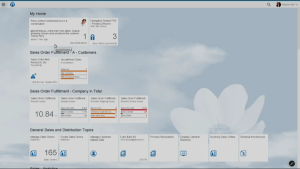

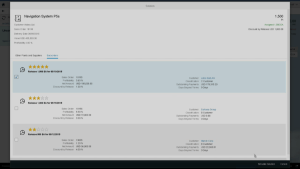

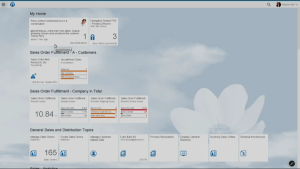

Although Leukert is on the stage, the real star of the show is S/4HANA: the new generation of their Business Suite ERP solutions, built natively on the in-memory HANA data and transaction engine for faster processing, with a simplified data model for easier analytics and faster reconciliation, and a new user interface based on their Fiori user experience platform. With the real-time analytical capabilities of HANA, covering non-SAP data as well as S/4HANA finance and logistics data, they are moving from being just a system of record to a full decision support system. We saw a demo of a manufacturing scenario that walked through a large order process, combining financial and logistics data in real time to recommend how to deal with a shortage in fulfilling an order. Potential solutions (in this case, moving stock allocated to one customer over to another, higher-priority customer) are presented with a predicted financial score, allowing the user to select one of the options. A nice demo of analytics and financial predictions directly integrated with order processing.
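
To make the idea of scoring shortage options a bit more concrete, here is a minimal Python sketch. It is not SAP’s logic: the `ReallocationOption` class, its fields, the made-up figures, and the naive net-impact score (revenue secured, minus revenue put at risk, minus extra cost) are all hypothetical stand-ins for whatever predictive model S/4HANA actually uses. The point is only that each candidate resolution gets a comparable financial number, and the user picks from a ranked list.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ReallocationOption:
    """Hypothetical candidate resolution for an order shortfall."""
    description: str
    revenue_secured: float  # revenue protected on the order that is short
    revenue_at_risk: float  # revenue put at risk on the order that gives up stock
    extra_cost: float       # e.g. expediting or transfer costs

    @property
    def financial_score(self) -> float:
        # Naive net-impact score; a stand-in for a real predictive model.
        return self.revenue_secured - self.revenue_at_risk - self.extra_cost


def rank_options(options: list[ReallocationOption]) -> list[ReallocationOption]:
    """Return the options best-first, ready to present for the user to choose."""
    return sorted(options, key=lambda o: o.financial_score, reverse=True)


if __name__ == "__main__":
    # Illustrative, made-up numbers only.
    candidates = [
        ReallocationOption("Move stock from a lower-priority customer", 50_000, 12_000, 1_500),
        ReallocationOption("Expedite a production run", 50_000, 0, 30_000),
        ReallocationOption("Ship partially now, the rest next week", 30_000, 0, 500),
    ]
    for option in rank_options(candidates):
        print(f"{option.financial_score:>10,.0f}  {option.description}")
```

Keeping the score as a single number is what makes the options directly comparable in a UI; a real system would presumably replace the arithmetic with a model fed by live finance and logistics data.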

The new offering is modular, with additional plug-ins for their other products such as Concur and SuccessFactors to enhance the suite capabilities, and it runs both in the cloud and on-premise. There are lots of reasons to transition, but this new functionality requires significant work to adopt the new programming model: both on SAP’s side in building the new platform, and on the customers’ side in refactoring their applications to take advantage of the new features. Widespread adoption by customers with highly customized solutions (isn’t that all of them?) will likely take several months, if not years, in spite of the obvious advantages. As we have seen with other vendors who completely re-architect their product, new customers are generally very happy starting on the new platform, but existing customers can take years to move even when there is a certified migration path. However, since the launch in February, 400 customers have committed to S/4HANA, and SAP now supports all 25 industries that it serves.

As we saw last year, SAP is pushing existing customers to first migrate to HANA as the underlying database in their existing systems (typically displacing Oracle), which is a non-trivial but straightforward operation that is likely to improve performance; then, to reconsider whether the customizations in their current systems are handled out of the box by S/4HANA or can be easily re-implemented based on the simpler data model and richer functional capabilities. Sounds good, and I imagine that they will get a reasonable share of their existing customers to take the first step and migrate to HANA, but the second step starts to look more like a new implementation than a simple migration, which will scare off a lot of customers. Leukert invited a representative from their customer Asian Paints to the stage to talk about their migration: they have moved to HANA and the simplified finance core functionality, and are still working on implementing simplified logistics and other modules, with a vision to soon be completely on S/4HANA. A good success story, but indicative of the length of time and amount of work required to migrate. For them, it was definitely worth the trip, since they have been able to re-imagine their business model to reach new markets through a better understanding of their customers and their own business data.

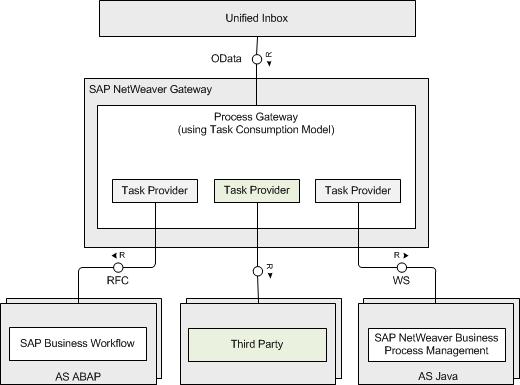

He moved on to talk about the HANA Cloud Platform (HCP), a general-purpose application development platform that can be used to build applications unrelated to SAP applications, or to build extensions to SAP functionality. He mentioned an E&Y application built on HCP for fraud detection that is directly integrated with core SAP solutions, which is just one of 1,000 or more third-party applications available on the HCP marketplace. HCP provides structured and unstructured data models, geospatial, predictive, Fiori UX platform as a service, mobile support, analytics portfolio, and integration layers that provide direct connection to your business both on the device side through IoT events and into the operational business systems. With the big IoT push that we saw in the panel yesterday, Siemens has selected HCP as their cloud platform for IoT: the Siemens Cloud for Industry. Peter Weckesser of Siemens joined Leukert on stage to talk more about this newly-launched platform, and how it can be added to their customer installations as a monitoring (not control) layer: remote devices, such as sensors on manufacturing equipment, push their event streams to the Siemens cloud (based on HCP) in public, hybrid or on-premise configurations; analytics can then be applied for predictive maintenance scheduling as well as aggregate operational optimization.

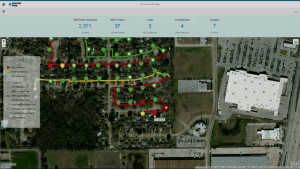

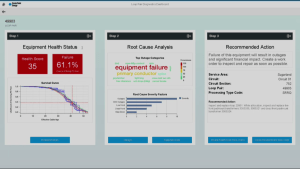

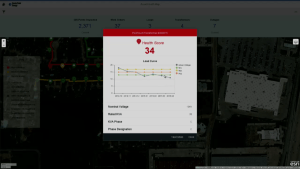

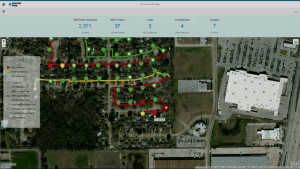

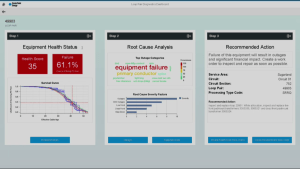

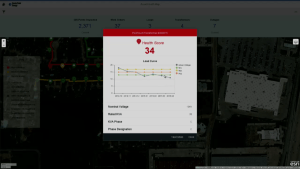

We saw a demo based on the CenterPoint IoT example at the panel yesterday, showing monitoring and maintenance of energy distribution networks: tracking the health of transformers, grid storage and other devices and identifying equipment failures, sometimes before they even happen. CenterPoint already has 100,000 sensors out in the field, and since this is integrated with S/4HANA, this is not just monitoring: an operator can trigger a work order directly from the predictive equipment maintenance analytics dashboard.
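
As a rough illustration of the flow from sensor events to a maintenance action, here is a minimal Python sketch. It assumes a stream of timestamped readings from one device (say, transformer temperatures sampled hourly), fits a least-squares trend over a sliding window, and emits a work order if the trend is projected to cross a threshold within a given horizon. `TrendMonitor` and `WorkOrder` are hypothetical names, the linear trend is a deliberately naive stand-in for real predictive models, and nothing here reflects the actual HCP, Siemens Cloud for Industry, or S/4HANA APIs.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass


@dataclass
class WorkOrder:
    """Hypothetical work-order record; a real system would create this in the ERP."""
    device_id: str
    reason: str


class TrendMonitor:
    """Hypothetical monitor for one sensor stream.

    Fits a least-squares slope over a sliding window of readings, a
    deliberately naive stand-in for a real predictive-maintenance model.
    """

    def __init__(self, device_id: str, threshold: float,
                 window: int = 6, horizon: float = 24.0) -> None:
        self.device_id = device_id
        self.threshold = threshold  # value treated as failure, e.g. max safe temperature
        self.horizon = horizon      # how far ahead to warn, in the readings' time unit
        self.readings = deque(maxlen=window)  # most recent (time, value) pairs

    def add_reading(self, t: float, value: float) -> WorkOrder | None:
        """Record a reading; return a WorkOrder if failure is current or predicted."""
        self.readings.append((t, value))
        if value >= self.threshold:
            return WorkOrder(self.device_id, f"threshold exceeded: {value}")
        if len(self.readings) < self.readings.maxlen:
            return None  # not enough history to estimate a trend yet
        xs = [x for x, _ in self.readings]
        ys = [y for _, y in self.readings]
        mean_x = sum(xs) / len(xs)
        mean_y = sum(ys) / len(ys)
        denom = sum((x - mean_x) ** 2 for x in xs)
        if denom == 0:
            return None  # all readings share one timestamp; slope undefined
        slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / denom
        if slope <= 0:
            return None  # flat or improving: no predicted failure
        time_to_breach = (self.threshold - value) / slope
        if time_to_breach <= self.horizon:
            return WorkOrder(self.device_id,
                             f"predicted breach in {time_to_breach:.1f} time units")
        return None


if __name__ == "__main__":
    monitor = TrendMonitor("transformer-42", threshold=90.0, window=3)
    for hour, temp in [(0, 60.0), (1, 62.0), (2, 64.0)]:
        order = monitor.add_reading(hour, temp)
        if order:
            print(order)
```

Running one monitor per sensor stream keeps the check local to each device; the step the CenterPoint demo emphasized, handing the resulting work order straight to the operational system, is what turns monitoring into action.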

Leukert touched on the HANA roadmap, with the addition of Hadoop and Spark Cluster Manager to handle virtually unlimited volumes of data, then welcomed Walmart CIO Karenann Terrell to discuss what it is like to handle a really large HANA implementation. Walmart serves 250 million customers per week through 11,000 locations with 2.2 million employees, so their daily operations alone generate a lot of data: literally trillions of financial transactions. Because technology is so core to managing this well, she pointed out that Walmart is creating a technology company in the middle of the world’s largest retail company, which allows them to stay focused on the customer experience while reducing costs. Their supply chain is extensive, since they are directly plugged into many of their suppliers, and innovating along that supply chain has driven them to partner with SAP more closely than most other customers. HANA allows them to have 5,000 people simultaneously querying data stores of half a billion records with sub-second response time to provide a real-time view of their supply chain, making them a true data-driven retailer and shooting them to the top of yesterday’s HANA Innovation Awards. She finished by saying that seeing S/4HANA implemented at Walmart in her lifetime is on her bucket list, which got a good laugh from the audience but highlighted the fact that this is not a trivial transition for most companies.

Leukert finished with an invitation (or maybe it was a challenge) to use S/4HANA and HCP to reinvent your business: “clean your basement” to remove unnecessary customization in your current SAP solutions, or convert it to run on HCP or the S/4HANA extension platform; change your business model to become more data-driven; and leverage business networks to expand the edges of your value chain. Thrive, don’t just survive.

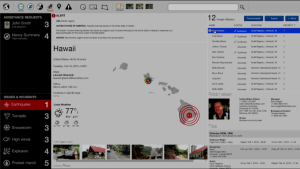

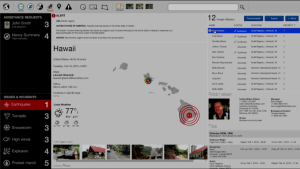

Steve Singh, CEO of Concur (acquired by SAP last December), then took over to look at reinventing the employee travel experience, from booking through trip logistics to expense reporting. For companies with a large number of traveling employees, managing travel can be a serious headache from both a logistics and a financial standpoint. Concur addresses this by creating a business network (or a network of networks) that directly integrates with suppliers, such as airlines and car rental companies, for booking and direct invoice capture, plus easy functions for entering travel expenses that are not captured directly from the supplier. I heard comments yesterday that SAP already has travel and expense management, and although Concur’s functionality in that area is likely a bit better, the networks that they bring are the real prize here. The networks, for example, allow for managing the extraction of an employee who finds themselves in a disaster or other dangerous travel situation, which becomes part of a broader human resources risk management strategy.

At the press Q&A later, Leukert fielded questions about how they have simplified the complete core of their ERP solution in terms of data model and functionality but still have work to do for some industry modules: although all 25 industries are supported as of now in the on-premise version, they need to do a bit of tinkering under the hood and do additional migration for the cloud version. They’re also still working on the cloud version of everything, and are recommending the HCM and CRM standalone products if the older Business Suite versions don’t meet requirements. In other words, it’s not done yet, although core portions are fully functional. Singh talked about the value of business networks such as Ariba in changing business models, and sees that products such as Concur using HCP and the SAP business networks will help drive broader adoption.

There was a question on the ROI for migration to S/4HANA: it’s supposed to run 1,800 times faster than previous versions, but customers may not be seeing much (if anything) in the way of savings, which opens things up to competitive displacement. I heard this same sentiment from some customers last night at the HANA Innovation Awards reception. Since there is little or no reduction in license and deployment costs, SAP needs to make the case based on the additional capabilities that HANA enables, such as real-time analytics and predictions that allow companies to run their businesses differently, and on a longer-term reduction in IT complexity and maintenance costs. Since a lot of more traditional companies don’t yet see the need to change their business models, this can be a hard sell, but eventually most companies will need to come around to the need for real-time insights and actions.

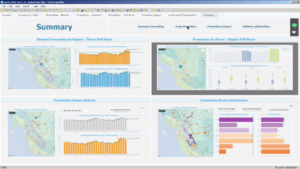

Brad Hopper, VP of strategy for analytics, gave a demo of Spotfire visual analytics while wearing a long blond wig (attempting to make a point about the importance of beauty, I think). He built an analytics dashboard while he talked, showing how easy it is to create visual analytics and trigger smart actions. He went on to talk about data preparation and cleansing, which can often take as much as 50% of an analyst’s time, and demonstrated importing a CSV file and using quick visualizations to expose and correct potential problems in the underlying data. As always, the Spotfire demos are very impressive; I don’t follow Spotfire closely enough to know what’s new, but it all looks pretty slick.
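
Spotfire does this interactively with visualizations, but the checks underneath are simple. As a tool-agnostic sketch (not Spotfire’s implementation), the Python below profiles a CSV for a few common problems: empty cells, stray non-numeric values in mostly numeric columns, and exact duplicate rows. The function names and the “mostly numeric” rule (more than half of the non-empty values parse as numbers) are assumptions made for illustration.

```python
from __future__ import annotations

import csv
import io
from collections import Counter


def _is_number(value: str) -> bool:
    """True if the string parses as a float."""
    try:
        float(value)
        return True
    except ValueError:
        return False


def profile_csv(text: str) -> dict[str, dict[str, int]]:
    """Count empty cells and stray non-numeric values per column."""
    rows = list(csv.DictReader(io.StringIO(text)))
    # DictReader stores surplus fields under the key None; skip them.
    columns = [c for c in rows[0] if c is not None] if rows else []
    report: dict[str, dict[str, int]] = {}
    for column in columns:
        values = [(row.get(column) or "").strip() for row in rows]
        present = [v for v in values if v]
        numeric = sum(1 for v in present if _is_number(v))
        # Assumption: a column is "mostly numeric" if over half its values parse as numbers.
        mostly_numeric = numeric > len(present) / 2
        report[column] = {
            "missing": len(values) - len(present),
            "non_numeric": len(present) - numeric if mostly_numeric else 0,
        }
    return report


def duplicate_rows(text: str) -> int:
    """Number of non-blank data rows that exactly repeat an earlier row."""
    data = [row for row in list(csv.reader(io.StringIO(text)))[1:] if row]  # skip header
    counts = Counter(tuple(row) for row in data)
    return sum(n - 1 for n in counts.values())
```

For example, a `units` column containing `10`, an empty cell, `eight` and `10` would be reported as `{'missing': 1, 'non_numeric': 1}`; a visual tool surfaces the same kinds of issues as gaps and outliers in a quick chart.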

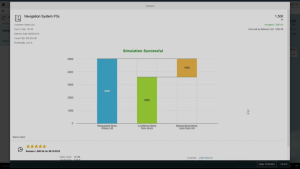

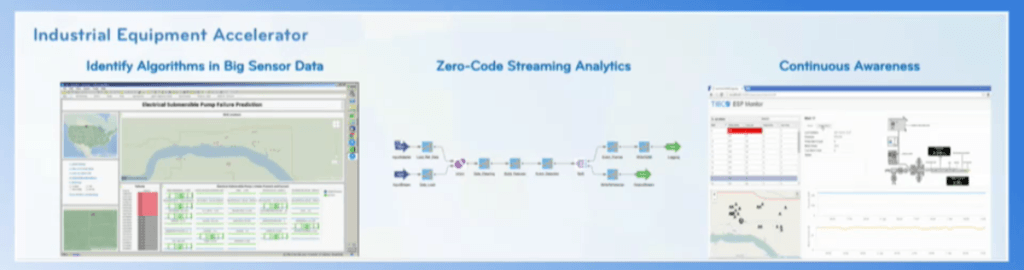

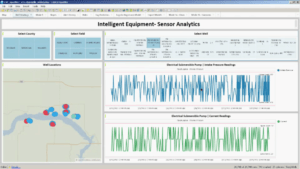

Michael O’Connell, TIBCO’s chief analytics officer, came up to demonstrate a set of analytics applications for a fictitious coffee company: sales figures and drilldowns, with what-if predictions for planning promotions; and supply chain management and smart routing of product deliveries.

The next demo was an oil industry application that leverages sensor analytics to maximize equipment productivity by initiating preventive maintenance when the events emitted by a device indicate that failure may be imminent. It included a more comprehensive interface for real-time monitoring and analysis in the head office, and a simpler tablet interface for field service personnel to receive information about wells requiring service. Palmer finished the analytics segment with a brief look at LiveView Web, a zero-code environment for building operational intelligence dashboards.

After the break, Adam Steltzner, NASA’s lead engineer on the Mars Rover and author of The Right Kind of Crazy: A True Story of Teamwork, Leadership, and High-Stakes Innovation, talked about innovation, collaboration and decision-making under pressure. Check out the replay of the keynote for his talk, a fascinating story of the team that built and landed the Mars landing vehicles, along with some practical tips for leaders to foster exploration and innovation in teams.