For the first breakout of the day, I attended Dan Jeavons’ session on Shell’s business architecture practice. For such a massive company – 93,000 employees in 90 countries – this was a big undertaking, and they’ve been at it for five years.

He defines business architecture as the business strategy, governance, organization and key business process information, as well as the interaction between these concepts, which is taken directly from the TOGAF 9 definition. In practice, it involves design, must be implemented rather than remain purely conceptual, and has to be flexible enough to meet business agility requirements. They started on their business architecture journey because of factors that affect many other companies: globalization, competition, regulatory requirements, realization of current inefficiencies, and the emergence of a single governance board for the multinational company.

Their early efforts were centered on a huge new ERP system, which ran into problems caused by local variations from the global standard process models. “Process” (and ERP) became naughty words to many people, with connotations of bloated, not-quite-successful projects. Building on the parts that did succeed, their central business architecture initiative actually started with process modeling and design: standard processes across the different business areas, based on global best practices. This was used to create and roll out a standard set of financial processes, with a small core team doing the process redesign and coordinating with IT to create a common metamodel and architectural standards. As they found out, many other parts of the company had similar process issues – HR, IT and others – so they branched out to start building a business architecture for other areas as well.

They had a number of challenges in creating a process design center of excellence:

- Degree of experience with the tool and the methodology; initial projects weren’t sufficiently structured, reducing benefits.

- Perceived value to the business, especially near-term versus long-term ROI.

- Impact of new projects, and ensuring that they follow the methodology.

- Governance and high-level sponsorship.

They also found a number of key steps to implementing their CoE and process architecture:

- Sponsorship

- Standard methodology, embedded within standard project delivery framework

- Communication of success stories

Then, they migrated their process architecture initiative to a full business architecture by looking at the relationships to other elements of business architecture; this led them to do business architecture (mostly) as part of process design initiatives. Recent data quality/management initiatives have also brought a renewed focus on architecture, and Jeavons feels that although the past five years have been about process, the next several years will be more about data.

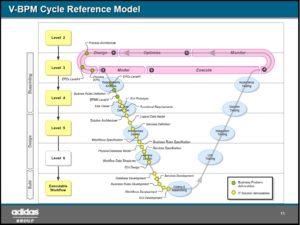

He showed a simplified version of their standard metamodel, including aspects of process hierarchy models, process flow models, strategy models and organization models. He also showed a high-level view of their enterprise process model in a value stream format, with core processes surrounded by governing and supporting processes. From there, he showed how they link the enterprise process model to their enterprise data catalogue, which links to the “city plan” of their IT architecture and portfolio investment cycle; this allows for traceability as well as transparency. They’ve also been linking processes to strategy – this is one of the key points of synergy between EA and BPM – so that business goals can be driven down into process performance measures.
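To make the traceability idea concrete, here is a minimal sketch of how links between processes, data entities, IT systems and business goals might be modeled and queried. It is only an illustration: the class, function names and sample data are all hypothetical and do not come from Shell’s metamodel or the session.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Process:
    """A process in an enterprise process model (hypothetical shape)."""
    name: str
    data_entities: List[str] = field(default_factory=list)  # links into a data catalogue
    goals: List[str] = field(default_factory=list)          # business goals it supports


def trace_systems(process: Process, catalogue: Dict[str, List[str]]) -> List[str]:
    """Follow process -> data entity -> IT system links; return the systems touched."""
    systems = set()
    for entity in process.data_entities:
        systems.update(catalogue.get(entity, []))
    return sorted(systems)


def processes_for_goal(goal: str, processes: List[Process]) -> List[str]:
    """Drive a business goal down to the processes whose measures should reflect it."""
    return [p.name for p in processes if goal in p.goals]


# Illustrative data only; none of these names come from the session.
catalogue = {
    "Invoice": ["ERP Finance"],
    "Supplier": ["ERP Finance", "Supplier Portal"],
}
pay_invoice = Process(
    "Pay supplier invoice", ["Invoice", "Supplier"], ["Reduce payment cycle time"]
)
hire = Process("Onboard employee", ["Employee"], ["Reduce time to hire"])

print(trace_systems(pay_invoice, catalogue))
print(processes_for_goal("Reduce payment cycle time", [pay_invoice, hire]))
```

In this toy version, a process with no catalogued data entities simply traces to no systems, which is the kind of gap that a transparency exercise would be expected to surface.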

The EA and process design CoEs have been combined (interesting idea) into a single EA CoE, including process architects and business architects, among other architect positions. I’m not sure that you could include an entire BPM CoE within an EA CoE due to BPM’s operational implementation focus, but there are certainly a lot of overlapping activities and functions, and there should be overlapping roles and resources.

He shared lots of great lessons learned, as well as some frank assessments of the problems that they ran into. I found it particularly interesting how they morphed a process design effort into an entire business architecture, based on their experience that the business really is driven by its processes.