To finish up my time at BPM 2019, an academic research conference, I attended one of the industry forum sessions, which highlight initiatives that bring together academic research and practical applications in industry. These are shorter presentations than the research sessions, although they still have formal published papers documenting their research and findings; check the proceedings for the full author list for each paper and the details of the research.

Process Improvement Benefits Realization: Insights from an Australian University. Wasana Bandara, QUT

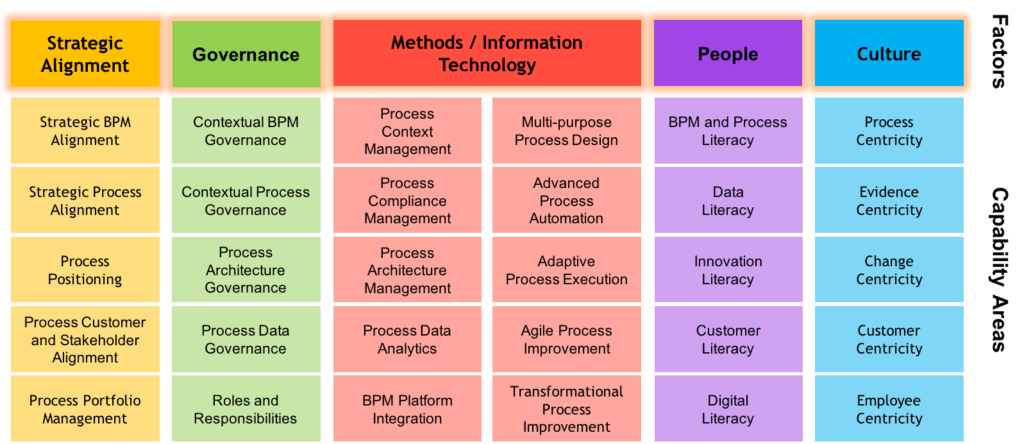

The first presentation was about process improvement at the author’s university. They took on an enterprise business process improvement project in 2017, and have developed a Business Process Improvement Office (BPIO, aka centre of excellence). They wanted measurable benefits, so they created a benefits realization framework that runs in parallel with their process improvement lifecycle, building the idea and measurement of benefits in from the beginning of any project.

(From the industry forum research paper)

They found that identifying and aligning the ideas of benefits realization early in a project created awareness and increased receptiveness to unexpected benefits. There was a good discussion afterwards on the details of their framework and how it impacts the business areas as they move their manual processes to more automation and management.

Enabling Process Mining in Aircraft Manufacture: Extracting Event Logs and Discovering Processes from Complex Data. Maria Teresa Gómez López, Universidad de Sevilla

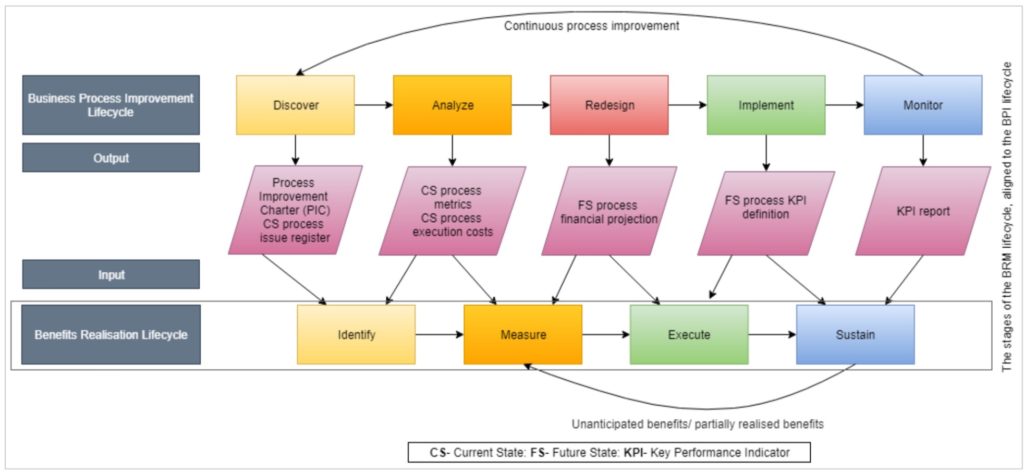

The second presentation was about using process mining to discover the processes used in aircraft manufacture. The data underlying the process mining is generated by IoT manufacturing devices, and hence has much higher volumes than a typical business process mining application, requiring preprocessing to aggregate the raw log data into events suitable for process mining. They also wanted to be able to integrate knowledge from engineers involved in the manufacturing process to capture best practices and help extract the right data from the raw data logs.

(From the industry forum research paper)

They had some issues with analyzing the log data, such as incorrect data (an aircraft was in two stations at the same time, or was moving backwards through the assembly process) and incomplete or insufficient information. Future research and work on this will include potential integration with big data architectures to handle the volume of raw log data, and finding new ways of analyzing the log data to have cleaner input to the process discovery algorithms.
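To make the preprocessing and data quality issues a bit more concrete, here is a minimal sketch of the general idea, not the authors' actual pipeline. It assumes a hypothetical raw reading format of `(aircraft_id, station, timestamp)` and assumes that stations are numbered in assembly order; the names `to_events` and `find_anomalies` are made up for illustration. Consecutive readings at the same station are collapsed into one event, and then each aircraft's events are checked for the two kinds of incorrect data mentioned in the talk.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical raw readings: (aircraft_id, station, ISO timestamp).
# Assumption: station numbers increase along the assembly line.
RAW = [
    ("A1", 1, "2019-01-01T08:00"),
    ("A1", 1, "2019-01-01T08:05"),
    ("A1", 2, "2019-01-02T09:00"),
    ("A1", 2, "2019-01-02T09:30"),
    ("A2", 2, "2019-01-03T10:00"),
    ("A2", 1, "2019-01-04T10:00"),  # moves backwards through assembly
    ("A3", 1, "2019-01-05T08:00"),
    ("A3", 2, "2019-01-05T08:00"),  # in two stations at the same time
]


def to_events(readings):
    """Collapse consecutive readings at one station into a single event."""
    by_case = defaultdict(list)
    for case, station, ts in readings:
        by_case[case].append((datetime.fromisoformat(ts), station))

    events = []
    for case, rows in by_case.items():
        rows.sort()  # order each aircraft's readings by time
        start, station = rows[0]
        end = start
        for ts, st in rows[1:]:
            if st == station:
                end = ts  # still at the same station: extend the event
            else:
                events.append({"case": case, "station": station,
                               "start": start, "end": end})
                start, station, end = ts, st, ts
        events.append({"case": case, "station": station,
                       "start": start, "end": end})
    return events


def find_anomalies(events):
    """Flag backwards moves and simultaneous presence in two stations."""
    anomalies = []
    for prev, cur in zip(events, events[1:]):
        if prev["case"] != cur["case"]:
            continue
        if cur["start"] <= prev["end"]:
            anomalies.append((cur["case"], "simultaneous"))
        if cur["station"] < prev["station"]:
            anomalies.append((cur["case"], "backwards"))
    return anomalies


EVENTS = to_events(RAW)
ANOMALIES = find_anomalies(EVENTS)
```

With this sample data, the eight raw readings collapse to six events, and `ANOMALIES` flags aircraft `A2` as moving backwards and `A3` as being in two stations at once. A real implementation would also need the engineers' knowledge to decide which flagged cases are sensor errors and which are legitimate rework.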

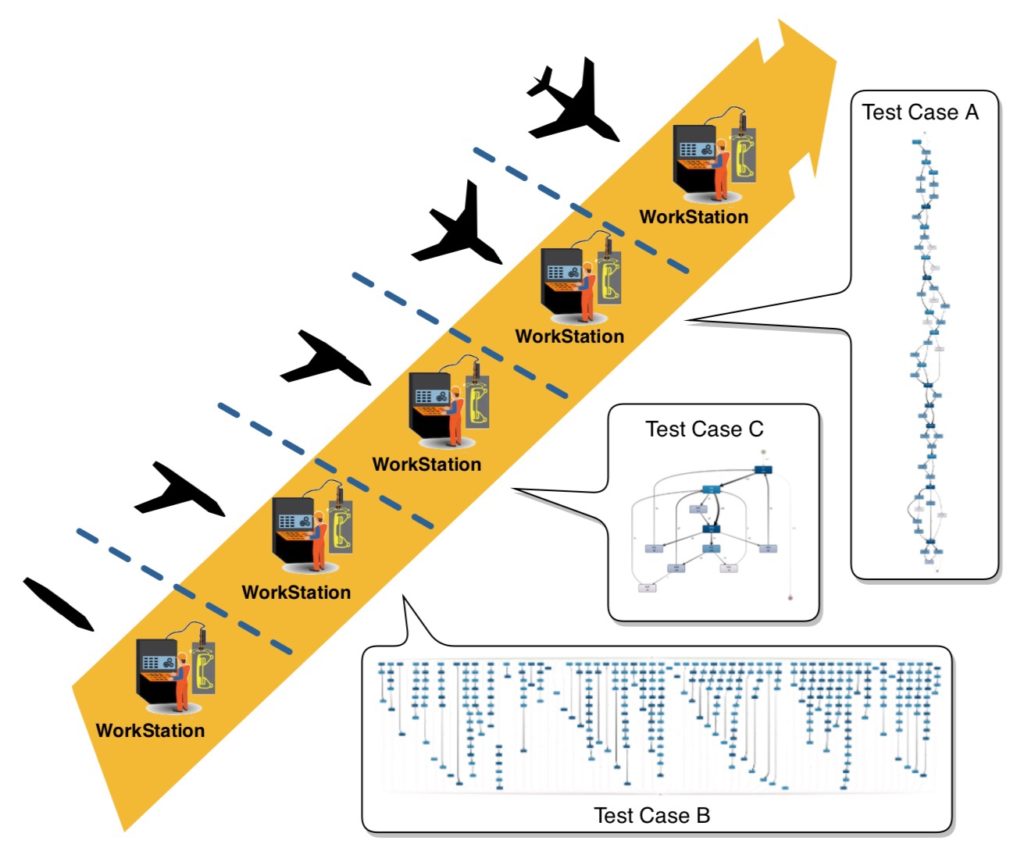

The adoption of globally unified process standards via a multilingual management system: The case of Marabu, a worldwide manufacturer of printing inks and creative colours of the highest quality. Klaus Cee, Marabu, and Andreas Schachermeier, ConSense

The next presentation was about how Marabu, a printing ink company, standardized and aligned their multinational subsidiaries’ business processes with the parent company. This was not a research project per se but a process and knowledge management implementation project, although ConSense is a spinoff consulting company from a university project several years ago. They had some particular challenges with developing uniform multilingual processes that could have local variants, integrated with the needs of quality, environmental and occupational safety management.

Data-driven Deep Learning for Proactive Terminal Process Management. Andreas Metzger, University of Duisburg-Essen

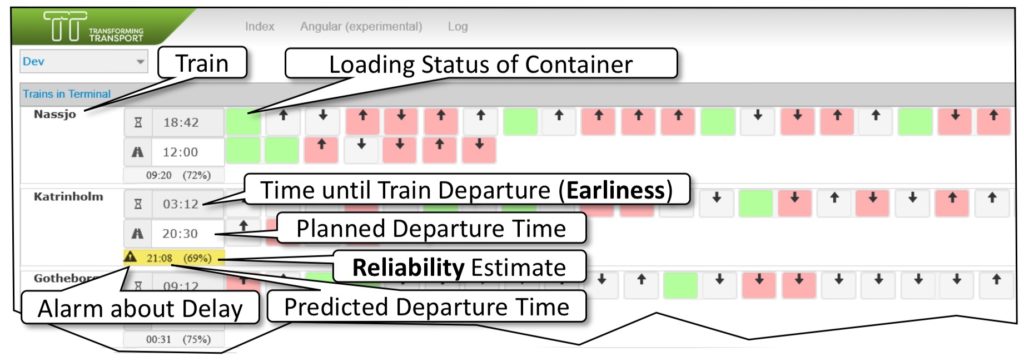

The final paper in this industry forum session was on the digitalization of the Duisburg intermodal container shipping port, a large inland port dealing with about 10,000 containers arriving and departing by rail and truck each month. Data streams from terminal equipment captured information about the movement of containers, cranes and trains; their goal was to predict based on current state whether a given train would be loaded and could depart on time, and proactively dispatch resources to speed up loading when necessary. This sounds like a straightforward problem, but the data collected can lead to erroneous results: waiting for more data to be collected can lead to more accurate predictions, but earlier intervention can resolve the problem faster.

They applied multiple parallel deep learning models (recurrent neural networks) to improve the predictions, dynamically trading off between accuracy and earliness of detection. They were able to increase the number of trains leaving on time by 4.7%, which is a great result when you consider the cost of a delayed train.

(From the industry forum research paper)

They used RNNs as their deep learning models because they can handle arbitrary length process instances without sequence or trace encoding, and can perform predictions at any checkpoint with a single model; there’s a long training time and it’s compute-intensive, but that pays off in this scenario. Lessons that they learned included the fact that the deep learning ensembles worked well out of the box, but also that the quality of the data used as input is a key concern for accuracy: if you’re going to spend time working on something, work on data cleansing before you work on the deep learning algorithms.
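The trade-off between accuracy and earliness can be illustrated with a small sketch. This is one plausible way to use an ensemble for that trade-off, and I'm not claiming that it is exactly what the authors implemented: treat the share of ensemble members that predict a late departure as a rough reliability score, and intervene at the first checkpoint where that share reaches a threshold. The function name `first_alarm`, the vote data and the threshold values are all hypothetical.

```python
def first_alarm(checkpoints, threshold):
    """Return the index of the earliest checkpoint at which the share of
    models predicting a delay reaches the threshold, or None if it never does."""
    for i, votes in enumerate(checkpoints):
        share = sum(votes) / len(votes)  # fraction of models predicting a delay
        if share >= threshold:
            return i
    return None


# Hypothetical votes from 5 models at 4 successive checkpoints for one train;
# True means "this model predicts that the train will depart late".
VOTES = [
    [True, False, False, True, False],  # 40% predict a delay
    [True, True, False, True, False],   # 60%
    [True, True, True, True, False],    # 80%
    [True, True, True, True, True],     # 100%
]

EARLY = first_alarm(VOTES, 0.5)     # lower threshold: act sooner, less certain
CAUTIOUS = first_alarm(VOTES, 0.9)  # higher threshold: act later, more certain
```

Here the lower threshold triggers an intervention at the second checkpoint (index 1), while the higher threshold waits until the last one (index 3). Lowering the threshold buys time to dispatch resources, at the cost of more false alarms.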

The last segment following this is a closing panel, so this is the last of my coverage from BPM 2019. I haven’t attended this conference in many years, and I’m glad to be back. Looking forward to next year in Seville!

It’s been great to catch up with a lot of people who I haven’t seen since the last time that I attended, plus a few who I see more often. WU Wien has been a welcoming host as well as having a campus full of extraordinary modern architecture, with a well-organized conference and great evening social events.