Next week at Dreamforce, Active Endpoints’ new Cloud Extend for Salesforce will officially go live. I had a briefing a few months back when it hit beta, and an update last week in which I saw little new functionality since the first briefing, but some nice case studies and partner support.

Cloud Extend for Salesforce is a helper layer, integrated with Salesforce, that allows business users to create lightweight processes and guides – think screenflows with context-sensitive scripting – to help users through complex processes in Salesforce. In Salesforce, as in many other ERP and CRM systems, achieving a specific goal sometimes requires a complex set of manual steps. Adding a bit of automation and a bit of structure, along with some documentation displayed to the user at the right time, can mean the difference between a process being done correctly and having some critical steps missed. If you look at the Cloud Extend case study with PSA Insurance & Financial Services covered in today’s press release, a typical “first call” sales guide created with Cloud Extend includes such actions as recording the prospect’s current policy expiration date, setting reminders for call-back, sending out collateral and emails to the prospect, and interacting with other PSA team members via Salesforce Chatter. This means that fewer follow-up items are missed, and it improves the overall productivity of the sales reps, since some of the actions are automated or semi-automated. Michael Rowley, CTO of Active Endpoints, wrote about Cloud Extend at the time of the beta release, covering more of the value proposition that they are seeing by adding process to data-centric applications such as Salesforce. Lori MacVittie of F5 wrote about how, although data and core processes can be commoditized and standardized, customer interaction processes need to be customized to be of greatest value. Interestingly, the end result is still a highly structured, predefined process, although one that a business user can create using a simple tree structure.

When I saw a demo of Cloud Extend, I was reminded of similar guides and scripts that I’ve seen overlaid on other enterprise software to assist in user interactions, usually to prompt telemarketing or customer service staff on what to say to a customer on the phone. This is more interactive than just scripts, however: it can actually update Salesforce data as part of the screenflow, which makes it more of a BPM tool than just a user scripting tool. Considering that the ActiveVOS BPMS is running behind the scenes, that shouldn’t come as a surprise, since ActiveVOS is optimized around integration activities. Yet this is not an Active Endpoints application: the anchor application is Salesforce, and Cloud Extend is a helper app around it rather than taking over the user experience. In other words, instead of a BPMS silo in the cloud, as we’re seeing from many BPMS cloud vendors, this uses a BPMS platform to provide functionality integrated into another platform. A cloud “OEM” arrangement, if you please.

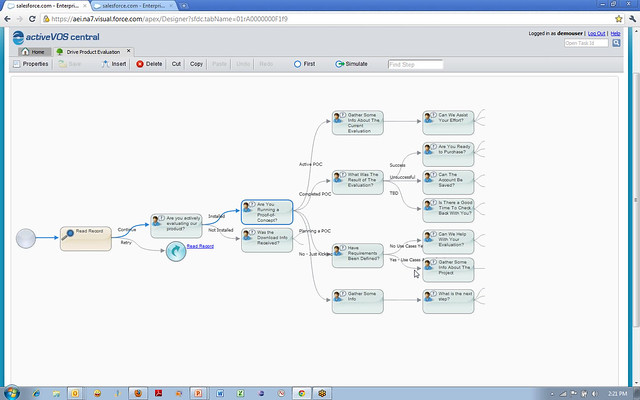

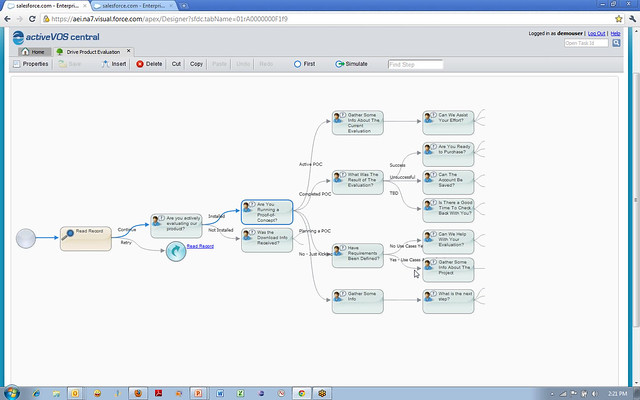

The Guide Designer – a portal into the ActiveVOS functionality from within Salesforce – allows a non-technical user to create a screen flow, add more screens and automated steps, call subflows, and call Salesforce functions. The flow can be simulated graphically, stepping forwards and backwards through it to test different conditions; note that this simulation is meant to determine flow correctness, not to optimize the flow under load, and hence is quite different from the simulation that you might see in a full-featured BPA or BPMS tool. Furthermore, this is really intended to be a single-person screen flow, not a workflow that moves work between users: sort of like a macro, only more so. Although it is possible to interrupt a screen flow and have another person restart it, that doesn’t appear to be the primary use case.
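
To make the distinction concrete, here is a minimal Python sketch of correctness-style simulation for a single user: stepping forwards by choosing an answer, and backwards by retracing the path taken. The `Step` and `GuideSimulator` classes and the sample first-call prompts are hypothetical illustrations, not Cloud Extend’s actual model or API.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Step:
    """Hypothetical guide step: a prompt plus answer -> next-step-id branches."""
    prompt: str
    branches: dict[str, str] = field(default_factory=dict)


class GuideSimulator:
    """Walks a guide forwards and backwards to check which paths it allows.

    This only checks flow logic for one user; it does not model load,
    timing or resources the way BPA-style simulation does.
    """

    def __init__(self, steps: dict[str, Step], start: str) -> None:
        self.steps = steps
        self.trail = [start]  # visited step ids, most recent last

    @property
    def current(self) -> str:
        return self.trail[-1]

    def answer(self, choice: str) -> str:
        """Step forwards along the branch for `choice`."""
        branches = self.steps[self.current].branches
        if choice not in branches:
            raise ValueError(f"{choice!r} is not an answer at step {self.current!r}")
        self.trail.append(branches[choice])
        return self.current

    def back(self) -> str:
        """Step backwards to the previous step (stays put at the start)."""
        if len(self.trail) > 1:
            self.trail.pop()
        return self.current


# Hypothetical first-call guide used to exercise the simulator.
FIRST_CALL = {
    "start": Step("Does the prospect have a current policy?",
                  {"Yes": "expiry", "No": "collateral"}),
    "expiry": Step("Record the policy expiration date", {"Recorded": "end_1"}),
    "collateral": Step("Send collateral by email?", {"Yes": "end_2", "No": "end_3"}),
    "end_1": Step("End of guide"),
    "end_2": Step("End of guide"),
    "end_3": Step("End of guide"),
}
```

Keeping the visited trail as a stack is what makes “step backwards” trivial: going back just pops the last step, and a different answer can then be tried from there.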

There are a few things that a non-technical user likely couldn’t do without some initial help, such as creating automated steps and connecting the completed guides to the Salesforce portal, but it is pretty easy to use. It uses a simple branching tree structure to represent the flow, where the presence of multiple possible responses at a step creates the corresponding number of outbound branches. In flowcharting terms, that means only OR gates, no merges and no loopbacks (although there is a Jump To Step capability that would allow looping back): it’s really more of a decision tree than what you might think of as a standard process flow.
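
The “only OR gates, no merges” rule can be checked mechanically. The sketch below assumes a hypothetical representation of a guide as a mapping from each step to the steps its answers lead to, with jump-to links deliberately left out since they are the only way to loop back. It rejects any step reached by more than one path and returns every root-to-leaf path; in a pure decision tree there is exactly one such path per end step.

```python
from __future__ import annotations

from collections import Counter


def check_decision_tree(edges: dict[str, list[str]], root: str) -> list[list[str]]:
    """Verify the branching-only shape and return every root-to-leaf path.

    `edges` maps each step to the steps its answers lead to (jump-to links
    excluded). With at most one inbound path per step and none into the
    root, no cycle is reachable from the root, so the walk terminates.
    """
    incoming = Counter(t for targets in edges.values() for t in targets)
    merges = sorted(s for s, n in incoming.items() if n > 1)
    if merges:
        raise ValueError(f"steps with more than one inbound path (merges): {merges}")
    if incoming[root]:
        raise ValueError("the root step cannot be the target of a branch")

    paths: list[list[str]] = []
    stack = [[root]]
    while stack:
        path = stack.pop()
        nexts = edges.get(path[-1], [])
        if not nexts:
            paths.append(path)  # a leaf, i.e. an end step
        # push in reverse so the first answer's branch is explored first
        stack.extend(path + [n] for n in reversed(nexts))
    return paths
```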

Creating a guide, or a “guidance tree” as it is called in the Guide Designer, consists of adding an initial step and specifying whether it is a screen (i.e., a script for the user to read), an automated step that will call a Salesforce or other function, a subflow step that will call a predefined subflow, a jump-to step that will transfer execution to another point in the tree, or an end step. Screen steps include a prompt and up to four answers to the prompt; the prompt is the question that the user will answer at this point in response to what is happening on their customer call. One outbound path is added to the step for each possible answer, and a subsequent step is automatically created on that path. The branches keep growing until end steps are defined on each branch.
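
A rough Python model of that construction process might look like the following. It assumes a hypothetical `Step` class in which adding an answer to a screen step creates one outbound branch with an unspecified placeholder step on it, and `open_branches` reports which branches have not yet been finished off. It only models answer-driven branching; how automated and subflow steps chain onward wasn’t covered in the briefing, so they are not modelled here.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Kind(Enum):
    SCREEN = "screen"        # prompt for the user, with up to four answers
    AUTOMATED = "automated"  # calls a Salesforce or other function
    SUBFLOW = "subflow"      # calls a predefined subflow
    JUMP_TO = "jump_to"      # transfers execution elsewhere in the tree
    END = "end"


MAX_ANSWERS = 4


@dataclass
class Step:
    kind: Kind | None = None  # None: placeholder created for a new branch
    prompt: str = ""
    branches: dict[str, Step] = field(default_factory=dict)  # answer -> next step

    def add_answer(self, answer: str) -> Step:
        """Add an answer to a screen step, creating one outbound path and a new step on it."""
        if self.kind is not Kind.SCREEN:
            raise ValueError("only screen steps have answers")
        if answer in self.branches:
            raise ValueError(f"duplicate answer {answer!r}")
        if len(self.branches) >= MAX_ANSWERS:
            raise ValueError(f"a screen step allows at most {MAX_ANSWERS} answers")
        self.branches[answer] = Step()
        return self.branches[answer]


def open_branches(step: Step, path: tuple[str, ...] = ()) -> list[tuple[str, ...]]:
    """Return the answer paths that do not yet finish in an end or jump-to step."""
    if step.kind in (Kind.END, Kind.JUMP_TO):
        return []
    if not step.branches:
        return [path]
    unfinished: list[tuple[str, ...]] = []
    for answer, child in step.branches.items():
        unfinished.extend(open_branches(child, path + (answer,)))
    return unfinished
```

A guide would then be ready to publish once `open_branches(root)` comes back empty, which mirrors the rule that branches keep growing until every one has an end step.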

A complex tree can obviously get quite large, but the designer UI has a nice way of scrolling up and down the tree: as you select a particular step, you see only the connected steps twice removed in either direction, with a visual indicator to show that the branch continues to extend in that direction.
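
That windowed view can be sketched as a small tree query: given the selected step, collect ancestors and descendants up to two levels away, plus flags saying whether the tree continues beyond the window in each direction (which is where a “branch continues” indicator would go). The `Node` class and `visible_window` function are hypothetical; this is an illustration of the idea, not the Guide Designer’s code.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(eq=False)
class Node:
    name: str
    parent: Node | None = field(default=None, repr=False)
    children: list[Node] = field(default_factory=list)

    def add(self, name: str) -> Node:
        child = Node(name, parent=self)
        self.children.append(child)
        return child


def visible_window(selected: Node, depth: int = 2):
    """Return (ancestors, descendants, more_above, more_below) for a selected step.

    Ancestors are listed nearest first; descendants level by level. The two
    flags say whether the tree extends beyond the visible window.
    """
    ancestors: list[str] = []
    node = selected.parent
    while node is not None and len(ancestors) < depth:
        ancestors.append(node.name)
        node = node.parent
    more_above = node is not None

    descendants: list[str] = []
    frontier = [selected]
    for _ in range(depth):
        frontier = [child for n in frontier for child in n.children]
        descendants.extend(n.name for n in frontier)
    more_below = any(n.children for n in frontier)
    return ancestors, descendants, more_above, more_below
```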

Regardless of the complexity of the guidance tree, there is no palette of shapes or anything vaguely BPMN-ish: the user just creates one step after another in the tree structure, and the prompt and answers create the flow through the tree. Active Endpoints see this tree-like method of process design, rather than something more flowchart-like, as a key differentiator. Under the covers, it is actually creating BPMN that is published to BPEL, but the designer user interface limits the design to a simple branching tree structure that is a subset of both BPMN and BPEL.
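
To illustrate why a branching tree fits inside BPMN, the sketch below renders a tree of prompts and answers as BPMN 2.0 XML using only a start event, user tasks, exclusive gateways, sequence flows and end events. This is not what Cloud Extend generates (its internal BPMN and the BPEL it publishes weren’t shown); the dict-based tree format and the `urn:example:guide` target namespace are assumptions, and jump-to steps are left out. Because each node is emitted exactly once, every element has at most one incoming sequence flow, so no merging gateway is ever needed.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

BPMN_NS = "http://www.omg.org/spec/BPMN/20100524/MODEL"
ET.register_namespace("bpmn", BPMN_NS)


def q(tag: str) -> str:
    """Qualify a tag name with the BPMN 2.0 model namespace."""
    return f"{{{BPMN_NS}}}{tag}"


def tree_to_bpmn(tree: dict) -> str:
    """Render a guidance tree as BPMN 2.0 XML (process only, no diagram layout).

    Each node is {"prompt": str, "answers": {answer: child}}; a node with no
    answers is treated as an end step. Multiple answers become an exclusive
    (XOR) gateway whose outgoing flows are named after the answers.
    """
    defs = ET.Element(q("definitions"), {"targetNamespace": "urn:example:guide"})
    process = ET.SubElement(defs, q("process"), {"id": "guide", "isExecutable": "true"})
    counter = 0

    def new_id(prefix: str) -> str:
        nonlocal counter
        counter += 1
        return f"{prefix}_{counter}"

    def flow(source: str, target: str, name: str | None = None) -> None:
        attrs = {"id": new_id("flow"), "sourceRef": source, "targetRef": target}
        if name:
            attrs["name"] = name
        ET.SubElement(process, q("sequenceFlow"), attrs)

    def emit(node: dict) -> str:
        """Emit the element(s) for a node and return the id of its entry element."""
        answers = node.get("answers", {})
        if not answers:
            end_id = new_id("end")
            ET.SubElement(process, q("endEvent"), {"id": end_id})
            return end_id
        task_id = new_id("task")
        ET.SubElement(process, q("userTask"), {"id": task_id, "name": node["prompt"]})
        if len(answers) == 1:
            answer, child = next(iter(answers.items()))
            flow(task_id, emit(child), answer)
        else:
            gateway_id = new_id("gateway")
            ET.SubElement(process, q("exclusiveGateway"), {"id": gateway_id})
            flow(task_id, gateway_id)
            for answer, child in answers.items():
                flow(gateway_id, emit(child), answer)
        return task_id

    start_id = new_id("start")
    ET.SubElement(process, q("startEvent"), {"id": start_id})
    flow(start_id, emit(tree))
    return ET.tostring(defs, encoding="unicode")
```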

Once a flow is created and tested, it is published, which makes it available to run in the Salesforce sales guides section directly on a sales lead’s detail page. As the guide executes, it displays a history of the steps and responses, making it easy for the user to see what’s happened so far while being guided through the steps.
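
The running history amounts to appending each prompt and the user’s response as the guide advances. Here is a minimal sketch, assuming a hypothetical `GuideRun` object and an `END` sentinel id for a finished guide; neither is taken from Cloud Extend.

```python
from __future__ import annotations

from dataclasses import dataclass, field

END = "end"  # hypothetical id meaning "the guide has finished"


@dataclass
class Screen:
    prompt: str
    answers: dict[str, str]  # answer -> id of the next step (or END)


@dataclass
class GuideRun:
    """A hypothetical running guide that records each step and response."""
    steps: dict[str, Screen]
    current: str
    history: list[tuple[str, str]] = field(default_factory=list)

    @property
    def finished(self) -> bool:
        return self.current == END

    def respond(self, answer: str) -> None:
        if self.finished:
            raise RuntimeError("the guide has already finished")
        step = self.steps[self.current]
        if answer not in step.answers:
            raise ValueError(f"{answer!r} is not offered at this step")
        self.history.append((step.prompt, answer))  # what the user sees so far
        self.current = step.answers[answer]


STEPS = {
    "start": Screen("Does the prospect have a current policy?",
                    {"Yes": "expiry", "No": END}),
    "expiry": Screen("Record the policy expiration date", {"Recorded": END}),
}
```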

Obviously, the Active Endpoints screen flows are executing in the cloud, although as of the April release they were hosted on Terremark rather than on Salesforce’s platform. Keeping it on an independent platform is critical for them, since there are other enterprise cloud software platforms, such as QuickBooks and SuccessFactors, with which they could integrate for the same type of benefits. Very little data is persisted in the process instances within Cloud Extend, just some execution metrics for reporting and the Salesforce object ID for linking back to records in Salesforce, so there is less concern about where this data is hosted: it will never contain any personally identifiable information about a customer.
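
As a rough illustration of how little such a record needs to hold, here is a hypothetical instance record: an opaque Salesforce record ID for linking back, plus execution metrics, with all customer details left in Salesforce. The field names and the sample 15-character ID are made up; Cloud Extend’s actual schema wasn’t described.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class InstanceRecord:
    """Hypothetical data persisted for one guide instance outside Salesforce.

    Only an opaque record ID and execution metrics: no names, phone numbers
    or other customer details, which stay in Salesforce.
    """
    salesforce_object_id: str  # links back to the lead/contact record
    guide_name: str
    steps_completed: int
    duration_seconds: float
    completed: bool


record = InstanceRecord("00Q000000000001", "first-call", 7, 312.5, True)
payload = json.dumps(asdict(record))  # what would be stored for reporting
```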

We’re starting to see client-side screen flow creation from a few of the BPMS vendors – I covered TIBCO’s Page Flow Models in my review of AMX/BPM last year – but those screen flows are only available at a step in a larger BPMS model, whereas Cloud Extend has encapsulated that capability for use in other platforms. For small, nimble vendors who don’t need to own the whole application, providing embeddable process functionality for data-centric applications can make a lot of sense, especially in a cloud environment where they don’t need to worry about the usual software OEM problems of installation and maintenance.

I’m curious about whatever happened to Salesforce’s Visual Process Manager and whether it will end up competing with Cloud Extend; I had a briefing of Visual Process Manager over a year ago that amounted to little, and I haven’t heard anything about it since. Neil Ward-Dutton mentions these two possibly-competing offerings in his post on the beta release of Cloud Extend, but as he points out, Visual Process Manager is more of a general purpose workflow tool, while Cloud Extend is focused on task-specific screen flows within the Salesforce environment. Just about the opposite of what you might have expected to come out of these respective vendors.