San Francisco! Finally, a large vendor figured out that they really can do a 2,500-person conference here rather than in Las Vegas; it just means that attendees are spread out in a number of local hotels rather than in one monster location. Feels like home.

It seems impossible that I haven’t blogged about TIBCO in so long: I know that I was at last year’s conference but was a speaker (as I am this year) so may have been distracted by that. Also, they somehow missed giving me a briefing about the upcoming ActiveMatrix BPM release, which was supposed to be relatively minor but ended up a bit bigger; I’ll be at the breakout session on that later today.

We started the first day with a marathon keynote, with TIBCO CEO Vivek Ranadive welcoming San Francisco’s mayor, Ed Lee, for a brief address about how technology is fueling San Francisco’s growth and employment, as well as helping the city government to run more effectively. The city actually has a chief data officer responsible for its open data initiatives.

Ranadive addressed the private equity buy-out of TIBCO head-on: 15 years ago, they took the company public, and by the end of this year, they will be a private company again. I think that this is a good thing, since it removes them from the pressures of quarterly public filings, which artificially impact product announcements and sales. It allows them to make any necessary organizational restructuring or divestiture without being punished on the stock market. It's also way better than being absorbed by one of the bigger tech companies, where the product lines would have to be realigned with incumbent technologies. He talked about key changes in the past years: the explosion of data; the rise of mobility; the emergence of social platforms; the rise of Asian economies; and how math is trumping science by making the “how” more important than the “why”. Wicked problems, but some wicked solutions, too. He claims that every industry will have an “Uberization”: controversies aside, companies such as Uber and AirBnB are letting service businesses flourish on a small scale using technology and social networks.

We then heard from Malcolm Gladwell (he featured Ranadive in one of his books) on technology-driven transformation, and the kinds of attitudes that make this possible. He told the story of Malcolm McLean, who created the first feasible intermodal containerized shipping in the 1950s because of his frustration with how long it took to unload his truck fleet at seaports, and how that innovation transformed the physical goods economy. In order to do this, McLean had to overcome the popular opinion that containerized shipping would fail (based on earlier failed attempts by others). As Gladwell put it, he had the three necessary characteristics of successful entrepreneurs: he was open/imaginative with creative ideas; he was conscientious and had the discipline to bring ideas to fruition, including a transformation of the supply chain and sales model; and he was “disagreeable”, that is, had the resolve to pursue an idea in the face of his peers’ disapproval and ridicule. Every transformative innovation must be driven by someone with these three traits, who has the imagination to reframe the incumbent business to address unmet needs, and kill the sacred cows. Great talk.

Ranadive then invited Marc Andreessen on stage for a conversation (Andreessen thanked him for letting him “follow Malcolm freaking Gladwell on the stage”) about innovation, which Andreessen says is currently driven by mobile devices: businesses now must assume that every customer is connected 24×7 with a mobile device. This provides incredible opportunities, such as allowing customers to order products/services on the go, but also threats for businesses behind the curve, who will see customers comparing them to their competitors in real time before making a purchasing decision. They discussed the future of work; Andreessen sees this as leveraging small teams, but says that things need to change to make that successful, including incentives (a particular interest of mine, since I’ve been looking at incentives for collaboration amongst knowledge workers). Diversity is becoming a competitive advantage since it draws talent from a larger pool. He talked about the success rates of typical venture-funded companies, such as those that they fund: of 4,000 companies, about 15 will make it to being big companies, that is, with a revenue of $100M or more that would position them to go public; most of their profits as a VC come from those 15 companies. They fund good ideas that look like terrible ideas, because if everyone thought that these were great ideas, the big companies would already be doing them; the trick is filtering out all of the ideas that look terrible because they actually are. More important is the team: a bad team can ruin a good idea, but a great team with a bad idea can find their way to a good idea.

Next up was TIBCO’s CTO Matt Quinn talking with Box CEO Aaron Levie: Box has been innovating in the enterprise by taking the consumer cloud storage that we were accustomed to, and bringing it into the enterprise space. This not only enables internal innovation because of the drastically lower cost and simpler user experience than enterprise content solutions such as SharePoint, but also has the ability to transform the interface between businesses and their customers. Removing storage constraints is critical to supporting that explosion of data that Ranadive talked about earlier, enabling the internet of everything.

We saw a pre-recorded interview that Ranadive did with PepsiCo CEO Indra Nooyi: she discussed the requirement to perform while transforming, and the increase in transparency (and loss of privacy) as companies seek to engage with customers. She characterized a leader’s role as that of not just envisioning the future, but making that vision visible and attainable.

Mitch Barns, CEO of Nielsen (the company that measures and analyzes what people watch on TV), talked about how their business of measurement has changed as people went from watching broadcast TV at times determined by the broadcasters, to time-shifting with DVRs and consuming TV content on mobile devices on demand. They have had to shift their methods and business to accommodate this change in viewing models, and deal with a flood of data about how, when and where that consumption is occurring.

I have to confess, by this point, 2.5 hours into the keynote without a break, my attention span was not what it could have been. Or maybe these later speakers just didn’t inspire me as much as Gladwell and Andreessen.

Martin Taylor from Vista Equity Partners, the soon-to-be owners of TIBCO, spoke next about what they do and their vision for TIBCO. Taylor was at Microsoft for 14 years before joining Vista, and helps to support their focus on applying their best practices and operating platform to technology companies that they acquire. Since their start in 2000, they have spent over $14B on 140 transactions in enterprise software. He showed some of their companies; since most of these are vertical industry solutions, TIBCO is the only name on that slide that I recognized. They attempt to foster collaboration between their portfolio companies: not just sharing best practices, but doing business together where possible; I assume that this could be very good for TIBCO as a horizontal platform provider that could be leveraged by their sibling companies. The technology best practices that they apply to their companies include improved product management roadmaps that address the needs of their customers, and improved R&D practices to speed product release cycles and improve quality. They’re still working through the paperwork and regulatory issues, but are starting to work with the TIBCO internal teams to ensure a smooth transition. It doesn’t sound as if there will be any big technology leadership changes, but rather a continued drive into new technologies including cloud, IoT, big data and more.

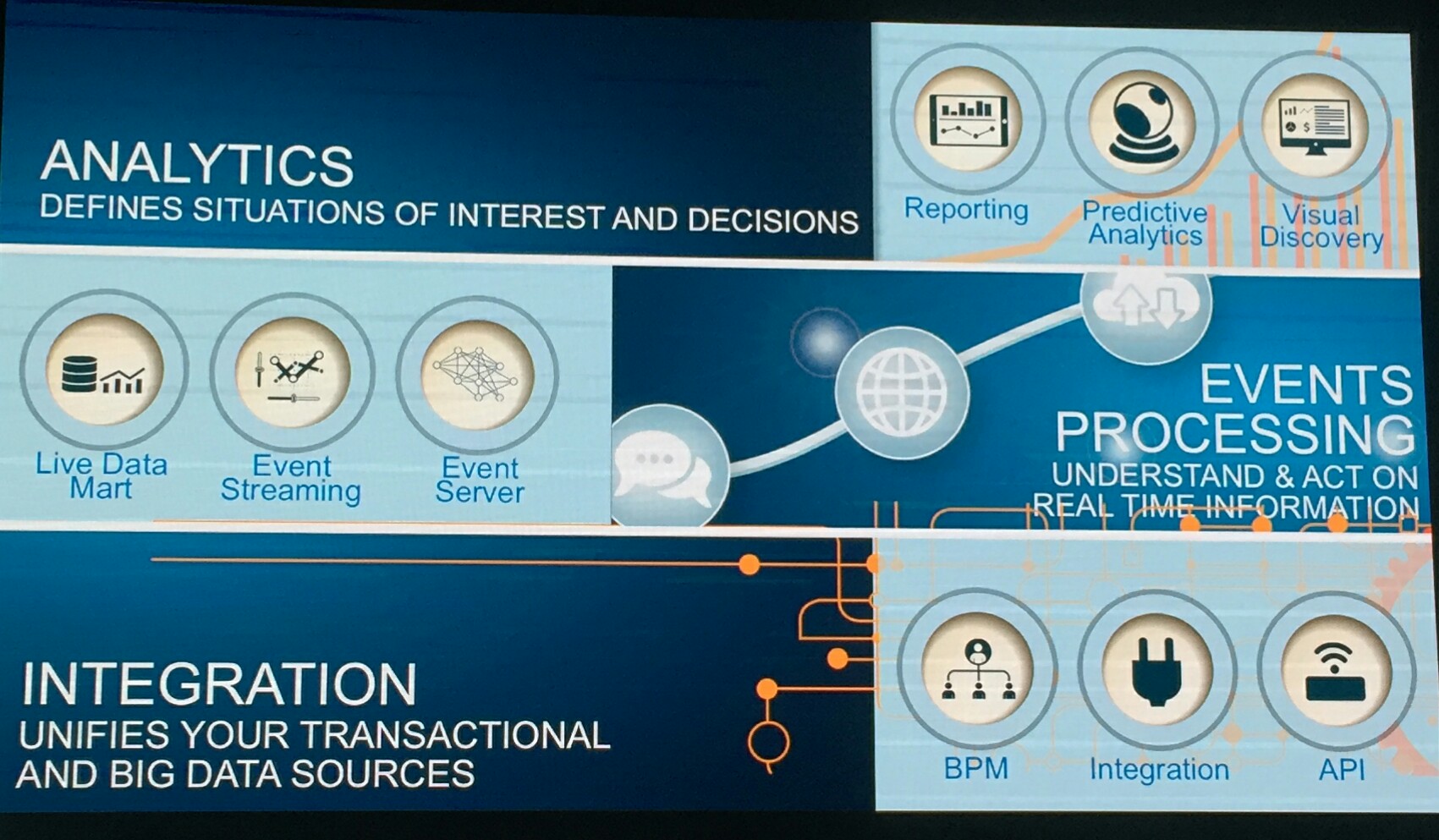

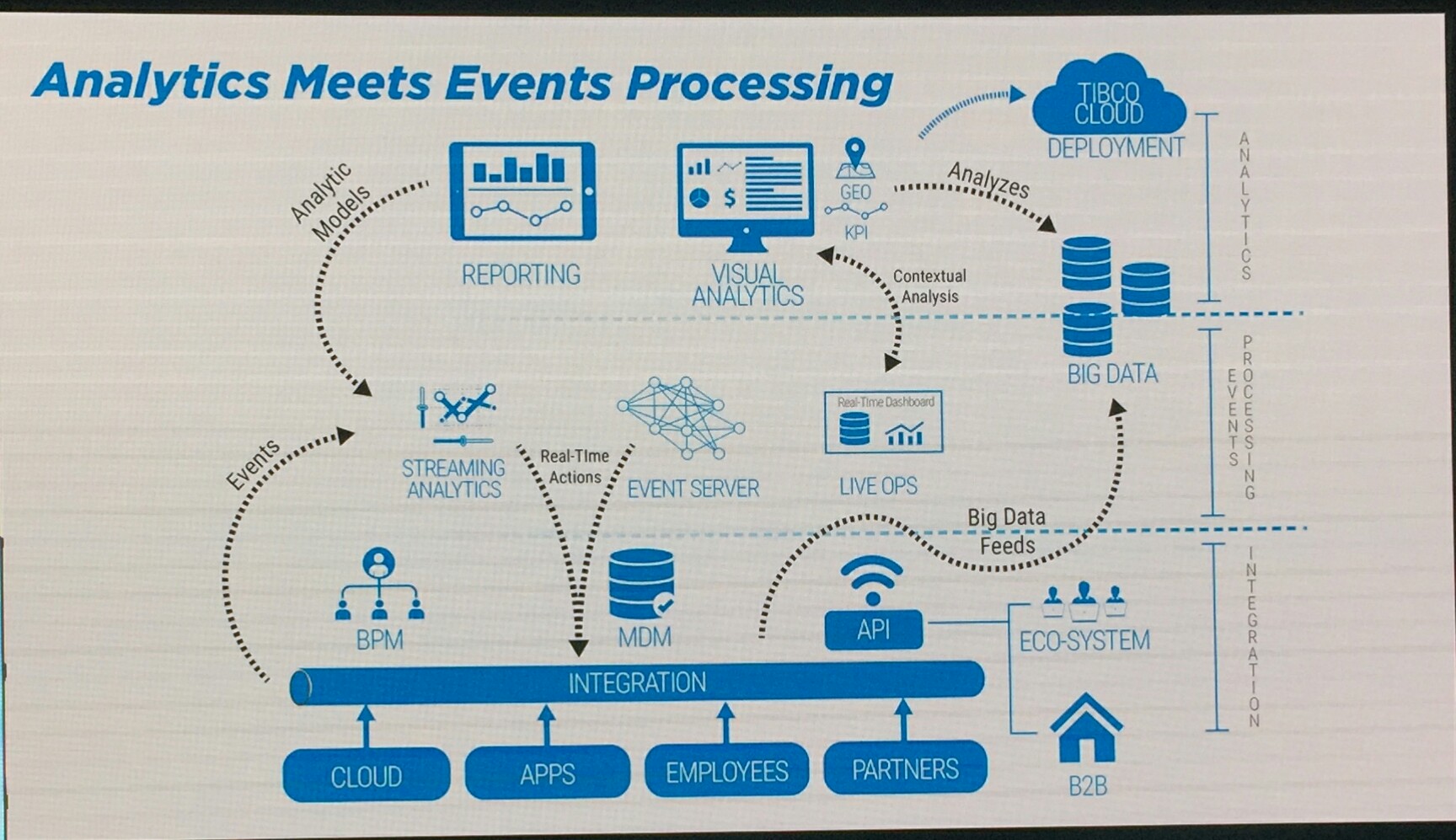

Murray Rode, TIBCO’s COO, finished up the keynote talking about their Fast Data positioning: organizations are collecting a massive volume of data, but that data has a definite shelf life and degrades in value over time. In order to take advantage of short-lived opportunities where timing is everything, you have to be able to analyze and take actions on that data quickly. As he put it, big data lets you understand what’s already happened, but fast data lets you influence what’s about to happen. To do this, you need to combine analytics to define situations of interest and decisions; event processing to understand and act on real-time information; and integration (including BPM) to unify your transactional and big data sources. Rode outlined the four themes of their positioning: expanded reach, ease of consumption, compelling user journey, and faster time to value; I expect that we will see more along these themes throughout the conference.

All in all, a great keynote, even though it stretched to an ass-numbing three hours.

Disclosure: TIBCO is paying my expenses to be at TIBCO NOW and a speaking fee for me to be on a panel tomorrow. What I write here is my own opinion, and I am not compensated in any way for blogging.