I had a briefing this week on Fujitsu’s just-released Interstage BPM version 11 as well as an update on their cloud platform. I’ll cover the cloud platform in another blog post, since this one is getting a bit long.

Version 11 has a lot of new features for handling ad hoc, collaborative, knowledge-intensive work. This isn’t surprising: the analysts and many of the vendors have woken up to the fact that not all processes (or all parts of all processes) are structured, and sometimes people need to be able to create their own processes or just find the right person to send a task to. In fact, Fujitsu, like many others, considers the bulk of the processes done today to be ad hoc, collaborative and knowledge-intensive, with a much smaller portion being structured people-centric work, and an even smaller portion purely automated system-centric processes.

Fujitsu is calling this “sense and respond”, where the “sense” part is about finding the right person for a task, and “respond” is about being able to dynamically create an ad hoc subtask. There’s a lot in the “sense” part that I haven’t seen in other products, such as making recommendations/selections of a person to perform a task based on their past performance at this task; this reminds me somewhat of the research that Ben Jennings is doing on establishing reputation within a social network by examining past behaviors, in addition to just doing assignments based on a predefined skills matrix or assigning tasks to people who you know. In addition to past performance, it also takes into account future tasks assigned to people in order to predict workload, and makes recommendations on due dates based on historical data.
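
Fujitsu didn’t describe the scoring behind these recommendations, so the following is only a minimal sketch of the idea. It assumes a hypothetical `Candidate` record holding a person’s past completion times for this task type and a count of tasks already queued for them. People are ranked by median past duration plus a workload penalty, and a due date is suggested from historical durations padded by a slack factor. The weights and names are invented for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from statistics import median
from typing import List


@dataclass
class Candidate:
    """Hypothetical record; not an Interstage data structure."""
    name: str
    past_durations: List[float]  # days taken on earlier instances of this task type
    open_tasks: int              # tasks already assigned to this person


def score(c: Candidate, workload_weight: float = 0.5) -> float:
    """Lower is better: typical past duration plus a penalty per queued task."""
    if not c.past_durations:
        return float("inf")  # no history for this task type, so rank last
    return median(c.past_durations) + workload_weight * c.open_tasks


def recommend(candidates: List[Candidate]) -> Candidate:
    return min(candidates, key=score)


def suggest_due_date(start: date, history: List[float], slack: float = 1.2) -> date:
    """Median historical duration, padded by a slack factor, rounded to whole days."""
    return start + timedelta(days=round(median(history) * slack))


alice = Candidate("alice", [2.0, 3.0, 2.5], open_tasks=4)  # fast, but busy
bob = Candidate("bob", [3.0, 3.5, 4.0], open_tasks=0)      # slower, but free
print(recommend([alice, bob]).name)                    # → bob
print(suggest_due_date(date(2009, 6, 1), [2.5, 3.5]))  # → 2009-06-05
```

Blending the two signals is what separates this from a static skills matrix: the fastest person on paper loses out once their queue is long enough.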

The key functionality for what Fujitsu is calling “dynamic BPM” is the ability for a process participant to add subtasks at a point in the process, or to create an entirely new process by specifying the tasks involved. This allows a process participant to stretch the process to fit their needs by creating one or more subtasks from any task that is assigned to them, specifying a task name and description, assigning it to one or more users, and specifying a priority and due date. Control is passed to the subtask(s), then returned to the calling task when all subtasks are completed, after which the process can continue on its previously defined structured path. The status of each subtask is shown along with the task status, which provides the necessary transparency and auditing. The big problem with the way ad hoc tasks are handled now is that users typically just send an email or make a phone call to involve another person, and that deviation from the structured process is never captured.
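
To make that control flow concrete, here is a minimal sketch of a task that spawns ad hoc subtasks. The `Task` class, its fields and the audit strings are hypothetical, not Interstage’s API. The calling task can proceed only once all of its subtasks are done, and each subtask creation is logged so that the deviation stays visible instead of disappearing into email.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Task:
    """Hypothetical model of a task that can spawn ad hoc subtasks."""
    name: str
    assignees: List[str]
    priority: int = 3
    due: Optional[str] = None
    done: bool = False
    subtasks: List["Task"] = field(default_factory=list)
    audit: List[str] = field(default_factory=list)  # record of ad hoc deviations

    def add_subtask(self, name: str, assignees: List[str],
                    priority: int = 3, due: Optional[str] = None) -> "Task":
        """Create a subtask and log it in this task's audit trail."""
        sub = Task(name, assignees, priority, due)
        self.subtasks.append(sub)
        self.audit.append(f"added subtask '{name}' for {', '.join(assignees)}")
        return sub

    def can_proceed(self) -> bool:
        """Control returns to this task only once every subtask is complete."""
        return all(s.done for s in self.subtasks)

    def status(self) -> str:
        finished = sum(s.done for s in self.subtasks)
        return f"{self.name}: {finished}/{len(self.subtasks)} subtasks complete"


review = Task("Review claim", ["ana"])
check = review.add_subtask("Check policy terms", ["ben"], priority=1)
quote = review.add_subtask("Get repair estimate", ["cy", "dee"], due="2009-06-10")
check.done = True
print(review.status())       # → Review claim: 1/2 subtasks complete
print(review.can_proceed())  # → False
```

Because the subtasks are plain siblings with no ordering, this also reflects the absence of routing between them.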

A user can also create an entirely new process dynamically: they just give it a name, description, priority and due date, then add subtasks to that process in the same way as adding an ad hoc subtask to a structured process. There is no routing or flow management, however, in either dynamic task creation scenario: subtasks are independent of each other and run in parallel, and the calling task (or dynamic process) waits for all subtasks to complete before proceeding. The recipient of a subtask can further divide it into more subtasks and assign them as they see fit. The expected use case for a completely dynamic process, then, is for one person to create subtasks for the high-level activities and assign them, then have the recipients of those subtasks create whatever subtasks they need to complete the block of work assigned to them. If you’re in an environment where the activities don’t have dependencies, this would work well; however, if there are dependencies between the subtasks, they would have to be coordinated manually.
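
Because recipients can keep subdividing their work, a completely dynamic process becomes a tree of work items. A minimal sketch, assuming a hypothetical nested-dict representation (`name`, `assignee`, `done`, `subtasks`), of listing the leaf items the process is still waiting on. Siblings carry no ordering, which mirrors the parallel, dependency-free behavior described above.

```python
from typing import Iterator, Tuple


def outstanding(item: dict, path: Tuple[str, ...] = ()) -> Iterator[Tuple[str, str]]:
    """Yield (path, assignee) for every unfinished leaf in a dynamic process tree."""
    here = path + (item["name"],)
    children = item.get("subtasks", [])
    if not children:
        if not item.get("done", False):
            yield " / ".join(here), item["assignee"]
        return
    # A subdivided item waits on all of its children, in no particular order.
    for child in children:
        yield from outstanding(child, here)


# Hypothetical dynamic process: dana splits the work, eli splits it further.
process = {
    "name": "Launch campaign", "assignee": "dana",
    "subtasks": [
        {"name": "Copy", "assignee": "eli", "subtasks": [
            {"name": "Draft", "assignee": "eli", "done": True},
            {"name": "Legal review", "assignee": "fay"},
        ]},
        {"name": "Artwork", "assignee": "gus", "done": True},
    ],
}
print(list(outstanding(process)))
# → [('Launch campaign / Copy / Legal review', 'fay')]
```

The process can resume only when this list is empty. Nothing here would stop “Legal review” from starting before “Draft” finishes, which is exactly the manual coordination gap.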

If you need more flow control in your processes, you can step up to the Process Outline tool, which is intended for non-technical process analysts and business users. It shows the tasks in a tabular representation with timelines, and allows the creation of dependencies between the tasks. It wasn’t clear, however, how much control this offers, or how well it interoperates with the simpler subtask creation method.
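
Plain subtasks all run in parallel. The sketch below shows, in general terms, what declaring dependencies adds: tasks are grouped into stages that can start once their predecessors finish. It uses Python’s standard `graphlib.TopologicalSorter` (Python 3.9+). The outline is hypothetical, and this is not a claim about how Process Outline actually schedules tasks.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical outline: each task maps to the set of tasks it depends on.
outline: Dict[str, Set[str]] = {
    "Gather requirements": set(),
    "Draft contract": {"Gather requirements"},
    "Price quote": {"Gather requirements"},
    "Customer sign-off": {"Draft contract", "Price quote"},
}


def stages(deps: Dict[str, Set[str]]) -> List[List[str]]:
    """Group tasks into stages; tasks within a stage do not depend on each other."""
    ts = TopologicalSorter(deps)
    ts.prepare()  # also raises CycleError if the dependencies are circular
    result = []
    while ts.is_active():
        ready = sorted(ts.get_ready())  # every task whose predecessors are done
        result.append(ready)
        ts.done(*ready)
    return result


for i, stage in enumerate(stages(outline), 1):
    print(i, stage)
# → 1 ['Gather requirements']
# → 2 ['Draft contract', 'Price quote']
# → 3 ['Customer sign-off']
```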

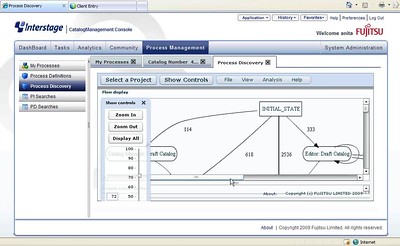

The really cool thing, however, is what happens behind the scenes with these dynamic processes during execution: the automated discovery engine, which is now part of the analytics, tracks all the ad hoc subtasks and can suggest process improvements based on how the process was actually executed, including the user-created subtasks, rather than how it was originally designed. Just as with the desktop application, this bit of Flash lets you see how many times each path in the process was traversed, and dial it back so that only the most common paths are shown. I think that Fujitsu has done some very interesting things with their process discovery tool – which they can use on the system logs of pretty much any system, not just a BPM system – and integrating it into their BPM suite is a natural fit. Combined with dynamic subtask creation, this lets you see how a process really executes, rather than how your process analyst thinks it works.
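
Fujitsu didn’t detail its discovery algorithm, but a common starting point in process mining is to count how often each activity is directly followed by another across all logged instances, then hide the rarely traversed transitions. A minimal sketch with a hypothetical event log (one list of activity names per instance, ad hoc subtasks included), where the “dial” is modeled as a hypothetical `min_share` threshold relative to the busiest transition:

```python
from collections import Counter
from typing import Dict, List, Tuple

Edge = Tuple[str, str]

# Hypothetical event log: activities per process instance, in execution order.
# "Ask legal" stands in for a user-created ad hoc subtask.
log = [
    ["Receive", "Review", "Approve"],
    ["Receive", "Review", "Ask legal", "Approve"],
    ["Receive", "Review", "Approve"],
    ["Receive", "Reject"],
]


def transition_counts(traces: List[List[str]]) -> Counter:
    """Count how often each activity is directly followed by another."""
    counts: Counter = Counter()
    for trace in traces:
        counts.update(zip(trace, trace[1:]))
    return counts


def common_paths(counts: Counter, min_share: float) -> Dict[Edge, int]:
    """Keep transitions taken at least min_share times as often as the busiest one."""
    if not counts:
        return {}
    top = max(counts.values())
    return {edge: n for edge, n in counts.items() if n >= min_share * top}


counts = transition_counts(log)
print(common_paths(counts, 0.5))
# → {('Receive', 'Review'): 3, ('Review', 'Approve'): 2}
```

Raising `min_share` toward 1.0 leaves only the dominant path; lowering it toward 0 brings back every ad hoc detour.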

There are some other collaborative features highlighted in this version: discussion threads on process instances (really just a nicely formatted comment feature; it would be nice to add tags here to allow searching the history based on the text within process instance discussions), and wiki pages within the community for process documentation. The community portal pages can also link to external portals such as MyYahoo and incorporate a feed such as a Twitter stream. Users can also get an RSS feed of their tasks, which allows them to consume their tasks in a different interface if they don’t want to use the Interstage BPM portal.
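
Since the task list is exposed as RSS, any feed reader or small script can consume it. A minimal sketch using Python’s standard `xml.etree.ElementTree`: the feed below is invented (only the element names follow RSS 2.0), and in practice you would fetch it from whatever feed URL the portal provides rather than embedding it.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

# Hypothetical task feed: RSS 2.0 element names, invented titles and URLs.
FEED = """<rss version="2.0"><channel><title>My tasks</title>
<item><title>Approve invoice 1042</title><link>http://example.com/task/1</link></item>
<item><title>Review contract draft</title><link>http://example.com/task/2</link></item>
</channel></rss>"""


def tasks_from_feed(xml_text: str) -> List[Tuple[str, str]]:
    """Return (title, link) for each <item> in an RSS 2.0 document."""
    root = ET.fromstring(xml_text)
    return [(item.findtext("title", ""), item.findtext("link", ""))
            for item in root.iter("item")]


for title, link in tasks_from_feed(FEED):
    print(f"{title} -> {link}")
```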

A few other vendors are starting to think about processes as projects, and Fujitsu has added some of this to Interstage as well, allowing a process to be viewed as phases and milestones – although not, from what I saw, in a standard Gantt chart representation that makes the critical path easy to see – so that you can check which milestones were met or missed.
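
The critical path that a Gantt view would make obvious is simply the longest chain of dependent phases. A minimal sketch, assuming hypothetical phase durations in days and predecessor sets, that computes it with a forward pass over a topological order (again using the standard `graphlib` module):

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set, Tuple

# Hypothetical phase plan: durations in days and each phase's predecessors.
durations: Dict[str, int] = {"Design": 5, "Build": 10, "Docs": 3, "Test": 4, "Release": 1}
depends_on: Dict[str, Set[str]] = {
    "Design": set(),
    "Build": {"Design"},
    "Docs": {"Design"},
    "Test": {"Build"},
    "Release": {"Test", "Docs"},
}


def critical_path(durations: Dict[str, int],
                  depends_on: Dict[str, Set[str]]) -> Tuple[int, List[str]]:
    """Return (total days, phases) for the longest chain of dependent phases."""
    finish: Dict[str, int] = {}  # earliest finish day of each phase
    via: Dict[str, str] = {}     # predecessor that determines each phase's start
    # static_order() yields every phase after all of its predecessors.
    for phase in TopologicalSorter(depends_on).static_order():
        preds = depends_on.get(phase, set())
        start = 0
        if preds:
            latest = max(preds, key=lambda p: finish[p])
            start = finish[latest]
            via[phase] = latest
        finish[phase] = start + durations[phase]
    # Walk back from whichever phase finishes last.
    end = max(finish, key=lambda p: finish[p])
    path = [end]
    while path[-1] in via:
        path.append(via[path[-1]])
    return finish[end], path[::-1]


print(critical_path(durations, depends_on))
# → (20, ['Design', 'Build', 'Test', 'Release'])
```

A slip in any phase on that path (here, "Docs" is the only phase off it) moves the final milestone, which is why a view that highlights it makes missed milestones easier to anticipate.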

They’ve added some new dashboard and analytics features, too, but the big win for Fujitsu in this version is the combination of ad hoc task creation and automated process discovery.