On August 31, camunda released camunda BPM platform 7.0 (community open source and enterprise editions), the first major release of the software since it was forked from the Activiti project in March, although there were nine community releases between the fork and the release of 7.0. I had a couple of briefings with Daniel Meyer, the project lead, in the period following the release, and promised that I’d actually get this post written in time for Christmas. 🙂

The 7.0 release contains a significant amount of new code, but their focus remains the same: a developer-friendly BPM platform rather than a tool positioned for use by end users or non-technical analysts. As I discussed in a recent webinar, BPMS have become model-driven application development environments, so a BPMS positioned explicitly for developers serves a large market segment, especially for complex core processes.

The basic tasklist and other modeler and runtime features are mostly unchanged in this version, but there are big changes to the engine and to Cockpit, the technical process monitoring/administration module. Here’s what’s new:

Cockpit:

- Inspect/repair process instances, including retrying failed service calls.

- Create instance variables at runtime, and update variable values.

- Reassign human activities.

- Send a link directly to a specific instance or view.

- Create a business key corresponding to a line-of-business system variable, providing a fast and easy way to search on LOB data.

- Extensible via third-party plug-ins. The aim with Cockpit is to solve 80% of use cases, then allow plug-ins from consulting partners and customers to handle the remainder; they provide full instructions on how to develop a Cockpit plug-in.

- Add tabs to the detailed views of a process instance, e.g., a link to LOB or other external data.

Engine:

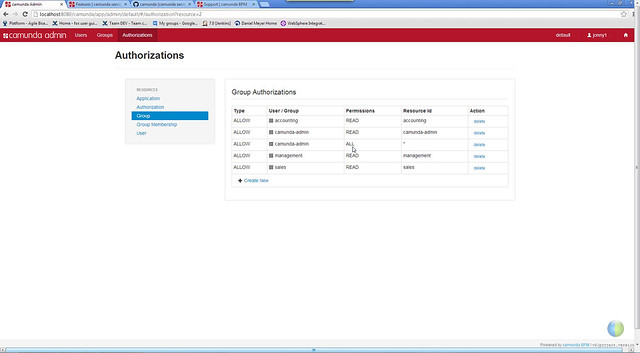

- A new authorization framework (also manifesting in admin capabilities for users/groups/authorizations): this is a preview feature in 7.0, supporting only application, group, and group membership authorization. In the future, this will be expanded to include process definition and instance authorization. Users can be maintained in an internal camunda database or using a direct link to LDAP.

- A complete rewrite of the history/audit log, splitting the history and runtime databases, which is potentially a huge performance booster. Updates to the history are triggered from events on running instances, whereas previously, writing history records required querying and updating existing records for that instance. The history log can be redirected to a database that is shared by multiple process engines; since some amount of the Cockpit monitoring is done on the history database, this makes it easier to consolidate monitoring of multiple process engines if the history logs are redirected to the same database. The logs can also be written directly to an external database based on the new history event stream API. Writes to the history log are asynchronous, which also improves performance. At the time of release, they were seeing preliminary benchmarks of 10-20% performance improvement in the process engine, and a significant reduction in the runtime database index size.

- There is some increase in the coverage of the BPMN 2.0 standard; their reference document shows supported elements in orange, with a link from each element to its description and usage, including code snippets where appropriate. Data objects/stores are still not supported, nor are about half of the event types, but their track record is similar to that of most vendors in this regard.
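To make the history rewrite described above a bit more concrete, here is a minimal sketch of the general pattern: running instances fire events, a handler appends them to a history log without reading existing rows, and the write happens off the engine's own thread. It also shows two engines pointed at one shared log. Every name in it (`HistoryEvent`, `HistoryEventHandler`, `InMemoryHistoryLog`, `Engine`) is my own invention for illustration and is not camunda's actual history event stream API; the in-memory list simply stands in for a history database.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Illustrative only: every type here is hypothetical, not camunda's API.
class HistoryEventSketch {

    /** Immutable record of something that happened in a running instance. */
    public static final class HistoryEvent {
        public final String engineName;
        public final String processInstanceId;
        public final String activityId;
        public final String eventType; // e.g. "start" or "end"

        public HistoryEvent(String engineName, String processInstanceId,
                            String activityId, String eventType) {
            this.engineName = engineName;
            this.processInstanceId = processInstanceId;
            this.activityId = activityId;
            this.eventType = eventType;
        }
    }

    /** Hypothetical extension point: receives each event as it is fired. */
    public interface HistoryEventHandler {
        void handleEvent(HistoryEvent event);
    }

    /** Stand-in for a history database that several engines can share. */
    public static final class InMemoryHistoryLog implements HistoryEventHandler {
        private final List<HistoryEvent> rows = new ArrayList<>();

        @Override
        public synchronized void handleEvent(HistoryEvent event) {
            rows.add(event); // append only: no query or update of earlier rows
        }

        public synchronized List<HistoryEvent> rows() {
            return new ArrayList<>(rows); // defensive copy for readers
        }
    }

    /** Stand-in for a process engine that emits history events. */
    public static final class Engine {
        private final String name;
        private final HistoryEventHandler handler;
        // A single background thread keeps history writes off the caller's thread.
        private final ExecutorService historyWriter = Executors.newSingleThreadExecutor();

        public Engine(String name, HistoryEventHandler handler) {
            this.name = name;
            this.handler = handler;
        }

        public void fire(String processInstanceId, String activityId, String eventType) {
            HistoryEvent event = new HistoryEvent(name, processInstanceId, activityId, eventType);
            historyWriter.submit(() -> handler.handleEvent(event)); // asynchronous write
        }

        /** Stops accepting events and waits briefly for pending writes to finish. */
        public void shutdown() {
            historyWriter.shutdown();
            try {
                historyWriter.awaitTermination(5, TimeUnit.SECONDS);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
    }

    public static void main(String[] args) {
        InMemoryHistoryLog sharedLog = new InMemoryHistoryLog();
        Engine engineA = new Engine("engine-a", sharedLog);
        Engine engineB = new Engine("engine-b", sharedLog);

        engineA.fire("inst-1", "approveOrder", "start");
        engineA.fire("inst-1", "approveOrder", "end");
        engineB.fire("inst-2", "shipOrder", "start");

        engineA.shutdown();
        engineB.shutdown();
        System.out.println(sharedLog.rows().size() + " history events recorded");
    }
}
```

Because the log only ever appends, a monitoring tool reading it never competes with the engine for row updates, which is the intuition behind consolidating several engines onto one history database.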

Version 7.0 is all open source, but a consolidation release (7.1) is already in alpha and will contain some proprietary administration features in Cockpit not available in the open source version: bulk edit/restart of instances, complex search/filter across instances from different process definitions, and a process-level authorizations UI (although the authorization structure will be built into the common engine). camunda is pretty open about their development, as you might expect from an open source company; you can even read some quite technical discussions about design decisions made in the process engine to improve performance, such as a new Activity Instance Execution Model.

In September, camunda released a cloud application for collaborating on process models, camunda share. This is not a full collaborative authoring environment, but a place to upload, view and discuss process models. The camunda team created it during their “ShipIt-Day”, where they are tasked with creating something awesome within 24 hours. There’s no real security: your uploaded model generates a unique URL that you can send to others. It does, however, provide the option to anonymize the process model by removing labels if your process contains proprietary information. It’s a cool little side project that could let you avoid sending around PDFs of your process models for review.

camunda’s business model is based on providing and supporting the enterprise edition of the software, which includes some proprietary functions in Cockpit but is otherwise identical to the community open source edition, along with consulting and training services to help you get started with camunda BPM. They provide a great deal of the effort behind the community edition, while encouraging and supporting platform extensions such as fluent testing, PHP developer support and enterprise integration via Apache Camel.