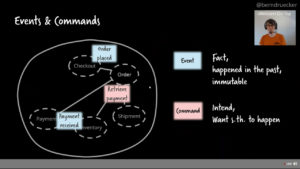

Day 2 of CamundaCon Live kicked off with Camunda co-founder Bernd Rücker talking about microservices orchestration and integration using workflow automation. This is a common theme for him, and I’ve seen earlier versions of this presentation, but he always brings something fresh to the discussion. He discussed reactive applications that are responsive, resilient, elastic and message-driven, then covered different styles of event-driven architecture.

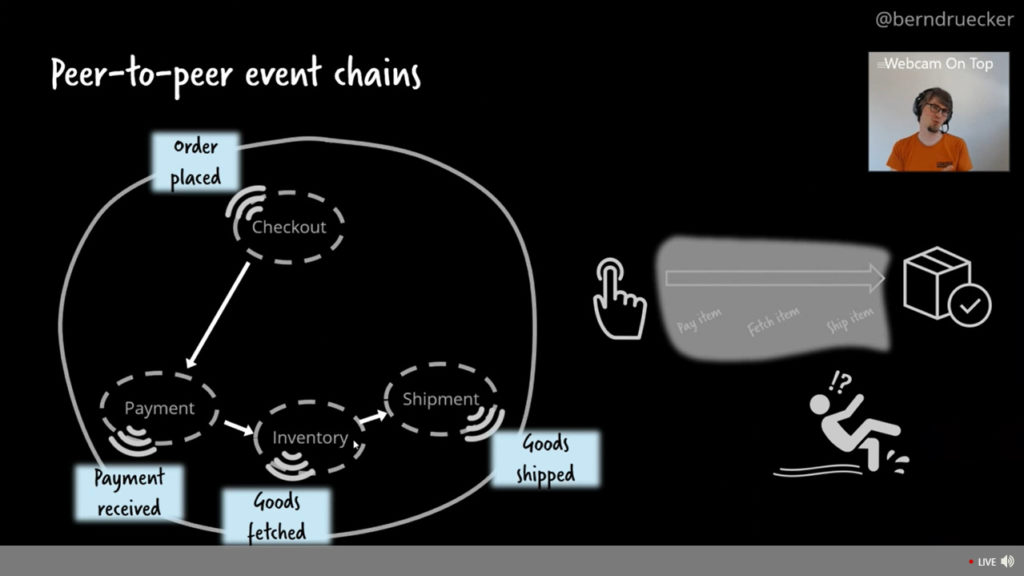

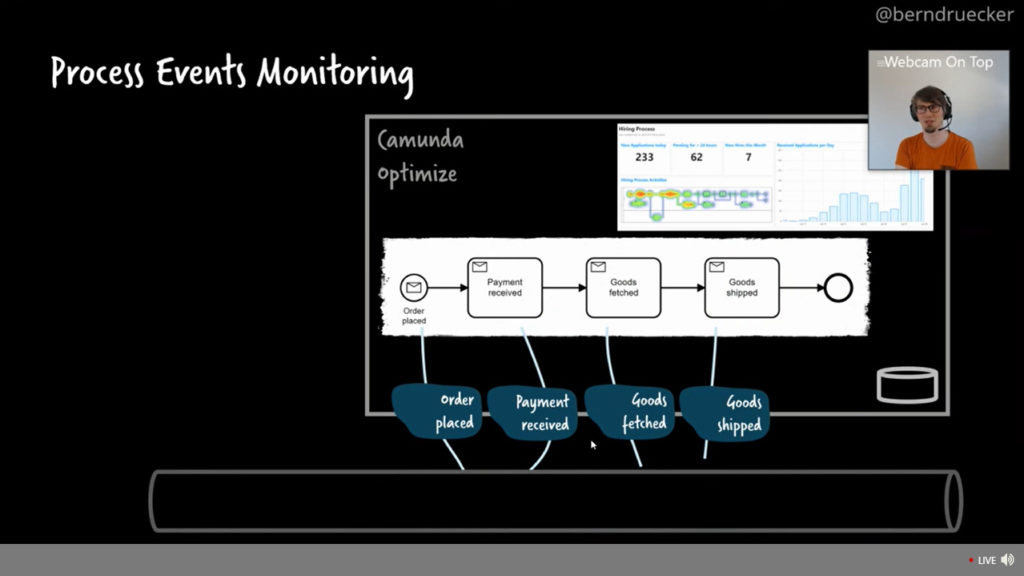

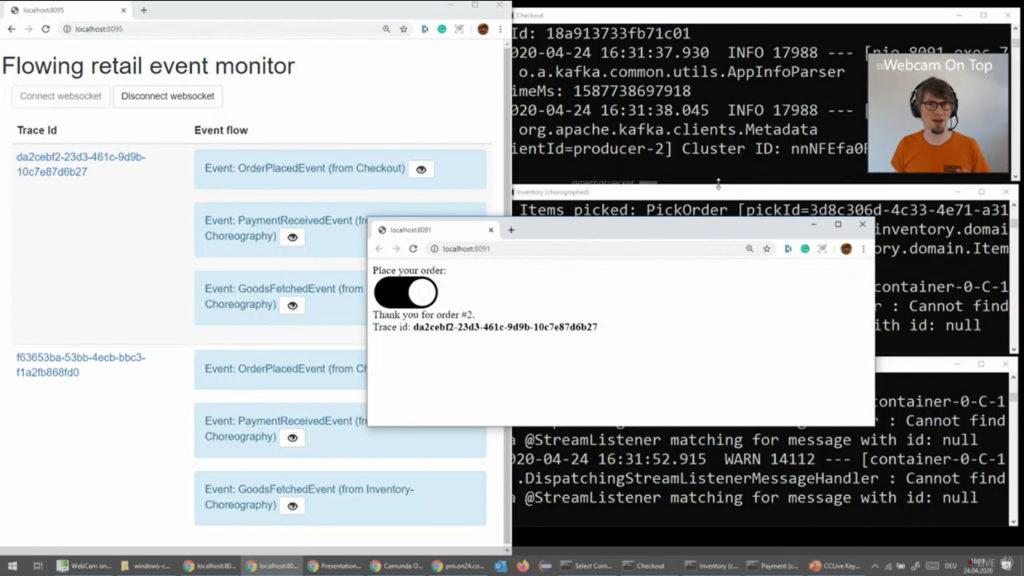

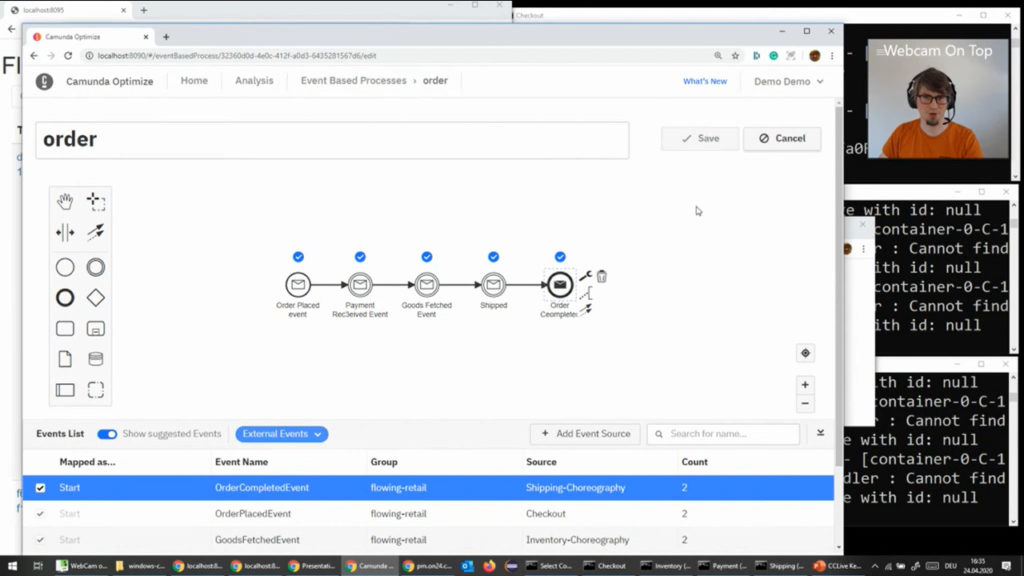

He gave a (live) demo of autonomous services communicating using Kafka, and showed the issue with peer-to-peer choreography: nothing has the end-to-end view needed to ensure that all services that should have run actually did run. He created an event-based process in Camunda Optimize that modeled the expected end-to-end process and, by connecting that to the Kafka messages, had a visualization of the workflow that he had defined. This showed what happens when one service isn’t running: effectively, the virtual workflow is stuck at the previous service, since it never receives a message indicating that the (stopped) service has picked up the messages.
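To make the monitoring idea concrete, here is a minimal sketch of checking observed events against an expected end-to-end sequence. It is not how Optimize works internally and it doesn't talk to Kafka: the event names, the `(correlation_id, event_name)` tuple shape and both function names are my own assumptions for illustration.

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical event names for an order flow; the actual topics and
# event names used in the demo are not given in this post.
EXPECTED_STEPS: List[str] = [
    "OrderPlaced",
    "PaymentReceived",
    "GoodsFetched",
    "GoodsShipped",
]


def last_completed_step(events: Iterable[Tuple[str, str]]) -> Dict[str, str]:
    """Map each correlation id to the furthest expected step it reached."""
    progress: Dict[str, int] = {}
    for correlation_id, event_name in events:
        if event_name not in EXPECTED_STEPS:
            continue  # ignore events that are not part of the model
        index = EXPECTED_STEPS.index(event_name)
        # Only advance when the event is the next expected step.
        if index == progress.get(correlation_id, -1) + 1:
            progress[correlation_id] = index
    return {cid: EXPECTED_STEPS[i] for cid, i in progress.items()}


def stuck_instances(events: Iterable[Tuple[str, str]]) -> Dict[str, str]:
    """Return instances that never reached the final step, and where they stopped."""
    final_step = EXPECTED_STEPS[-1]
    return {
        cid: step
        for cid, step in last_completed_step(events).items()
        if step != final_step
    }
```

Feeding it the events for two orders, one of which never gets past payment because the next service is down, would report that order as stuck at `PaymentReceived`: the same picture as the stalled virtual workflow in the demo.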

One solution is to extract the end-to-end responsibility into its own service: really, this implies some level of orchestration via commands rather than purely reacting to events, even if it’s not a completely tightly-coupled workflow. If you use an engine like Camunda to do that top-level orchestration, then you can move the monitoring of the process within that engine (Cockpit rather than Optimize) although it’s likely that anyone using an event-based architecture is going to be looking at an event monitoring system like Optimize as well. You can see his slides below, and the video will be available on the CamundaCon Live hub probably by the time that I publish this post.

The morning session continued with CTO Daniel Meyer on some of the new product capabilities. Camunda’s goal has moved from just being a BPM engine for Java developers to a much broader orchestration platform that can integrate any technology stack and any endpoints.

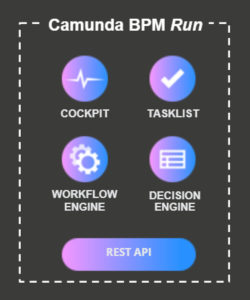

He introduced a new distribution called Camunda Run (or Lil’ Camboot, as Niall Deehan calls it), which provides a lightweight package (50MB) that includes the BPMN and DMN workflow and decision engines, Cockpit, Tasklist and the REST API. It can even be run in headless mode, which disables the web apps, if you just want the engines. It’s OpenAPI enabled, CORS enabled, and SSL enabled out of the box. He gave a quick demo of downloading, starting and running Camunda Run: it’s pretty familiar if you’ve spent any time with Camunda, and it starts fast. From the blog post announcement, Run is aimed at you if at least one of the following is true:

- You need a standalone process engine accessible via REST API

- You don’t have extensive Java knowledge (or none at all) but still want to use Camunda BPM

- You don’t want to configure an application server yourself

- You want to configure everything in one place

- You just want to Run Camunda BPM
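For the first point on that list, this is roughly what "accessible via REST API" looks like from a non-Java client. It's a sketch under assumptions: a Camunda Run instance on `localhost:8080` with the REST API under `/engine-rest`, and a deployed process with the hypothetical key `order-process`. The helper name `build_start_request` is mine, and it only handles string variables.

```python
import json
import urllib.request

# Assumption: a local Camunda Run instance with the REST API at this base path.
BASE_URL = "http://localhost:8080/engine-rest"


def build_start_request(process_key: str, variables: dict) -> urllib.request.Request:
    """Build (but do not send) a request that starts one process instance."""
    payload = {
        "variables": {
            # Every variable is sent as a typed value; this sketch only uses strings.
            name: {"value": str(value), "type": "String"}
            for name, value in variables.items()
        }
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/process-definition/key/{process_key}/start",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

With the engine running, passing the result to `urllib.request.urlopen` would send the request, for example `urllib.request.urlopen(build_start_request("order-process", {"orderId": "A-100"}))`.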

Meyer also talked about Camunda Optimize, specifically the event-based process monitoring. We saw a bit of that yesterday in Felix Müller’s presentation, and I had a more complete view of the event-based features of Optimize a few weeks ago on the 3.0 release webinar. Basically, you add the event source to Optimize (such as Kafka), and Optimize exposes messages and allows them to be attached to the entry/exit points of elements on a BPMN diagram that represents the event-driven process. They are offering a 30-day free trial for Optimize now if you want to try it out.

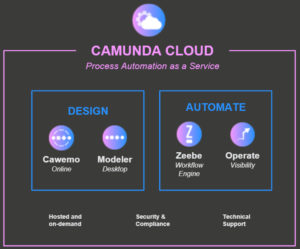

Meyer’s third topic was about process automation as a service via Camunda Cloud, which is powered by Zeebe (rather than Camunda BPM). Having cloud-native Zeebe behind the scenes means that it’s highly scalable and fault-tolerant, and uses pub-sub orchestration to let you include endpoints from anywhere. He demonstrated how to spin up a new Zeebe cluster, then deploy a BPMN model that was created in the Zeebe Modeler and start instances of the process using the zbctl command line. These instances were then visible in Camunda Operate (the Zeebe process monitoring tool), and he ran JavaScript workers and published messages to complete tasks in the process and show the instance progressing through the process model. There’s a free trial for Camunda Cloud, and an early access version for $699/month that includes access to larger clusters and technical support.

He fielded some questions that came up on the Slack workspace during his talk. Moving from an existing Camunda BPM implementation to Camunda Run is apparently as easy as just redirecting to the new application server. You can’t use Java delegates, though, and will have to switch those out for external tasks. There was a question about BPM versus Zeebe, which I think is a question that a lot of Camunda customers have: although most are likely familiar with the technical and functional differences, there is an open question of whether Camunda will continue to support two workflow engines in the future, and whether they are going to shift focus more towards Zeebe use cases.
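For anyone wondering what swapping a Java delegate for an external task involves: instead of the engine calling your code, a worker polls the engine's REST API for work on a topic, does the work, and reports completion. The sketch below only builds the request bodies for the `fetchAndLock` and `complete` calls and leaves out the HTTP plumbing. The worker id, topic name and function names are hypothetical, and the results are limited to string variables.

```python
from typing import Callable, Dict, List, Tuple


def fetch_and_lock_body(worker_id: str, topic: str,
                        lock_ms: int = 10_000, max_tasks: int = 1) -> dict:
    """Body for POST /external-task/fetchAndLock."""
    return {
        "workerId": worker_id,
        "maxTasks": max_tasks,
        "topics": [{"topicName": topic, "lockDuration": lock_ms}],
    }


def complete_body(worker_id: str, result: Dict[str, str]) -> dict:
    """Body for POST /external-task/{id}/complete, with string results only."""
    return {
        "workerId": worker_id,
        "variables": {
            name: {"value": value, "type": "String"}
            for name, value in result.items()
        },
    }


def handle_tasks(tasks: List[dict], worker_id: str,
                 handler: Callable[[dict], Dict[str, str]]) -> List[Tuple[str, dict]]:
    """Run the handler on each locked task; return the (path, body) pairs to POST."""
    calls = []
    for task in tasks:
        # The handler plays the role the Java delegate used to play.
        result = handler(task.get("variables", {}))
        calls.append((f"/external-task/{task['id']}/complete",
                      complete_body(worker_id, result)))
    return calls
```

The business logic lives in the handler function, which can be written in any language that can make HTTP calls; that is the point of the external task pattern.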

The morning finished by breaking out into two tracks; I stayed with the customer presentations rather than the technical breakout to hear some of the case studies. The one that I was most interested in was Fareed Saeed, head of Product and Tools for Advanced Process Solutions at Fidelity Investments, talking about migrating their monolithic legacy BPM to Camunda, in part because I did some early technical architecture consulting with them on their digital process automation platform over a year ago, although I’m not involved at this time. For those of you who know me mostly through this blog and as an independent industry analyst, you may not be aware that the other half of my business is as a consultant to large enterprises, mostly financial services and insurance, on technical architecture and strategy, or anything else to help make their process-centric implementation projects a success.

James Watson of Doculabs, who advised Fidelity on migration strategies, joined him for the discussion. Saeed talked about their current home-built workflow system, which runs thousands of different processes for most of their back office operations, and the need to move away from monolithic architecture and fragile, non-agile systems to a more flexible platform. This talk was not about the architecture or platform, but about the migration planning and execution: a key subject for any large enterprise moving off a legacy platform, but one that is often not fully considered during new digital automation platform implementation.

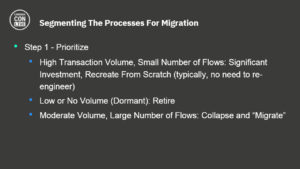

There are a few different strategies for migrating process-based applications, and it’s not the same story for each process. Watson shared his thoughts on this (see the slide at right), but this is my take on it:

- High-volume processes, which usually represent a smaller number of process models but most of the transaction volume, are usually rewritten from scratch while incorporating some degree of re-engineering and process improvement along the way. These are the core business processes that need to be done right, and will most benefit from the more agile and scalable new platform.

- Lower volume processes can be reviewed to see if they’re still required and possibly combined with similar processes, then given a straightforward “lift and shift” rewrite that just duplicates the functionality as is. In short, these aren’t worth the time to do the re-engineering unless there are obvious wins, since the volume is relatively low. These are also candidates for low-code business-led development if that’s available on the automation platform, rather than the professional development teams required for the high-volume transactional processes.

- Very low volume processes can be retired, especially if their functionality can be rolled into processes in one of the first two categories.
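If you wanted to run a first-pass triage over an inventory of process models, the three categories above boil down to a simple rule. This is only a sketch of my take, not Fidelity’s or Doculabs’ method: the volume thresholds are invented for illustration, and the real cut-offs would depend on each organization’s volumes.

```python
from dataclasses import dataclass

# Hypothetical thresholds, in process instances per year.
HIGH_VOLUME = 100_000
VERY_LOW_VOLUME = 100


@dataclass
class ProcessModel:
    name: str
    instances_per_year: int


def migration_strategy(process: ProcessModel) -> str:
    """Suggest a starting migration strategy based on volume alone."""
    if process.instances_per_year >= HIGH_VOLUME:
        return "re-engineer and rewrite"
    if process.instances_per_year <= VERY_LOW_VOLUME:
        return "retire or fold into another process"
    return "review, consolidate, then lift and shift"
```

Volume is just the starting point; a low-volume process that is legally required can’t simply be retired, so the output is a suggestion for review rather than a decision.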

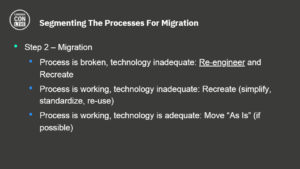

Although they are looking at a “factory model” for some level of automation around the migration, Saeed believes that this is an opportunity to re-engineer the processes rather than just rewriting the same (broken) process on a new platform. They want to have smaller, distributed groups for developing and delivering new applications, which means that they need to have the right governance and standards in place to support a distributed model. He sees the need for early pilots and successes to allow everyone to see how this can work, and learn how to make it successful. A strong diverse team of business leaders is also a plus, since there will be some degree of pain in the business units as the migration happens.

That’s it for the morning of Day 2. They must have read my comments yesterday and actually made sure that we finished on time so that we get our 15-minute lunch break. 🙂 I’ll be back for the afternoon to finish off CamundaCon Live 2020.