The pool at the Bellagio was a big draw, but I’ve kept on track for this afternoon’s presentations, starting with Kathy Long on process notations. She spoke about the necessity of documenting processes, as well as the level of detail to which they should be documented. The current process should only be documented down to a certain level; below that, it’s more likely to be an indeterminate or changeable set of tasks, and the documentation may not even be correct.

She proposes a much simpler, higher-level process model that’s a lot like IDEF0, but she uses Inputs, Guides, Outputs and Enablers (IGOE) instead of IDEF0’s inputs, controls, outputs and mechanisms:

- Input: something that is consumed by or transformed by an activity/process

- Guide: something that determines why, how or when an activity/process occurs but is not consumed

- Output: something that is produced by or results from an activity/process

- Enabler: something (person, facility, system, tools, equipment, asset or other resource) utilized to perform the activity/process
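The four IGOE elements map naturally onto a simple record per activity. The following is a minimal sketch of that idea, not anything shown in the talk: the `Activity` class, the `handoffs` helper and the invoice example data are all hypothetical names made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Activity:
    """One activity/process described by its IGOE elements."""
    name: str
    inputs: list[str] = field(default_factory=list)    # consumed or transformed
    guides: list[str] = field(default_factory=list)    # determine why/how/when; not consumed
    outputs: list[str] = field(default_factory=list)   # produced by the activity
    enablers: list[str] = field(default_factory=list)  # people, systems, tools used


def handoffs(upstream: Activity, downstream: Activity) -> list[str]:
    """Return the outputs of `upstream` that `downstream` consumes as inputs."""
    return [item for item in upstream.outputs if item in downstream.inputs]


# Hypothetical example data, not taken from the talk.
approve = Activity(
    name="Approve invoice",
    inputs=["invoice"],
    guides=["approval policy"],
    outputs=["approved invoice"],
    enablers=["manager", "ERP system"],
)
pay = Activity(
    name="Pay invoice",
    inputs=["approved invoice"],
    guides=["payment terms"],
    outputs=["payment"],
    enablers=["accounts payable clerk", "banking system"],
)

print(handoffs(approve, pay))  # ['approved invoice']
```

Keeping guides separate from inputs makes the distinction in the definitions explicit: the approval policy shapes the activity but is still there afterwards, whereas the invoice is transformed into an approved invoice.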

She looked at some of the problems with other modeling formats; for example, BPMN is easy to learn and communicate and shows cross-functional processes and roles, but it’s difficult to model the involvement of multiple processes, and it’s hard to follow decision threads. In her view, the resulting diagrams end up more as system flows than actual business process models.

She touched on a lot of points for making process models accurate and relevant, such as levels of decomposition, and not modeling events and rules as activities; these are things that tend to happen in BPMN swimlane diagrams, but not in IGOE models. A lot of this, in fact, is about making the distinction between events and activities, and there’s some confusion about this in the audience, too. Most often, what is shown as an activity (box) on a swimlane diagram should actually just be a line between activities, e.g., instead of adding an activity called “send to Accounting”, you should just have a line from the previous activity to the new activity in the Accounting swimlane. Her BPMN is a bit rusty, perhaps, because in BPMN an event would not be modeled as an activity, it would be modeled as an event; instead, she shows a customer example where she used a stoplight icon to indicate an event, although there is an event icon available in BPMN.
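The “send to Accounting” point can be sketched as a small graph in which the handoff is an edge rather than a node. This is only an illustration under my own assumptions: the `Activity` class, the expense-report activities and the `cross_lane_handoffs` helper are hypothetical and do not come from the presentation or from any BPMN tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Activity:
    name: str
    lane: str  # swimlane (role or department) that performs the activity


# Hypothetical activities; the names are made up for illustration.
prepare = Activity("Prepare expense report", lane="Employee")
review = Activity("Review expense report", lane="Accounting")
reimburse = Activity("Issue reimbursement", lane="Accounting")

# Flows are the lines between activities. Note there is no
# "Send to Accounting" activity: the first flow carries that meaning.
flows = [(prepare, review), (review, reimburse)]


def cross_lane_handoffs(flows):
    """Return (source, target) name pairs for flows that cross swimlanes."""
    return [(a.name, b.name) for a, b in flows if a.lane != b.lane]


print(cross_lane_handoffs(flows))
# [('Prepare expense report', 'Review expense report')]
```

Because each activity already records its swimlane, the handoff to Accounting falls out of the flow itself and needs no box of its own.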

Regardless of the notation, however, there are things that you need to consider:

- Understand why you’re modeling processes: documentation, understanding, communication, process optimization.

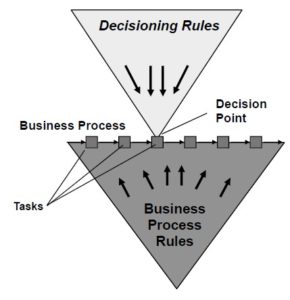

- Simplify the models by removing events and decisions

- Understand the goals in order to set the focus – and determine the critical path – for the process

I’m not sure that I agree with all of what she states about modeling; much of the fault that she finds with BPMN is not about BPMN, but about bad instances of BPMN or bad tools. She has one really valid point, however: most process models created today are just wallpaper, not something that is actually useful for process documentation and optimization.

This is the third year that I’ve heard her speak at BRF, and the message hasn’t changed much from last year or the year before, including the core examples, so it could use a refresh. Also, I think that she needs to get a bit more up to date on some of the technology that touches on process models: she sees the business doing process modeling, then handing it over to IT for implementation (which doesn’t really account for model-driven development), and speaks only fleetingly of “workflow” systems. I realize that many process models are never slated for automation, but more and more are, and process modeling needs to account for that.