I caught up with Jakob Freund and Daniel Meyer of camunda last week in advance of their 7.2 release; with 1,700 person-days of work invested in this April-November release cycle, this includes a new tasklist application, an initial implementation of the Case Management Model and Notation (CMMN) standard, developer accelerators particularly for non-Java developers, and performance and stability improvements. You can hear more about the new release and see a demo in their webinar on Wednesday this week, and read their blog post about it. [Update: you can see the webinar replay here and the slides here, no registration required.]

It’s been interesting to track the progress of camunda and Activiti after camunda forked from the Activiti project in early 2013, since they are targeting slightly different markets but still offer competitive solutions in the open source BPM space. Last week, I wrote about Activiti’s recent release of their BPM Suite, which includes end-user task list and forms interfaces and tools for for “citizen developers” (read: non-hard-core-Java developers); we are seeing some similar themes in the new camunda release, although camunda sticks closer to a true open source model by releasing pretty much all of the code as part of the open source project, while Activiti is making everything except the core engine part of their commercial product.

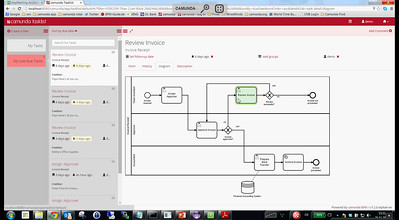

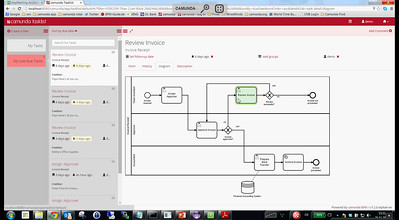

The biggest news of this release is the tasklist app, since this marks a completely new foray by camunda into end-user interfaces that remain part of the open source project. Developed in conjunction with their customer DAB bank, it’s a single-page JavaScript application that is usable out of the box but intended to be developer-friendly for customization. The 3-panel UI (inspired by MS Outlook, although I thought that might not be considered very inspiring by some) shows filters, the task list resulting from the selected filter, and the details of the selected task. Any of the three columns can be collapsed or expanded by the user: you might want to maximize the active task form while completing a task, for example, or collapse the form to see just the filters and task list for quickly sorting or triaging work.

The biggest news of this release is the tasklist app, since this marks a completely new foray by camunda into end-user interfaces that remain part of the open source project. Developed in conjunction with their customer DAB bank, it’s a single-page JavaScript application that is usable out of the box but intended to be developer-friendly for customization. The 3-panel UI (inspired by MS Outlook, although I thought that might not be considered very inspiring by some) shows filters, the task list resulting from the selected filter, and the details of the selected task. Any of the three columns can be collapsed or expanded by the user: you might want to maximize the active task form while completing a task, for example, or collapse the form to see just the filters and task list for quickly sorting or triaging work.

Filters can be created using expressions based on drop-down lists of instance properties, and can be shared for use by other users or groups. Filters can also specify process variables so that those variables are visible in the task list and can be used for searching and sorting, which makes it easier to locate a specific task without having to click on each task to see the details. If permissions allow, other user’s tasks can be included in the task list. During our demo, they pointed out the ability to use keyboard controls to navigate through the task list, something that was suggested by the users: having seen many keyboard-centric users slowed down by having to use a mouse for controlling their screens, this was not a surprise to me, but I think that many software developers don’t think about the needs of the old-school keyboarding users.

Filters can be created using expressions based on drop-down lists of instance properties, and can be shared for use by other users or groups. Filters can also specify process variables so that those variables are visible in the task list and can be used for searching and sorting, which makes it easier to locate a specific task without having to click on each task to see the details. If permissions allow, other user’s tasks can be included in the task list. During our demo, they pointed out the ability to use keyboard controls to navigate through the task list, something that was suggested by the users: having seen many keyboard-centric users slowed down by having to use a mouse for controlling their screens, this was not a surprise to me, but I think that many software developers don’t think about the needs of the old-school keyboarding users.

They have had to improve their APIs to expose more information and functionality to support the tasklist app, which helps developers even if they are not using the tasklist. For the forms interface of an active task, they created the camunda JavaScript Forms SDK to simplify the connection to their REST APIs, allowing HTML form controls to be bound directly to process variables so that the SDK handles the interactions with the process engine as well as type conversions. This can be used for the forms interface even if you replace the tasklist app with your own HTML5 UI, and the SDK optionally integrates with AngularJS for additional features such as form validation. There are some nice page/process-flow control features for tasks: the option to use a Save button as well as Complete, which saves the task in place (with any instance data updates) but does not move it through the process flow; and the ability for a user completing a task to directly access the following task for this instance if it is assigned to them.

The task detail pane of the UI contains more than just the task form: it also has a history tab showing events, due dates and comments; a diagram tab highlighting the current task within the BPMN diagram; and a description tab that I didn’t see, but I assume can contain task instructions.

The task detail pane of the UI contains more than just the task form: it also has a history tab showing events, due dates and comments; a diagram tab highlighting the current task within the BPMN diagram; and a description tab that I didn’t see, but I assume can contain task instructions.

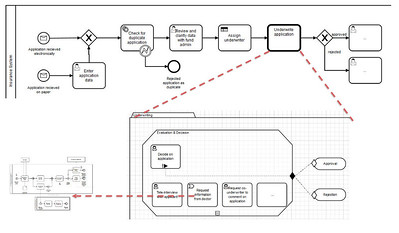

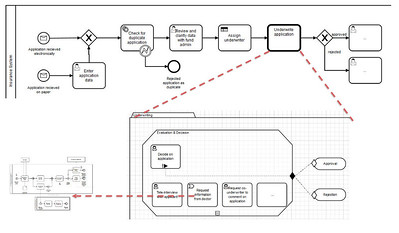

The other new major user-facing functionality is support for CMMN; 2015 is definitely going to be the year when we see the BPM vendors pile on here, and camunda is out in front with this. Like many other BPM vendors, camunda’s BPMN implementation does not support ad hoc activities – arguably, support for events and ad hoc activities provides most of what is required for case management – so they are using the Trisotech CMMN modeler instead of their own modeler, but executing on the same core camunda engine which exposes standard engine features such as REST APIs and scripting. Cases can be instantiated through the API directly by a web form or other event, or can be instantiated using a call from a structured process. In turn, cases can instantiate structured processes through a call from an activity. This covers all of the use cases along the structured/unstructured spectrum: completely pre-defined processes, pre-defined with ad hoc exceptions, ad hoc with pre-defined fragments, and completely ad hoc. Calls from BPMN that instantiate a CMMN case can be synchronous (i.e., wait for completion), or asynchronous.

Case tasks, once instantiated, will appear in the tasklist along with those from BPMN processes; however, note that ad hoc tasks will require some sort of custom UI to allow a user to instantiate them if they are not triggered automatically by events or other tasks. They have nothing on the out of the box tasklist app to do this, although I can envision that they might extend this in the future to allow a case owner or participant to see and trigger ad hoc case tasks.

Case tasks, once instantiated, will appear in the tasklist along with those from BPMN processes; however, note that ad hoc tasks will require some sort of custom UI to allow a user to instantiate them if they are not triggered automatically by events or other tasks. They have nothing on the out of the box tasklist app to do this, although I can envision that they might extend this in the future to allow a case owner or participant to see and trigger ad hoc case tasks.

CMMN is still pretty new, although the concepts of case management have been around for a long time; camunda has some customers testing and providing feedback on their CMMN implementation, and they are expecting requirements and capabilities to emerge as they get more practical experience with it. By providing an open source engine that supports CMMN, they also hope to contribute to the CMMN standard and its use cases in general since others can use their engine to test the standard.

Moving on to some of the developer and non-functional features in the new release, they are positioning their developer-friendly methods against “death by property panel” in zero-code BPM suites. The subject of many conversations amongst BPM practitioners, fully model-driven development BPM suites may not require Java coding but often are suitably complex that they require developer skills to use them; this can frustrate developers who are saddled with inefficient (to them) environments for creating applications. However, not every developer is a hard-core Java coder, and many organizations have access to a middle ground of people with development skills but who want to use lighter-weight languages, often regional IT resources embedded within business units. Where Activiti’s new citizen developer tools leans more to the less-technical, model-driven developer, camunda focuses on developers using scripting languages such as JavaScript, Groovy, Jython and JRuby by allowing these languages to be used in place of the expression language or custom Java code within their implementation of BPMN models, including listeners, script tasks and sequence flow conditions. Wrappers for templates, e.g., XSLT, allow these to be added for composing or mapping data. There’s a new API for implementing connectors, with SOAP and REST connectors included out of the box with 7.2. Finally, Java objects are now serialized in XML/JSON rather than byte streams, allowing other languages – and BPMN processes, via APIs – to introspect and consume them directly without converting to Java objects, and making it possible to easily combine Java with other languages within an implementation.

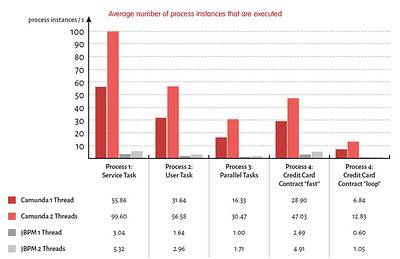

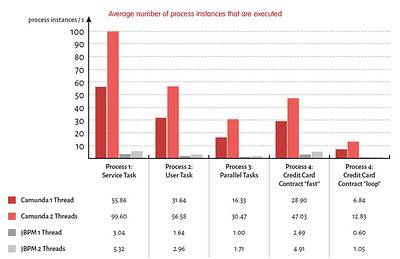

On the execution performance side, camunda likes to distinguish their product within the open source BPM field as being for high-load straight-through processing rather than just manual activities, where they define “high-load” as 10 process instances per second: they’ve been steadily improving execution engine performance since the split and are even working on implementing with alternative storage/computing strategies such as in-memory grids (I jokingly asked if we would see camunda on HANA any time soon). In tests on an earlier 7.x release, they were already 10-30x (that’s times, not percent) faster than Red Hat’s jBPM; 7.2 improves the caching and load balancing, and enhances the asynchronous history logging to allow the level of data logged to be configured by process class and activity. This helps provides the level of scalability that they need for their highest-volume customers, such as telcos, that may be executing more than 1,000 process instances per second.

On the execution performance side, camunda likes to distinguish their product within the open source BPM field as being for high-load straight-through processing rather than just manual activities, where they define “high-load” as 10 process instances per second: they’ve been steadily improving execution engine performance since the split and are even working on implementing with alternative storage/computing strategies such as in-memory grids (I jokingly asked if we would see camunda on HANA any time soon). In tests on an earlier 7.x release, they were already 10-30x (that’s times, not percent) faster than Red Hat’s jBPM; 7.2 improves the caching and load balancing, and enhances the asynchronous history logging to allow the level of data logged to be configured by process class and activity. This helps provides the level of scalability that they need for their highest-volume customers, such as telcos, that may be executing more than 1,000 process instances per second.

The camunda open source community edition is available for download and they will be pushing out updates to their Enterprise subscription customers. Check out their upcoming webinar, or sign up for the webinar and watch the recording later.

Disclosure: camunda has been my customer during 2014 for services including a webinar, white paper and the keynote at their user conference. However, I have not been compensated in any way for researching and writing this review.