For once, I don’t need to travel to see a (mini) vendor conference: Software AG has taken it on the road and is here in Toronto this morning. I wanted to get an update on what’s happening with webMethods since I attended their user conference in Miami last November, and this seemed like a good way to do it. Plus, they served breakfast.

Susan Ganeshan, SVP of Product Management, started the general keynote with a mention of Adabas and Natural, the mainframe (and other platform) database and programming language that drive so many existing business applications, and which were likely the primary concern of many of the people in the room. However, webMethods and CentraSite are critical parts of their future strategy and formed the core of the rest of the keynote; both have version 8 in first-customer-ship state, with general availability before the end of the year.

First, however, she talked about Software AG’s acquisition of IDS Scheer and how ARIS fits into their overall plan, following on today’s press release announcing that Software AG has now acquired 90% of IDS Scheer’s stock, which should lead to a delisting and effective takeover. She discussed their concept of enterprise BPM, which is really just the usual continuous improvement cycle of strategize/discover and analyze/model/implement/execute/monitor and control that we see from other BPMS vendors, but pointed out that whereas Software AG has traditionally focused on the implement and execute parts of the cycle, IDS Scheer covers the other parts in a complementary fashion. The trick, of course, will be to integrate those seamlessly, and hopefully create a shared model environment (my hope, not her words). They are also bringing a process intelligence suite to market, but she gave no details on that yet.

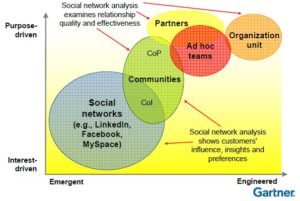

I’m not sure of the audience mix between Adabas/Natural and webMethods customers, but based on her “intro to BPM” slides, I’d guess that it is heavily weighted towards the former, and that the webMethods types are more focused on web services than BPM. She had an interesting message about the changing IT landscape, invoking the current mantra of every vendor presenter these days: the new workforce has radically different expectations about what their computing environment should look like (“why can’t I google for internal documents?”). I completely agree with this message, although I suspect that most companies don’t yet treat it as a high priority, since much of the new workforce is just happy to have a job in this economy.

She discussed the value of CentraSite – or at least of SOA governance – as a way not just to discover services and other assets, but also to understand dependencies and impacts, and to manage the provisioning and lifecycle of assets.

A few of the BPM improvements:

- In another message common to BPMS vendors this week, she talked about their composite application environment, a portal-like dynamic workspace that a user or analyst can create by dragging portlets around, then save and share for reuse. This lessens the need for IT resources for UI development, and also allows users to arrange their workspace the way that works best for them.

- They’ve also added ad hoc collaboration, which allows a process participant to route work to people who are not part of the original process; it’s not clear whether they can add steps or subprocesses to the structured process, or whether this is just a matter of routing the task at its current step to a previously unidentified participant.

- They integrate with Adobe Forms and Microsoft InfoPath, using them for forms-driven processes that use the form data directly.

- They’ve integrated Cognos for reporting and analytics; it sounds like there are some out-of-the-box capabilities that run without additional licensing, but if you want to make changes, you’ll need a Cognos license.

Since webMethods originally focused on B2B and lower-level messaging, she also discussed the ESB product, particularly how it can provide high-speed, highly available messaging services across widespread geographies, with a single operational console across a diverse trading network of messaging servers. There’s a whole host of other improvements to their trading networks, EDI module and managed file transfer functionality; one interesting enhancement is the addition of a BPEL engine to allow these flows to be modeled (and presumably executed) as BPEL.

They have an increased focus on standards: new in version 8 are updates to XPDL and BPEL support, although they’re still only showing BPMN 1.1 support. They also have some new tooling in their Eclipse-based development suite.

She laid out their future vision as follows:

- Today: IT-driven business, with IT designing business processes and business dictating requirements

- 2009 (um…isn’t that today?): collaborative process discovery and design; unified tooling

- 2010: business rules management and event processing; schema conformance

- 2012: personalized, smart-healing processes; centralized command and control for deployment and provisioning

- 2014: business user self-service and broad collaboration without organizational boundaries; elastic and dynamic infrastructure

She finished up with a brief look at AlignSpace for collaborative process discovery; I’m sure that someday, they will approve my request for a beta account so that I can take a closer look at this. 🙂 Beyond process discovery and modeling, however, AlignSpace will also provide a marketplace of resources (primarily services) related to processes in particular vertical industries.

They have a complete fail on both wifi and power here, but I no longer care: my HP Mini has almost six hours of battery life, and my iPhone plan lets me tether the netbook to the iPhone for internet access (at least in Canada).