Happy Cinco de Mayo! I’m back in Orlando for the giant SAP SAPPHIRE NOW and ASUG conference to catch up with the product people and hear about what organizations are doing with SAP solutions. If you’re not here, you can catch the keynotes and some of the other sessions online either in real time or on demand. The wifi is swamped as usual, and my phone dropped from LTE to 3G and on down to Edge before declaring No Service during the keynote. Since I’m blogging from my tablet/keyboard configuration, I didn’t have connectivity at the keynote (hardwired connections are provided for media/analysts, but my tablet doesn’t have a suitable port), so this will be posted sometime after the keynote and the press conference that follows.

We kicked off the 2015 conference with CEO Bill McDermott asking what the past can teach us about the present. Also, a cat anecdote from his days as a door-to-door Xerox salesman, highlighting the need for empathy and understanding in business, in addition to innovation in products and services. From their Run Simple message last year, SAP is moving on to Making Digital Simple, since all organizations have a lot of dark data that could be exploited to make them data-driven and seamless across the entire value chain: doing very sophisticated things while making them look easy. There is a sameness about vendors’ messaging these days around the digital enterprise (data, events, analytics, internet of things, mobile, etc.), but SAP has a lot of the pieces to bridge the data divide, considering that their ERP systems are at the core of so many enterprises and that they have a lot of the other pieces including in-memory computing, analytics, BPM, B2B networks, HR systems and more. Earlier this year, SAP announced S/4HANA: the next generation of their core ERP suite running on the HANA in-memory database and integrating with their Fiori user experience layer, providing a more modular architecture that runs faster, costs less to run and looks better. It’s a platform for innovation because of the functionality and platform support, and it’s also a platform for generating and exposing so much of the data that you need to make your organization data-driven. The HANA Cloud Platform also provides infrastructure for customer engagement, while allowing organizations to run their SAP solutions in on-premise, hybrid and cloud configurations.

SAP continues to move forward with HR solutions, and recently acquired Concur, the company that owns TripIt (an app that I LOVE) as well as a number of other travel planning and expense reporting tools, to better integrate travel-related information into HR management. Like many other large vendors, SAP is constantly acquiring other companies; as always, the key is how well they can integrate these acquisitions into their other products and services, rather than simply adding “An SAP Company” to the banner. Done well, this provides more seamless operations for employees, and also provides an important source of data for analyzing and improving operations.

A few good customer endorsements, but pretty light on content, and some of the new messaging (“Can a business have a soul?”) seemed a bit glib. The Stanley Cup made a short and somewhat superfluous appearance, complete with white-gloved handler. Also, there was a Twitter pool running for how many times the word “simple” was used in the keynote, another indication that the messaging might need a bit of fine-tuning.

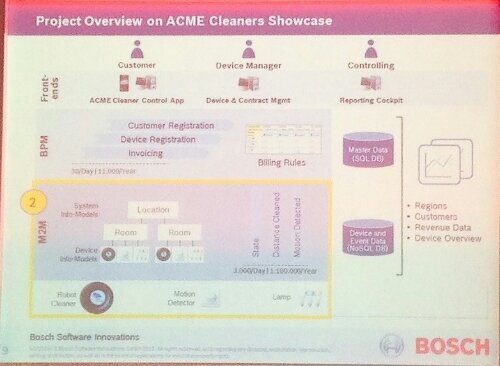

There was a press conference afterwards, where McDermott was joined by Jonathan Becher and Steve Lucas to talk about some other initiatives (including a great SAP Store demo by Becher) and answer questions from press and analysts both here in Orlando and in Germany. There was a question about supporting Android and other third-party development; Lucas noted that HANA Cloud Platform is available now for free to developers as a full-stack platform for building applications, and that there are already hundreds of apps built on HCP that do not necessarily have anything to do with SAP ERP solutions. Building on HCP provides access to other information sources such as IoT data: Siemens, for example, is using HCP for their IoT event data. There’s an obvious push by SAP towards their cloud platform, but even more so towards HANA, either cloud or on-premise: HANA enables real-time transactions and reconciliations, something rarely available in ERP systems, while allowing for far superior analytics and data integration without complex customization and add-ons. Parts of the partner channel are likely a bit worried about this since they exploit SAP’s past platform weaknesses by providing add-on products, customization and services that may no longer be necessary. In fact, an SAP partner that relies on the complexity of SAP solutions by providing maintenance services just released a survey claiming to show a lack of customer interest in S/4HANA. Although this resulted in a flurry of sensational headlines today, the numbers show some adoption and quite a bit of non-committed interest (not bad for three months after release), so the survey starts to look more like an act of desperation. It will be more interesting to ask this question a few quarters from now.

HANA may also be seen as a threat to SAP’s customers’ middle management, who will be increasingly disintermediated as more information is gathered, analyzed and used to automatically generate decisions and recommendations, replacing the manually-collated reports that form the information fiefdoms within many organizations.

Becher and Lucas offered welcome substance as a follow-on to McDermott’s keynote; I expect that we’ll see much more of the product direction details in tomorrow’s keynote with Bernd Leukert.