Earlier this week, I gave a webinar on intelligent business processes, sponsored by Software AG; the slides are embedded below, and you can get a related white paper that I wrote here.

There were a number of questions at the end that we didn’t have time to answer, and I promised to answer them here, so here goes. I have made wording clarifications and grammatical corrections where appropriate.

First of all, here are the questions that we did have time for, with a brief response to each; listen to the replay of the webinar to catch my full answer to those.

- How do you profile people for collaboration so that you know when to connect them? [This was in response to me talking about automatically matching up people for collaboration as part of intelligent processes – some cool stuff going on here with mining information from enterprise social graphs as well as social scoring]

- How complex is it to orchestrate a BPMS with in-house systems? [Depends on the interfaces available on the in-house systems, e.g., web services interfaces or other APIs]

- Are intelligent business processes less dynamic than other business processes? [No; although many intelligent processes rely on an a priori process model, a lot of intelligence can be applied via rules rather than process, so the process can still be dynamic]

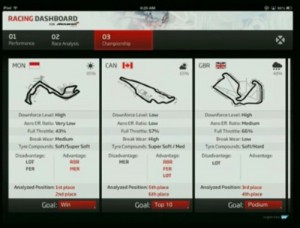

- How do you quantify visibility for management? [I wasn’t completely sure of the intention of this one, but discussed the different granularities of visibility for different personas]

- Where does real-time streaming fit within the Predictive Analytics model? [I see real-time streaming as how we get events from systems, devices or whatever as input to the analytics that, in turn, feed back to the intelligent process]

And here are the ones that we didn’t get to, with more complete responses. Note that I was not reading the questions as I was presenting (I was, after all, busy presenting), so some of them may be referring to a specific point in the webinar and may not make sense out of context. If you wrote the question, feel free to elaborate in the comments below. If something was purely a comment or completely off topic, I have probably removed it from this list, but ping me if you require a follow-up.

There were a number of questions about dynamic processes and case management:

We are treating exceptions more as a normal business activity pattern, called a dynamic business process, to reflect the future business trend [not actually a question, but may have been taken out of context]

How does this work with case management?

I talked about dynamic processes in response to another question; although I primarily described intelligent processes through the concepts of modeling a process, then measuring and providing predictions/recommendations relative to that model, a predefined process model is not necessarily required for intelligent processes. Rules form a strong part of the intelligence in these processes, and even if you don’t have a predefined process, you can measure a process relative to the accomplishment of goals that are aligned with rules rather than a detailed flow model. As long as you have some idea of your goals – whether those are expressed as completing a specific process, executing specific rules or other criteria – and can measure against those goals, then you can start to build intelligence into the processes.
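As a rough illustration of measuring against goals rather than a flow model, here is a minimal Python sketch. It assumes each goal can be written as a rule (a predicate) over a case's current data; the `Goal` class, the `goal_progress` function and the claim-case fields are hypothetical names made up for this example, not any product's API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


# Hypothetical goal: a named rule (predicate) evaluated against a case's current data.
@dataclass(frozen=True)
class Goal:
    name: str
    is_met: Callable[[Dict], bool]


def goal_progress(case: Dict, goals: List[Goal]) -> float:
    """Return the fraction of goals the case currently satisfies; no flow model is needed."""
    if not goals:
        return 0.0
    met = sum(1 for goal in goals if goal.is_met(case))
    return met / len(goals)


# Illustrative goals for a hypothetical insurance claim case.
goals = [
    Goal("documents received", lambda c: c.get("documents_complete", False)),
    Goal("risk assessed", lambda c: "risk_score" in c),
    Goal("decided within 5 days",
         lambda c: c.get("decision") is not None and c.get("days_open", 0) <= 5),
]

case = {"documents_complete": True, "risk_score": 0.3, "days_open": 7, "decision": None}
print(f"{goal_progress(case, goals):.2f}")  # prints 0.67 (2 of 3 goals met)
```

The same measurement applies whether the case follows a predefined flow or is handled ad hoc; the goals, not the flow, are the yardstick.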

Is process visibility about making process visible (documented and communicated) or visibility about operational activities through BPM adoption?

In my presentation, I was mainly addressing visibility of processes as they execute (operational activities), but not necessarily through BPM adoption. The activities may be occurring in any system that can be measured; hence my point about the importance of having instrumentation on systems and their activities in order to have them participate in an intelligent process. For example, your ERP system may generate events that can be consumed by the analytics that monitor your end-to-end process. The process into which we are attempting to gain visibility is that end-to-end process, which may include many different systems (one or more of which may be BPM systems, but that’s not required) as well as manual activities.
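To make the ERP example a little more concrete, here is a minimal Python sketch of stitching events from several systems into one end-to-end view. It assumes every instrumented system emits events carrying a shared correlation key (here a hypothetical `order_id`) and an ISO 8601 timestamp; the event records, field names and `end_to_end_view` function are invented for illustration.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, List

# Hypothetical events emitted by different instrumented systems, including a manual step.
events = [
    {"order_id": "A1", "system": "CRM", "activity": "order captured", "ts": "2024-03-04T09:00"},
    {"order_id": "A1", "system": "ERP", "activity": "credit checked", "ts": "2024-03-04T11:30"},
    {"order_id": "B7", "system": "CRM", "activity": "order captured", "ts": "2024-03-04T14:00"},
    {"order_id": "A1", "system": "manual", "activity": "contract signed", "ts": "2024-03-05T10:00"},
]


def end_to_end_view(events: List[Dict]) -> Dict[str, Dict]:
    """Group events by process instance and report the activity trail and elapsed hours."""
    by_instance = defaultdict(list)
    for event in events:
        by_instance[event["order_id"]].append(event)

    view = {}
    for order_id, instance_events in by_instance.items():
        ordered = sorted(instance_events, key=lambda e: datetime.fromisoformat(e["ts"]))
        start = datetime.fromisoformat(ordered[0]["ts"])
        latest = datetime.fromisoformat(ordered[-1]["ts"])
        view[order_id] = {
            "trail": [f"{e['system']}: {e['activity']}" for e in ordered],
            "elapsed_hours": (latest - start).total_seconds() / 3600,
        }
    return view


for order_id, summary in end_to_end_view(events).items():
    print(order_id, summary["elapsed_hours"], summary["trail"])
```

In practice this correlation would more likely sit in a complex event processing or monitoring component than in a batch function, but the idea is the same: none of these systems needs to be a BPMS for the end-to-end process to be visible.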

Do we have real-world data to show how accurate the predictions from intelligent processes are?

There’s not a simple (or single) answer to this. In straightforward scenarios, predictions can be very accurate. For example, I have seen predictions that make recommendations about staff reallocation in order to handle the current workload within a certain time period; however, predictions such as that often don’t include “wild card” factors such as “we’re experiencing a hurricane right now”. The accuracy of the predictions is going to depend greatly on the complexity of the models used as well as the amount of historical information that can be used for analysis.

What is the best approach when dealing with a cultural shift?

I did the keynote last week at the APQC process conference on changing incentives for knowledge workers, which covers a variety of issues around dealing with cultural shifts. Check it out.

In terms of technology and methodology, how do you compare intelligent processes with the capabilities that process modeling and simulation solutions (e.g., ARIS business process simulator) provide?

Process modeling and simulation solutions provide part of the picture – as I discussed, modeling is an important first step to provide a baseline for predictions, and simulations are often used for temporal predictions – but they are primarily process analysis tools and techniques. Intelligent processes are operational, running processes.

What is the role of intelligent agents in intelligent processes?

Considering the standard definition of “intelligent agent” from artificial intelligence, I think that it’s fair to say that intelligent processes are (or at least border on being) intelligent agents. If you implement intelligent processes fully, they are goal-seeking and take autonomous actions in order to achieve those goals.

Can you please talk about the learning curve for intelligent business processes?

I assume that this is referring to the learning curve of the process itself – the “intelligent agent” – and not the people involved in the process. Similar to my response above regarding the accuracy of predictions, this depends on the complexity of the process and its goals, and the amount of historical data that you have available to analyze as part of the predictions. As with any automated decisioning system, it may be good practice to have it run in parallel with human decision-making for a while in order to ensure that the automated decisions are appropriate, and fine-tune the goals and goal-seeking behavior if not.
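Here is a minimal Python sketch of how that parallel run might be measured: the automated decisions are recorded alongside the human decisions for the same cases, and you track how often they agree and which cases to review. The `compare_shadow_run` function, the case IDs and the decision values are hypothetical; any go-live threshold on the agreement rate would be a business judgment, not something the code decides.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ShadowResult:
    total: int       # cases decided by both the system and a person
    agreements: int  # cases where both made the same decision
    disagreements: List[str] = field(default_factory=list)  # case IDs to review

    @property
    def agreement_rate(self) -> float:
        return self.agreements / self.total if self.total else 0.0


def compare_shadow_run(automated: Dict[str, str], human: Dict[str, str]) -> ShadowResult:
    """Compare automated and human decisions on the cases both have decided."""
    common = sorted(automated.keys() & human.keys())
    disagreements = [case_id for case_id in common if automated[case_id] != human[case_id]]
    return ShadowResult(len(common), len(common) - len(disagreements), disagreements)


automated = {"c1": "approve", "c2": "reject", "c3": "approve", "c4": "refer"}
human = {"c1": "approve", "c2": "approve", "c3": "approve", "c4": "refer", "c5": "reject"}

result = compare_shadow_run(automated, human)
print(result.agreement_rate, result.disagreements)  # prints 0.75 ['c2']
```

The disagreements list is where the fine-tuning happens: each entry is either a case where the goals or rules need adjusting, or one where the person made the wrong call.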

Are there any popular BPM tools in the industry, and any best practices?

Are ERP and CRM solution providers doing anything about it?

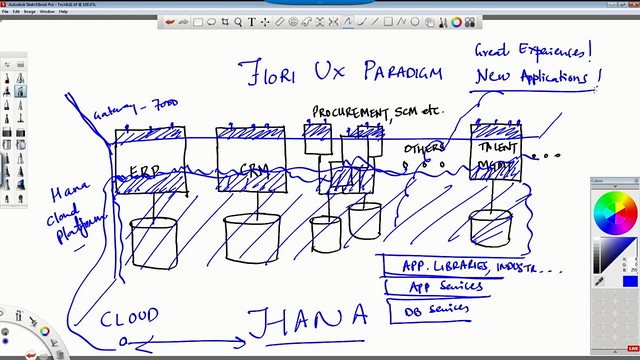

I grouped these together since they’re both dealing with products that can contribute to intelligent processes. It’s fair to say that any BPM system and most ERP and CRM systems could participate in intelligent processes, but are likely not the entire solution. Intelligent processes combine processes and rules (including processes and rules from ERP and CRM systems), events, analytics and (optionally) goal-seeking algorithms. Software AG, the sponsor of the webinar and white paper, certainly have products that can be combined to create intelligent processes, but so do most of the “stack” software vendors that have BPM offerings, including IBM, TIBCO and SAP. It’s important to keep in mind that an intelligent process is almost never a single system: it’s an end-to-end process that may combine a variety of systems to achieve a specific business goal. You’re going to have BPM systems in there, but also decision management, complex event processing, analytics and integration with other enterprise systems. That is not to say that the smaller, non-stack BPM vendors can’t piece together intelligent processes, but the stack vendors have a bit of an edge, even if their internal product integration is lightweight.

How do you quantify the benefits of intelligent business processes in order to get funding?

I addressed some of the benefits on slide 11, as well as in the white paper. Some of the benefits are very familiar if you’ve done any sort of process improvement project: management visibility and workforce control, improved efficiency by providing information context for knowledge workers (who may be spending 10-15% of their day looking for information today), and standardized decisioning. However, the big bang from intelligent processes comes in the ability to predict the future, and avoid problems before they occur. Depending on your industry, this could mean higher customer satisfaction ratings, reduced risk/cost of compliance, or a competitive edge based on the ability for processes to dynamically adapt to changing conditions.

What services and products do you offer for intelligent business processes?

I don’t offer any products (although Software AG, the webinar sponsor, does). You can get a better idea of my services on my website or contact me directly if you think that I can add value to your process projects.

How are Enterprise Intelligent Processes related to Big Data?

If your intelligent process is consuming external events (e.g., Twitter messages, weather data), or events from devices, or anything else that generates a lot of events, then you’ll probably have to deal with the intersection between intelligent processes and big data. Essentially, the inputs to the analytics that provide the intelligence in the process may be considered big data, and may require specific data cleansing and aggregation on the way in. You don’t necessarily have big data with intelligent processes, but one or more of your inputs might be big data.
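As a small illustration of that cleansing and aggregation step, here is a Python sketch that drops malformed events and rolls a high-volume stream up into counts per event type per 15-minute window before anything reaches the analytics. The event fields, the `tumbling_window_counts` function and the window size are assumptions for the example; at real big data volumes this would run on a stream processing platform rather than in a Python loop.

```python
from collections import Counter
from datetime import datetime
from typing import Dict, Iterable, Tuple


def tumbling_window_counts(events: Iterable[Dict], window_minutes: int = 15) -> Counter:
    """Count events per (window start, event type), skipping malformed events.

    Assumes window_minutes divides evenly into 60 so windows align to the hour.
    """
    counts: Counter = Counter()
    for event in events:
        ts, kind = event.get("ts"), event.get("type")
        if not ts or not kind:  # minimal cleansing: skip events missing required fields
            continue
        t = datetime.fromisoformat(ts)
        window_minute = (t.minute // window_minutes) * window_minutes
        window_start = t.replace(minute=window_minute, second=0, microsecond=0)
        key: Tuple[str, str] = (window_start.isoformat(timespec="minutes"), kind)
        counts[key] += 1
    return counts


# Hypothetical external events, e.g. weather alerts and social media mentions.
sample = [
    {"ts": "2024-03-04T09:03", "type": "weather_alert"},
    {"ts": "2024-03-04T09:14", "type": "weather_alert"},
    {"ts": "2024-03-04T09:16", "type": "weather_alert"},
    {"ts": None, "type": "tweet"},  # malformed: no timestamp
    {"ts": "2024-03-04T09:20", "type": "tweet"},
]

for (window, kind), n in sorted(tumbling_window_counts(sample).items()):
    print(window, kind, n)
```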

And my personal favorite question from the webinar:

Humans have difficulty acting in an intelligent manner; isn’t it overreaching to claim processes can be “intelligent”?

I realize that you’re cracking a joke here (it did make me smile), but intelligence is just the ability to acquire and apply knowledge and skills, which are well within the capabilities of systems that combine process, rules, events and analytics. We’re not talking HAL 9000 here.

To the guy who didn’t ask a question, but just said “this is a GREAT GREAT webinar” – thanks, dude. 🙂