Today has a track devoted mostly to cloud computing, and we started with Ndu Emuchay of IBM discussing the cloud computing landscape and the importance of standards. IBM is pretty innovative in many areas of new technology – I’ve blogged in the past about their Enterprise 2.0 efforts, and just this morning saw an article on what they’re doing with the internet of things, where they’re integrating sensors and real-time messaging, much of which would be cloud-based by the nature of the objects to which the sensors are attached.

He started with a list of both business and IT benefits for considering the cloud:

- Cost savings

- Employee and service mobility

- Responsiveness and agility in new solutions

- Allows IT to focus on their core competencies rather than running commodity infrastructure – as the discussion later in the presentation pointed out, this could result in reduced IT staff

- Economies of scale

- Flexibility of hybrid infrastructure spanning public and private platforms

From a business standpoint, users only care that systems are available when they need them, do what they want, and are secure; it doesn’t really matter if the servers are in-house or not, or if they own the software that they’re running.

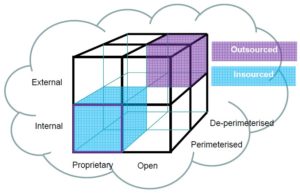

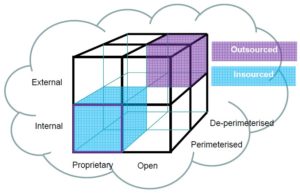

Clouds can range from private, which are leased or owned by an enterprise, to community and public clouds; there’s also the concept of internal and external clouds, although I’m not sure that I agree that anything that’s internal (on premise) could actually be considered cloud. The Jericho Forum (which appears to be part of the Open Group) publishes a paper describing their cloud cube model (direct PDF link):

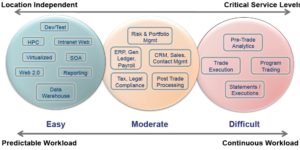

There’s a big range of cloud-based services available now: people services (e.g., Amazon’s Mechanical Turk), business services (e.g., business process outsourcing), application services (e.g., Google Apps), platform services and infrastructure services (e.g., Amazon S3); it’s important to determine what level of services you want to include within your architecture, and the risks and benefits associated with each. This is a godsend for small enterprises like my one-person firm – I use Google Apps to host my email/calendar/contacts, synchronized to Outlook on my desktop, and use Amazon S3 for secure daily backup – but we’re starting to see larger organizations put tens of thousands of users on Google Apps to replace their Exchange servers, and greatly reduce their costs without compromising functionality or security.

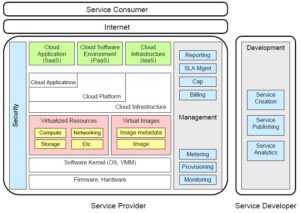

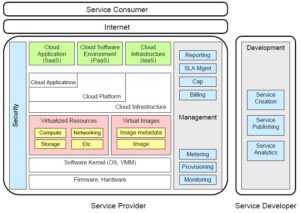

Emuchay presented a cloud computing taxonomy from a paper on cloud computing use cases (direct PDF link) that includes hosting, consumption and development as the three main categories of participants.

There’s a working group, organized using a Google Group, that developed this paper and taxonomy, so join in there if you feel that you can contribute to the efforts.

As he points out, many inhibitors to cloud adoption can be addressed through security, interoperability, integration and portability standards. Interoperability is the ability for loose coupling or data exchange between systems that appear as a black box to each other; integration combines components or systems into an overall system; and portability considers the ease of moving components and data from one system to another, such as when switching cloud providers. These standards impact the five different cloud usage models: end user to cloud; enterprise to cloud to end user; enterprise to cloud to enterprise (interoperability); enterprise to cloud (integration); and enterprise to cloud (portability). He walked through the different types of standards required for each of these use cases, highlighting where there were accepted standards and some of the challenges still to be resolved. It’s clear that open standards play a critical role in cloud adoption.
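To make the portability point a bit more concrete, here is a minimal sketch of my own (not something from the presentation) of what a provider-neutral storage interface might look like. The names `BlobStore`, `InMemoryStore` and `migrate` are hypothetical, and the in-memory class is only a stand-in for a real provider adapter; the idea is simply that if every provider is reached through the same small interface, switching providers becomes a copy loop rather than a rewrite.

```python
from abc import ABC, abstractmethod


class BlobStore(ABC):
    """Hypothetical provider-neutral storage interface (not a real standard)."""

    @abstractmethod
    def put(self, key: str, data: bytes) -> None: ...

    @abstractmethod
    def get(self, key: str) -> bytes: ...

    @abstractmethod
    def keys(self) -> list[str]: ...


class InMemoryStore(BlobStore):
    """Stand-in for one provider; a real adapter would wrap that provider's API."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._blobs[key] = data

    def get(self, key: str) -> bytes:
        return self._blobs[key]

    def keys(self) -> list[str]:
        return sorted(self._blobs)


def migrate(source: BlobStore, target: BlobStore) -> int:
    """Copy every object from one store to another and return the count copied."""
    copied = 0
    for key in source.keys():
        target.put(key, source.get(key))
        copied += 1
    return copied
```

In this sketch, `migrate(old_provider, new_provider)` only depends on the shared interface, which is roughly the role that a portability standard would play between real cloud providers.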