Opening the second conference day, James Taylor and Neil Raden gave a keynote about competing on decisions. First up was James, who started with a definition of what a decision is (and isn’t), speaking particularly about the operational decisions that we often see in the context of automated business processes. He made a good point that your customers react to your business decisions as if they were deliberate and personal to them, when often they’re not. James’ premise is that you should be making these decisions deliberate and personal, providing the level of micro-targeting that’s appropriate to your business (without getting too creepy about it), but that there’s currently a mismatch between what customers want and what most organizations provide.

Decisions have to be built into the processes and systems that manage your business, so although business may drive change, IT gets to manage it. James used the term “orthogonal” when talking about the crossover between process and rules; I used the same expression with him yesterday when we discussed how processes and decisions should not be dependent upon each other. If a decision and a process are interdependent, then you’re likely dealing with a process decision that should be embedded within the process, rather than a business decision.

A decision-centric organization is focused on the effectiveness of its decisions rather than aggregated, after-the-fact metrics; decision-making is seen as a specific competency, and resources are dedicated to making those decisions better.

Enterprise decision management, as James and Neil now define it, is an approach for managing and improving the decisions that drive your business:

- Making the decisions explicit

- Tracking the effectiveness of the decisions in order to improve them

- Learning from the past to increase the precision of the decisions

- Defining and managing these decisions for consistency

- Ensuring that they can be changed as needed for maximum agility

- Knowing how fast the decisions must be made in order to match the speed of the business context

- Minimizing the cost of decisions

Using an airline pilot analogy, James discussed how business executives need a number of decision-related tools to do their job effectively:

- Simulators (what-if analysis), to learn what impact an action might have

- Auto-pilot, so that their business can (sometimes) work effectively without them

- Heads-up display, so they can see what’s happening now, what’s coming up, and the available options

- Controls, simple to use but able to control complex outcomes

- Time, to be able to take a more strategic look at their business

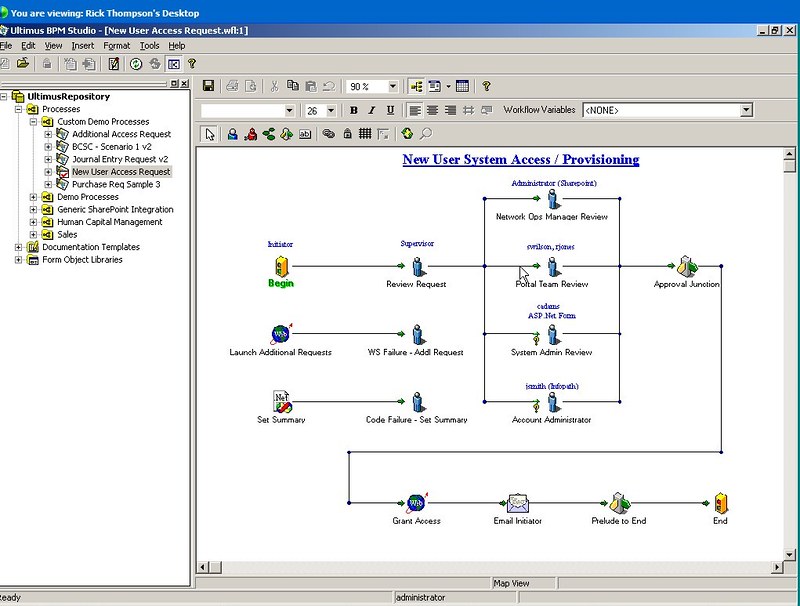

Continuing the pilot analogy, he pointed out that the term dashboard is used in business to really mean an instrument cluster: display, but no control. A true dashboard must include not just a display of what’s happening, but controls that can impact what’s happening in the business. I saw a great example of that last week at the Ultimus conference: their dashboard includes a type of interactive dial that can be used to temporarily change the thresholds that control the process.

James turned the floor over to Neil, who dug further into the agility imperative: rethinking BI for processes. He sees today’s BI tools as insufficient for monitoring and analyzing business processes, because of the agile and interconnected nature of these processes. This comes through in the results of a survey that they did on how often people use related tools: the average number of hours per week that a marketing analyst spends using their BI tool was 1.2, versus 17.4 for Excel, 4.2 for Access and 6.2 for other data administration tools. I see Excel everywhere in most businesses, whereas BI tools are typically only used by specialists, so this result does not come as a big surprise.

The analytical needs of processes are inherently complex, requiring an understanding of the resources involved and process instance data, as well as the actual process flow. Processes are complex causal systems: much more than just that simple BPMN diagram that you see. A business process may span multiple automated (monitored) processes, and may be created or modified frequently. Stakeholders require different views of those processes; simple tactical needs can be served by BAM-type dashboards, but strategic needs — particularly predictive analysis — are not well-served by this technology. This is beyond BI: it’s process intelligence, where there must be understanding of other factors affecting a process, not just measuring the aggregated outcomes. He sees process intelligence as a distinct product type, not the same as BI; unfortunately, the market is being served (or not really served) by traditional query-based approaches against a relatively static data model, or what Neil refers to as a “tortured OLAP cube-based approach”.

What process intelligence really needs is the ability to analyze the timing of the traffic flow within a process model in order to provide more accurate flow predictions, while allowing for more agile process views that are generated automatically from the BPMN process models. The analytics of process intelligence are based on the process logs, not pre-determined KPIs.
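To make the idea of log-based timing analysis a bit more concrete, here is a minimal Python sketch of my own (not something Neil showed). It assumes a hypothetical process log of case id, activity and timestamp, with invented activity names and times, and computes the average time spent on each transition between activities:

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical process log entries: (case id, activity, timestamp).
# The activity names and times are invented for illustration.
LOG = [
    ("c1", "Receive", "2008-01-01T09:00"),
    ("c1", "Review", "2008-01-01T09:30"),
    ("c1", "Approve", "2008-01-01T11:30"),
    ("c2", "Receive", "2008-01-01T10:00"),
    ("c2", "Review", "2008-01-01T11:30"),
    ("c2", "Approve", "2008-01-01T12:30"),
]


def transition_times(log):
    """Return the mean minutes spent on each (from, to) activity transition."""
    # Group events by process instance so each case is analyzed on its own.
    by_case = defaultdict(list)
    for case_id, activity, ts in log:
        by_case[case_id].append((datetime.fromisoformat(ts), activity))

    # Collect the elapsed time between consecutive activities in each case.
    durations = defaultdict(list)
    for events in by_case.values():
        events.sort()  # order each case's events by timestamp
        for (t0, a0), (t1, a1) in zip(events, events[1:]):
            durations[(a0, a1)].append((t1 - t0).total_seconds() / 60)

    return {step: sum(d) / len(d) for step, d in durations.items()}


averages = transition_times(LOG)
for (src, dst), minutes in averages.items():
    print(f"{src} -> {dst}: {minutes:.0f} min on average")
```

The point of the sketch is that the measures fall out of the log itself rather than from a KPI defined in advance; a real process intelligence tool would presumably map these transitions back onto the BPMN model and handle branching, loops and parallel paths, none of which is attempted here.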

Neil ended by tying this back to decisions: basically, you can’t make good decisions if you don’t understand how your processes work in the first place.

It’s interesting that James and Neil deal with two very important aspects of business processes: James covers decisions, and Neil covers analytics. I’ve done presentations in the past on the crossover between BPM, BRM and BI, but they’ve dug into these concepts in much more detail. If you haven’t read their book, Smart Enough Systems, there’s a lot of great material in there on this same theme; if you’re here at the forum, you can pick up a copy at their table at the expo this afternoon.