Roberto Mercadante, SVP of operations and technology at Citibank Brazil, presented a session on their journey with AMX BPM. I also had a chance to talk to him yesterday about their projects, so I have a bit of additional information beyond what he covered in the presentation. They are applying AMX BPM to their commercial account opening/onboarding processes for “mid-sized” companies (between $500M and $1B in annual revenue), a very competitive market in Brazil that requires fast turnaround, especially for establishing credit. As a global company in 160 countries, they are accustomed to dealing with very large multi-national organizations; unfortunately, some of the robust features built for those customers manifest as delays when handling smaller single-country transactions, such as the need to have a unique customer ID generated in their Philippines operation for any account opening. Even for functions performed completely within Brazil, they found that processes created for handling large corporate customers were just too slow and cumbersome for the mid-sized market.

Prior to BPM implementation, the process was very paper-intensive, with 300+ steps to open an account, requiring as many as 15 signatures by the customer’s executives. Because it took so long, the commercial banking salespeople would try to bypass the process by collecting the paperwork and walking it through the operations center personally; this is obviously not a sustainable method for expediting processes, and wasn’t available to those people far from their processing center in Sao Paulo. Salespeople were spending as much as 50% of their time on operations, rather than building customer relationships.

They use an Oracle ERP, but found that it really only handled about 70% of their processes and was not, in the opinion of the business heads, a good fit for the remainder; they brought in AMX BPM to help fill that gap, typically representing localized processes due to unique market needs or regulations. In fact, they really consider AMX BPM to be their application development environment for building agile, flexible, localized apps around the centralized ERP.

When Citi implemented AMX BPM last year (for which they won an award), they were seeking to standardize and automate processes, with the primary goal of reducing cycle time, which could be as long as 40 days. Interestingly, instead of reengineering the entire process, they did some overall modeling and process improvement (e.g., removing or parallelizing steps), but only did a complete rework on activities that would impact their goal of reducing cycle time, while enforcing their regulatory and compliance standards.

A key contributor to reducing cycle time, not surprisingly, was to remove the paper documents as early as possible in the process, which meant scanning documents in the branches and pushing them directly into their IBM FileNet repository, then kicking off the related AMX BPM processes. The custom scanning application included a checklist so that the branch-based salespeople could immediately see which documents they were missing. Because they had some very remote branches with low communications bandwidth, they also had to create some custom store-and-forward mechanisms to defer document transmission to times of low bandwidth usage, although that was eventually retired as their telecom infrastructure was upgraded. I’ve seen similar challenges with some of my Canadian banking customers regarding branch capture, with solutions ranging from using existing multifunction printers to actually faxing in documents to a central operational facility; paper capture still represents some of the hairiest problems in business processes, in spite of the fact that we’re all supposed to be paperless.
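To make the checklist and store-and-forward ideas a bit more concrete, here is a minimal Python sketch. It is purely illustrative and not Citi's implementation: the document types, the `missing_documents` function, the `StoreAndForwardQueue` class, and the `link_is_idle` flag are all hypothetical names that I made up, and the real application pushed documents into FileNet rather than calling a plain Python function.

```python
from collections import deque

# Hypothetical document types; the actual checklist was not described.
REQUIRED_DOCUMENTS = (
    "articles_of_incorporation",
    "tax_registration",
    "signature_card",
)


def missing_documents(scanned, required=REQUIRED_DOCUMENTS):
    """Return the required document types not yet scanned, in checklist order."""
    scanned_set = set(scanned)
    return [doc for doc in required if doc not in scanned_set]


class StoreAndForwardQueue:
    """Hold scanned documents locally and transmit them only when allowed."""

    def __init__(self, send):
        # `send` is any callable that transmits one document (an assumption).
        self._send = send
        self._pending = deque()

    def enqueue(self, document):
        """Store a document locally until the link is available."""
        self._pending.append(document)

    def pending_count(self):
        """Number of documents still waiting to be transmitted."""
        return len(self._pending)

    def flush(self, link_is_idle):
        """Send queued documents in FIFO order if the link is idle.

        Returns the number of documents sent.
        """
        sent = 0
        while link_is_idle and self._pending:
            self._send(self._pending.popleft())
            sent += 1
        return sent
```

A branch application along these lines would call `missing_documents` after each scan to update the salesperson's checklist, and call `flush` on a schedule with `link_is_idle` set by whatever bandwidth policy the branch uses.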

They built BPM analytics in Spotfire (this was prior to the Jaspersoft acquisition; Jaspersoft might have been a better fit for some parts of this) to display a real-time dashboard that identifies operational bottlenecks. They felt strongly about including this from the start, since they needed to be able to show real benefits in order to prove the value of BPM and justify future development. The result: a 70% reduction in their onboarding cycle time within 3 months of implementation, from as much as 40 days down to a best time of about 3 days. It’s likely that they will not be able to reduce it further, since some of that time is spent waiting for customers to provide necessary documentation, although they do all the steps possible even in the absence of some documents so that the process can complete quickly as soon as the documents arrive. They also saw a 90% reduction in standard deviation, since no one was skewing the results by personally escorting documents through the operations center. Their customer rejection rate was reduced by 58%, so they captured a much larger portion of the companies that applied.
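For anyone wanting to track the same kind of numbers, the arithmetic behind a "percent reduction" in cycle time and in standard deviation is simple enough to sketch. This is only an illustration: the function names are hypothetical, any sample data you feed it is your own, and it does not attempt to reproduce Citi's figures or their Spotfire dashboard.

```python
from statistics import mean, pstdev


def percent_reduction(before, after):
    """Percentage by which `after` is lower than `before`."""
    if before == 0:
        raise ValueError("'before' must be non-zero")
    return (before - after) / before * 100.0


def summarize(before_days, after_days):
    """Compare two lists of per-case cycle times, measured in days.

    Returns the percent reduction in the mean and in the population
    standard deviation between the two samples.
    """
    return {
        "mean_reduction_pct": percent_reduction(
            mean(before_days), mean(after_days)
        ),
        "stdev_reduction_pct": percent_reduction(
            pstdev(before_days), pstdev(after_days)
        ),
    }
```

Note that a reduction in the average and a comparison of the worst case to the best case are different measures, so it matters which one a dashboard reports.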

The benefits, however, extended beyond just operational efficiency: it allowed decentralization of some front-office functions and relocation of some back-office operations. This lets them leverage shared services in other Citibank offices, relocate operations to less-expensive locations, and even outsource some operations completely.

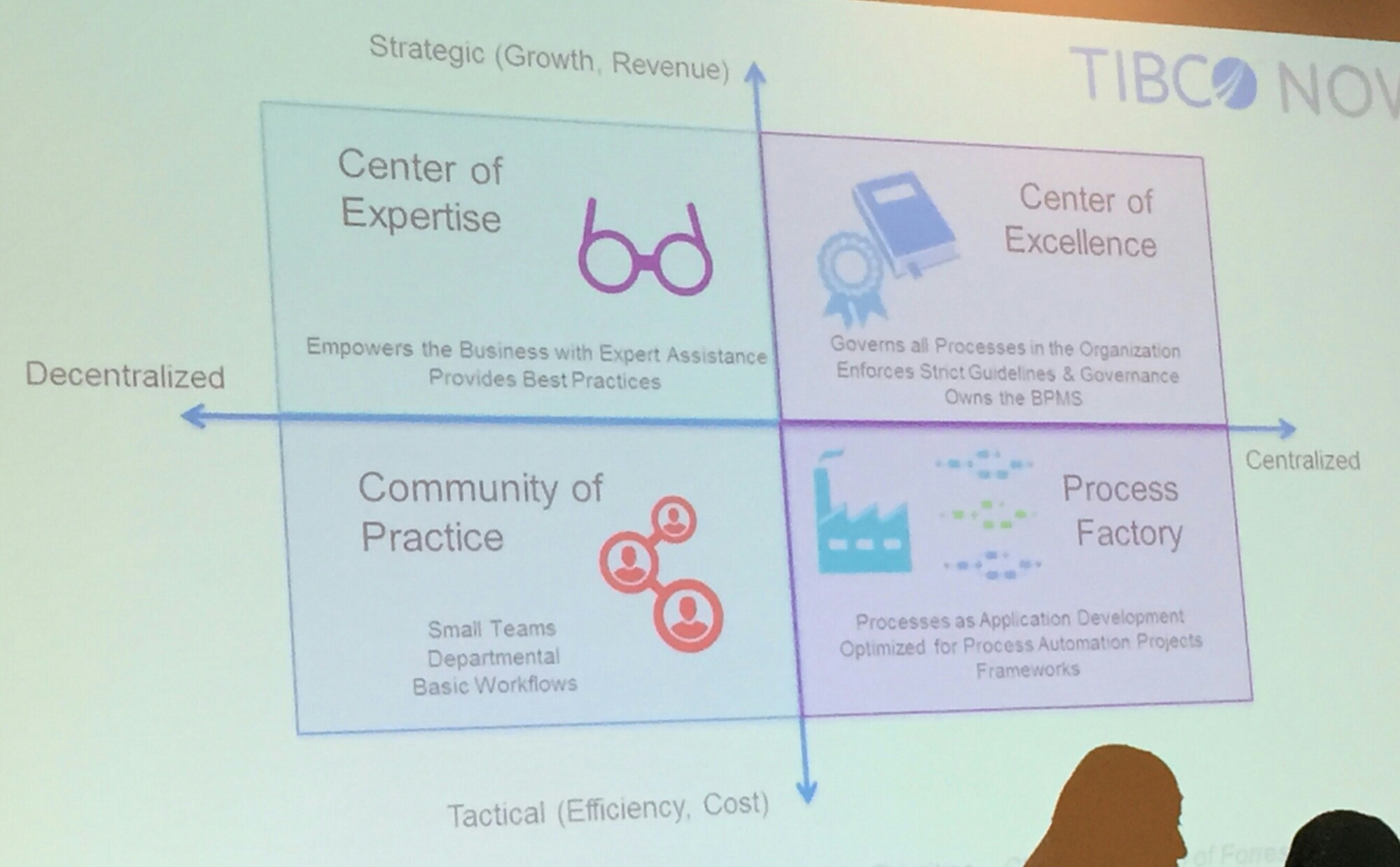

They’re now looking at implementing additional functionality in the onboarding process, including FATCA compliance, mobile analytics, more legacy integration, and ongoing process improvement. They’re also looking at related problems that they can solve in order to achieve the same level of productivity, and considering how they can expand the BPMS implementation practices to support other regions. For this, they need to implement better BPM governance on a global basis, possibly through some center of excellence practices. They plan to do a survey of Citibank worldwide to identify the critical processes not handled by the ERP, and try to leverage some coordinated efforts for development as well as sharing experiences and best practices.

There’s one more breakout slot but nothing catches my eye, so I’m going to call it quits for TIBCO NOW 2014, and head out to enjoy a bit of San Francisco before I head home tomorrow morning. This is my last conference for the year, but I have a backlog of half-written product reviews that I will try to get up here before too long.