Bill Rosser presented a decision framework for identifying when to use BPA (business process analysis), EA (enterprise architecture) and BPM modeling tools for modeling processes: all of them can model processes, but which should be used when?

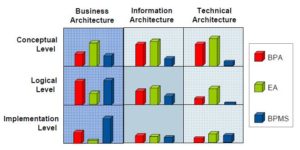

It’s first necessary to understand why you’re modeling your processes, and the requirements for the model: these could be related to quality, project validation, process implementation, as part of a larger enterprise architecture modeling effort and many other reasons. In the land of BPM, we tend to focus on modeling for process implementation because of the heavy focus on model-driven development in BPMS, hence model within our BPMS, but many organizations have other process modeling needs that are not directly related to execution in a BPMS. Much of this goes back to EA modeling, where several levels of process modeling that occur in order to fulfill a number of different requirements: they’re all typically in one column of the EA framework (column 2 in Zachman, hence the name of this blog), but stretch across multiple rows of the framework such as conceptual, logical and implementation.

Different types and levels of process models are used for different purposes, and different tools may be used to create those models. He showed a very high-level business anchor model that shows business context, a conceptual process topology model, a logical process model showing tasks within swimlanes, and a process implementation model that looked very similar to the conceptual model but included more implementation details.

As I’ve said before, introspection breeds change, and Rosser pointed out that the act of process modeling reaps large benefits in process improvement since the process managers and participants can now see and understand the entire process (probably for the first time), and identify problem areas. This premise is what’s behind many process modeling initiatives within organizations: they don’t plan to build executable processes in a BPMS, but model their processes in order to understand and improve the manual processes.

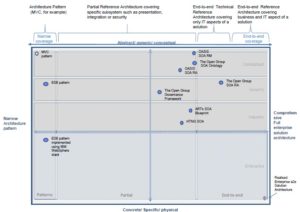

Process modeling tools can come in a number of different guises: BPA tools, which are about process analysis; EA tools, which are about processes in the larger architectural context; BPM tools, which are about process execution; and process discovery tools, which are about process mining. They all model processes, but they provide very different functionality around that process model, and are used for different purposes. The key problem is that there’s a lot of overlap between BPA, EA and BPM process modeling tools, making it more difficult to pick the right kind of tool for the job. EA tools often have the widest scope of modeling and analysis capabilities, but don’t do execution and tend to be more complex to use.

Process modeling tools can come in a number of different guises: BPA tools, which are about process analysis; EA tools, which are about processes in the larger architectural context; BPM tools, which are about process execution; and process discovery tools, which are about process mining. They all model processes, but they provide very different functionality around that process model, and are used for different purposes. The key problem is that there’s a lot of overlap between BPA, EA and BPM process modeling tools, making it more difficult to pick the right kind of tool for the job. EA tools often have the widest scope of modeling and analysis capabilities, but don’t do execution and tend to be more complex to use.

He finished by matching up process modeling tools with BPM maturity levels:

- Level 1, acknowledging operational inefficiencies: simple process drawing tools, such as Visio

- Level 2, process aware: BPA, EA and process discovery tools for consistent process analysis and definition of process measurement

- Levels 3 and 4, process control and automation: BPMS and BAM/BI tools for execution, control, monitoring and analysis of processes

- Levels 5 and 6, agile business structure: simulation and integrated value analysis tools for closed-loop connectivity of process outcomes to operational and strategic outcomes

He advocates using the simplest tools possible at first, creating some models and learning from the experience, then evaluating more advanced tools that cover more of the enterprise’s process modeling requirements. He also points out that you don’t have to wait until you’re at maturity level 3 to start using a BPMS; you just don’t have to use all the functionality up front.