For the first breakout this morning, I attended Gladys Lam’s session on organizing a business rule harvesting project, specifically on how to split up the tasks amongst team members. Gladys does a lot of this sort of work directly with customers, so she has a wealth of practical experience to back up her presentation.

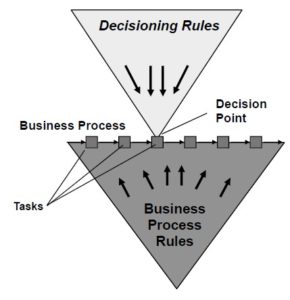

She first looked at the difference between business process rules and decisioning rules, and had an interesting diagram showing how specific business process rules are mapped into decisioning rules: in a BPMS, that’s the point where we would (should) be making a call to a BRMS rather than handling the logic directly in the process model.

The business processes typically drive the rule harvesting efforts, since rule harvesting is really about extracting and externalizing rules from the processes. That means that one or more analysts need to comb through the business processes and determine the rules inherent in those processes. As processes get large and complex, the work needs to be divided up amongst an analyst team. Her recommendations:

- If you have limited resources and there are fewer than 20 rules/decisions per task, divide it up by workflow

- If there are more than 20 rules per task, divide by task

My problem here is that she doesn’t fully define task, workflow and process in this context; I think that “task” is really a “subprocess”, and “workflow” is a top-level process. Moving on:

- If there are more than 50 rules per task, divide by decision point; e.g., a decision about eligibility for auto insurance could be broken down into decision points based on proof of insurance, driving history, insurance risk score and other factors
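
The three thresholds can be read as a simple lookup. Here is a minimal sketch of that reading; the function name `division_strategy` is my own, the treatment of exactly 20 rules is an assumption (the talk only covered "fewer than" and "more than"), and the "limited resources" condition is left out because it was not quantified.

```python
def division_strategy(rules_per_task: int) -> str:
    """Suggest how to split harvesting work, given the rules (or decisions) per task."""
    if rules_per_task < 0:
        raise ValueError("rules_per_task must be non-negative")
    if rules_per_task > 50:
        return "decision point"
    if rules_per_task > 20:
        return "task"
    # The talk said "fewer than 20"; treating exactly 20 the same way is an assumption.
    return "workflow"


for count in (12, 35, 80):
    print(count, "rules per task -> divide by", division_strategy(count))
```

In practice these are rules of thumb rather than hard cut-offs, so the output is a starting point for discussion with the analyst team, not a decision.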

She later also discussed dividing by value chain function and level of composition, but didn’t specify when you would use those techniques.

The key is to look at the product value chain inherent in your process — from raw materials through production, tracking, sales and support — and what decisions are key to supporting that value chain. In health insurance, for example, you might see a value chain as follows:

- Develop insurance product components

- Create insurance products

- Sell insurance products to clients

- Sign up clients (finalize plans)

- Enroll members and dependents

- Take claims and dispense benefits

- Retire products

Now, consider the rules related to each of those steps in the value chain (each rule type corresponds, in order, to the step in the list above):

- Product component rules, e.g., a scheduled payout method must have a frequency and a duration

- Product composition rules, e.g., the product “basic life” must include a maximum

- Product templating rules, e.g., the “basic life” minimum dollar amount must not be less than $1000

- Product component decision choice rules, e.g., a client may have a plan with the “optional life” product only if the client has a plan with a “basic life” product

- Membership rules, e.g., a spouse of a primary plan member must not select an option that a plan member has not selected for “basic life” product

- Pay-out rules, e.g., total amount paid for hospital stay must be calculated as sum of each hospital payment made for claimant within claimant’s entire coverage period

- Product discontinuation rules, e.g., a product that is over 5 years old and that is not a sold product must be discontinued
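
To make a few of these concrete, here is a rough sketch of three of the example rules written as standalone checks. The class and function names (`PayoutMethod`, `payout_method_is_valid` and so on) are hypothetical, as is the choice of plain Python predicates; in a real project these would live in a BRMS rather than in application code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PayoutMethod:
    """Hypothetical shape for a payout method; field names are assumptions."""
    scheduled: bool
    frequency: Optional[str] = None        # e.g. "monthly"
    duration_months: Optional[int] = None


def payout_method_is_valid(method: PayoutMethod) -> bool:
    """Product component rule: a scheduled payout method must have a frequency and a duration."""
    if not method.scheduled:
        return True
    return method.frequency is not None and method.duration_months is not None


def basic_life_minimum_is_valid(minimum_dollars: float) -> bool:
    """Product templating rule: the "basic life" minimum must not be less than $1000."""
    return minimum_dollars >= 1000


def client_products_are_valid(client_products: set) -> bool:
    """Choice rule: "optional life" is allowed only if the client also has "basic life"."""
    return "optional life" not in client_products or "basic life" in client_products


print(payout_method_is_valid(PayoutMethod(scheduled=True, frequency="monthly")))
print(basic_life_minimum_is_valid(1000))
print(client_products_are_valid({"optional life"}))
```

Note that none of these checks knows where in the process it will be called, which is the point of keeping rules separate from process.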

These rules should not be tied to specific points in the process (see my earlier comment on the opening keynote about the independence of rules and process); rather, they represent the policies that govern your business.

Drilling down into how to actually define the rules, she had a number of ways for you to consider splitting up the rules so that they can be fully defined. Keeping with the health insurance example, you would need to define product rules, e.g., coverage, and client rules, e.g., age, geographical location, marital status, and relationship to member. Then, you need to consider how those rules interact and combine to ensure that you cover all possible scenarios, a process that is served well by tools such as decision tables to compare, for example, product by geographic region.
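
As an illustration of the "cover all possible scenarios" point, here is a small sketch of a product-by-region decision table with a completeness check. The region names, the table contents and the `missing_scenarios` helper are all made up for the example; only the idea of crossing product against geographic region comes from the session.

```python
from itertools import product

product_names = ["basic life", "optional life"]
regions = ["east", "west"]  # hypothetical regions

# Hypothetical decision table: (product, region) -> outcome.
decision_table = {
    ("basic life", "east"): "offer",
    ("basic life", "west"): "offer",
    ("optional life", "east"): "offer",
    # ("optional life", "west") has deliberately been left out.
}


def missing_scenarios(table, *dimensions):
    """Return every combination of the dimensions that has no entry in the table."""
    return [combo for combo in product(*dimensions) if combo not in table]


gaps = missing_scenarios(decision_table, product_names, regions)
print(gaps)  # [('optional life', 'west')]
```

The same check extends to more dimensions (age band, marital status and so on) by passing further lists, although the number of combinations grows quickly.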

This is going to lead to a broad set of rules covering the different business scenarios, and the constraints that those rules apply to different parts of your business processes: in the health insurance scenario that includes rules that impact how you sell the product, sign up members, and process claims.

You have to understand the scope before getting started with rule harvesting, or you risk having a rule harvesting project that balloons out of control and defines rules that may never be used. You may trade off going wide (across the value chain) versus going deep (drill down on one component of the value chain), or some combination of both, in order to address the current pain points or support a process automation initiative in one area. There are very low-level atomic rules, such as the maximum age for a dependent child, which also need to be captured: these are the sorts of rules that are often coded into multiple systems because of the mistaken belief that they will never change, which causes a lot of headaches when they do. You also need to look for patterns in rules, to allow for faster definition of the rules that follow a common pattern.
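
Both of the last two points, atomic rules defined once and rules that follow a common pattern, can be sketched in a few lines. The value of `MAX_DEPENDENT_CHILD_AGE` below is purely hypothetical (the talk named the rule but not the number), and the `at_most` and `at_least` helpers are my own illustration of a rule pattern, not something shown in the session.

```python
from typing import Callable

# Hypothetical value, defined in exactly one place so a policy change is a one-line edit.
MAX_DEPENDENT_CHILD_AGE = 21


def at_most(limit: float) -> Callable[[float], bool]:
    """Rule pattern: "<value> must not be greater than <limit>"."""
    def rule(value: float) -> bool:
        return value <= limit
    return rule


def at_least(limit: float) -> Callable[[float], bool]:
    """Rule pattern: "<value> must not be less than <limit>"."""
    def rule(value: float) -> bool:
        return value >= limit
    return rule


# Two rules stamped out from the patterns above.
dependent_child_age_ok = at_most(MAX_DEPENDENT_CHILD_AGE)
basic_life_minimum_ok = at_least(1000)

print(dependent_child_age_ok(19), basic_life_minimum_ok(500))
```

Once a pattern like this is recognized, each new rule of that shape needs only its parameters, which is where the faster definition comes from.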

Proving that she knows a lot more than insurance, Gladys showed us some other examples of value chains and the associated rules in retailing and human resources.

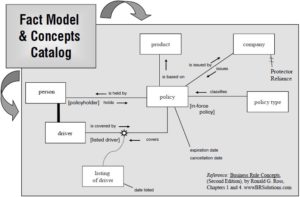

Underlying all of the rule definitions, you also need to have a common fact model that you use as the basis for all rules: this defines the atomic elements and concepts of your business, the relationships between them, and the terminology.

In addition to a sort of entity-relationship diagram, you also need a concepts catalog that defines each term and any synonyms that might be used. This fact model and the associated terms will then provide a dictionary and framework for the rule harvesting/definition efforts.
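
A concepts catalog can start out as something very simple. The sketch below assumes a catalog is a list of terms, each with a definition and optional synonyms, and that lookups should resolve any synonym to the canonical term; the `Concept` and `ConceptsCatalog` names and the sample entries are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Concept:
    term: str
    definition: str
    synonyms: List[str] = field(default_factory=list)


class ConceptsCatalog:
    """Maps every term and synonym (case-insensitively) to its canonical concept."""

    def __init__(self, concepts):
        self._by_name = {}
        for concept in concepts:
            for name in [concept.term, *concept.synonyms]:
                key = name.strip().lower()
                if key in self._by_name:
                    # Two concepts claiming the same word is a modelling problem worth surfacing.
                    raise ValueError(f"ambiguous term: {name!r}")
                self._by_name[key] = concept

    def canonical(self, name: str) -> str:
        """Return the canonical term for a term or synonym; raises KeyError if unknown."""
        return self._by_name[name.strip().lower()].term


# Hypothetical entries for the health insurance example.
catalog = ConceptsCatalog([
    Concept("member", "A person covered under a plan.", ["plan member", "insured"]),
    Concept("dependent", "A person covered through a member.", ["dependant"]),
])

print(catalog.canonical("Plan Member"))  # member
```

Rejecting a synonym that is claimed by two concepts is a design choice: it forces the team to settle terminology before rules are written against it.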

All of this sounds a bit overwhelming and complex on the surface, but her key point is around the types of organization and structure that you need to put in place in your rules harvesting projects in order to achieve success. If you want to be really successful, I’d recommend calling Gladys. 🙂