Last week, I was busy preparing and presenting webinars for two different clients, so I ended up missing Software AG’s ARIS international user groups (IUG) conference and most of Fluxicon’s Process Mining Camp online conference, although I did catch a bit of the Lufthansa presentation. However, Process Mining Camp continues this week, giving me a chance to tune in for the remaining sessions. The format is interesting: there is only one presentation each day, presented live using YouTube Live (no registration required), with some Q&A at the end. The next day starts with Process Mining Café, which is an extended Q&A with the previous day’s presenter based on the conversations in the related Slack workspace (which you do need to register to join), then a break before moving on to that day’s presentation. The presentations are available on YouTube almost as soon as they are finished, but are being shared via Slack using unlisted links, so I’ll let Fluxicon make them public at their own pace (subscribe to their YouTube channel since they will likely end up there).

Anne Rozinat, co-founder of Fluxicon, was moderator for the event, and was able to bring life to the Q&A since she’s an expert in the subject matter and had questions of her own. Each day’s session runs a maximum of two hours starting at 10am Eastern, which makes it a reasonable time for all of Europe and North America (having lived in California, I know the west coasters are used to getting up for 7am events to sync with east coast times). Also, each presenter is a practitioner who uses process mining (specifically, Fluxicon’s Disco product) in real applications, meaning that they have stories to share about their data analysis, and what worked and didn’t work.

Monday started with Q&A with Zsolt Varga of the European Court of Auditors, who presented last Friday. It was a great discussion and made me want to go back and see Varga’s presentation: he had some interesting comments on how they track and resolve missing historical data, as well as one of the more interesting backgrounds. There was then a presentation by Hilda Klasky of the Oak Ridge National Laboratory on process mining for electronic health records with some cool data clustering and abstraction to extract case management state transition patterns from what seemed to be a massive spaghetti mess.

Tuesday, Klasky returned for Q&A, followed by a presentation by Harm Hoebergen and Redmar Draaisma of Freo (an online loans subsidiary of Rabobank) on loan and credit processes across multiple channels. It was great to track Slack during a presentation and see the back-and-forth conversations as well as watch the questions accumulate for the presenter; after each presentation, it was common to see the presenter respond to questions and discussion points that weren’t covered in the live Q&A. For online conferences, this type of “chaotic engagement” (rather than tightly controlled broadcasts from the vendor, or non-functional single-threaded chat streams) replaces the “hallway chats” and is essential for turning a non-engaging set of online presentations into a more immersive conference experience.

The conference closing keynote today was by Wil van der Aalst, who headed the process mining group at Eindhoven University of Technology where Fluxicon’s co-founders did their Ph.D. studies. He’s now at RWTH Aachen University, although he remains affiliated with Eindhoven. I’ve had the pleasure of meeting van der Aalst several times at the academic/research BPM conferences (including last year in Vienna), and always enjoy hearing him present. He spoke about some of the latest research in object-centric process mining, which addresses the issue of handling events that refer to multiple business objects, such as multiple items in a single order that may be split into multiple deliveries. Traditionally in process mining, each event record from a history log that forms the process mining data has a single case ID, plus a timestamp and an activity name. But what happens if an event impacts multiple cases?

He started with an overview of process mining and many of the existing challenges, such as performance issues with conformance checking, and the fact that data collection/cleansing still takes 80% of the effort. However, process mining (and, I believe, task mining as a secondary method of data collection) may have to work with event logs where an event refers to multiple cases. This requires that the data be “flattened”: pick one of the cases as the identifier for the event record, then duplicate the record for each case referred to in the event. The problem arises because events can disappear when cases are merged again, which will cause problems in generating accurate process models. Consider your standard Amazon order, like the one that I’m waiting for right now. I placed a single order containing eight items a couple of days ago, which were supposed to be delivered in a single shipment tomorrow. However, the single order was split into three separate orders the day after I placed it, then two of the orders are being sent in a single shipment that is arriving today, while the third order will be in its own shipment tomorrow. Think about the complexity of tracking by order, or item, or shipment: processes diverge and converge in these many-to-many relationships. Is this one process (my original order), or two (shipments), or three (final orders)?
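
To make the flattening problem concrete, here is a minimal Python sketch loosely modeled on my Amazon order. The event structure, the field names (`orders`, `shipments`) and the `flatten` helper are all my own illustrative assumptions; this is not the format used by Disco or the approach from the keynote, just a toy picture of what happens when you pick a single case notion.

```python
# Hypothetical object-centric events: each event may refer to several objects.
# All identifiers and field names below are made up for illustration.
events = [
    {"activity": "place order", "timestamp": 1, "orders": ["o1"], "shipments": []},
    {"activity": "split order", "timestamp": 2, "orders": ["o1a", "o1b", "o1c"], "shipments": []},
    {"activity": "ship", "timestamp": 3, "orders": ["o1a", "o1b"], "shipments": ["s1"]},
    {"activity": "ship", "timestamp": 4, "orders": ["o1c"], "shipments": ["s2"]},
]


def flatten(events, case_notion):
    """Flatten to a classic log: one (case_id, timestamp, activity) row
    for every object of the chosen type that an event refers to."""
    rows = []
    for event in events:
        for case_id in event[case_notion]:
            rows.append((case_id, event["timestamp"], event["activity"]))
    return rows


by_order = flatten(events, "orders")
by_shipment = flatten(events, "shipments")

# Count how often "ship" appears in each flattened view.
ship_rows_by_order = [row for row in by_order if row[2] == "ship"]
ship_rows_by_shipment = [row for row in by_shipment if row[2] == "ship"]

print(len(events), len(by_order), len(by_shipment))
print(len(ship_rows_by_order), len(ship_rows_by_shipment))
```

In this toy data, flattening by order turns four events into seven rows, and the two "ship" events show up three times because one shipment carries two orders. Flattening by shipment keeps only the two "ship" rows, so the "place order" and "split order" events vanish entirely. Neither view tells the whole story, which is the gap that object-centric process mining is trying to close.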

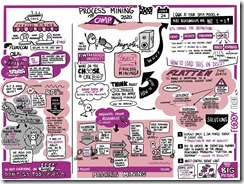

The really great part was engaging in the Slack discussion while the keynote was going on. A few people were asking questions (including me), and Mieke Jans posted a link to a post that she wrote on a procedure for cleansing event logs for multi-case processes – not the same as what van der Aalst was talking about, but a related topic. Anne Rozinat posted a link to more reading on these types of many-to-many situations in the context of their process mining product from their “Process Mining in Practice” online book. Not surprisingly, there was almost no discussion on the Twitter hashtag, since the attendees had a proper discussion platform; contrast this with some of the other conferences where attendees had to resort to Twitter to have a conversation about the content. After the keynote, van der Aalst even joined in the discussion and answered a few questions, plus added the link for the IEEE task force on process mining that promotes research, development, education and understanding of process mining: definitely of interest if you want to get plugged into more of the research in the field. As a special treat, Ferry Timp created visual notes for each day and posted them to the related Slack channel – you can see the one from today at the left.

Great keynote and discussion afterwards. I recommend tracking Fluxicon’s blog and/or YouTube channel to watch it, and all of the other presentations, when published.