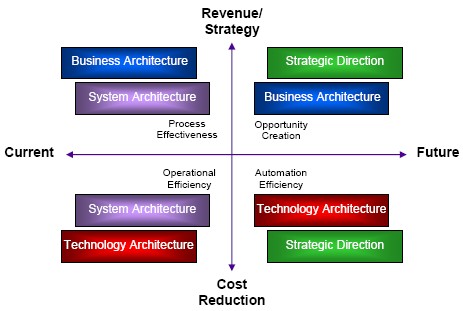

I’ve just finished viewing a Proforma webinar about building, using and managing an enterprise architecture, featuring David Ritter, Proforma’s VP of Enterprise Solutions. He came out of the EA group at United Airlines, so he really knows how this stuff works, which is a nice change from the usual vendor webinars where they need to bring in an outside expert to lend some real-world credibility to their message. He spent a full 20 minutes up front giving an excellent background on EA before moving on to their ProVision product, then walked through a number of the different models used for modelling strategic direction, business architecture, system (application and data) architecture and technology architecture. More importantly, he showed how the EA artifacts (objects or models) are linked together and how they interact: how a workflow model links to a data model and a network model, for example. He also went through an EA benefits model based on some work by Mary Knox at Gartner, showing where the different types of architecture fit on the benefits map:

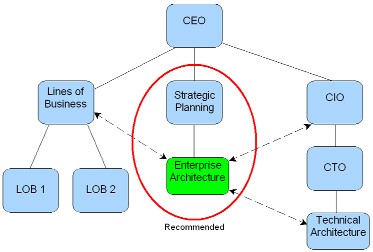

After the initial 30 minutes of “what is EA” and “what is ProVision”, he dug into a more interesting topic: how to use and manage EA within your organization. I loved one diagram that he showed about where EA governance belongs:

This reinforces what I’ve been telling people about how EA isn’t the same as IT architecture, and it can’t be “owned” by IT. He also showed the results of a survey by the Institute for Enterprise Architecture Developments, which indicates that the two biggest reasons why organizations are implementing EA are business-IT alignment (21%) and business change (17%): business reasons, not IT, are driving EA today. Even Gartner Group, following their ingestion of META Group and its robust EA practice earlier this year, has a Golden Rule of the New Enterprise Architecture that reflects this, “always make technology decisions based on business principles”, and goes on to state that by 2010, companies that have not aligned their technology with their business strategy will no longer be competitive.

Some of this information is available on the Proforma website as white papers (such as the benefits map), and some is from analyst reports. With any luck, the webinar will be available for replay soon.