Gartner’s Michele Cantara and Janelle Hill hosted a webinar this morning that will be repeated at 11am ET (I attended the 8am ET session). I’m not sure whether the later session will just be a recording of this morning’s, or whether they’ll present it all over again.

Cantara started by talking about the sorry state of the economy, complete with a picture of an ax-wielding executioner, and about the many companies laying off staff in an attempt to balance their budgets. Their premise is that BPM can turn the ax-man into a surgeon: you’ll still have cuts, but they’ll be more precise and less likely to damage the core of your organization. Pretty grim start, regardless.

They showed some quotes from customers, such as “the current economic climate is BPM nirvana” and “BPM is not a luxury”, to illustrate that companies are recognizing that BPM can help them operate more efficiently to survive the downturn, and even grow and transform by outperforming their competition. In other words, if a bear (market) is chasing you, you don’t have to outrun the bear; you only have to outrun the person running beside you.

Hill then took over to discuss case studies of companies using BPM to avoid costs and improve the bottom line in order to survive the downturn. Most of these are typical of the business cases used to justify a BPMS in conservative organizations, focusing on visibility and control over processes, but one stood out for me: a financial services company used process modelling to test the business cases for proposed projects, and 33% of those projects went unfunded because their business cases couldn’t be proven. In effect, this gave the company a more data-driven way to set funding priorities than the usual emotional and political decision-making, and it was achieved through process modelling alone rather than through automation with a BPMS.

There can be challenges to implementing BPM (as we all know so well), so Hill recommended a few things to help your BPM efforts succeed: internal communication and education to address cultural and political issues; establishing a center of excellence; and delivering some quick wins to give BPM some street cred within your organization.

Cantara came back to discuss growth opportunities rather than just survival: organizations that are in reasonably good shape in spite of the economy can use BPM to grow and gain relative market share if their competitors can’t do the same. One example was a hospital that increased surgical capacity by 20% simply by manually modelling its processes and fixing the gaps and redundancies. As with the earlier case of using modelling to set funding priorities, this project wasn’t about deploying a BPMS and automating processes; it was about understanding the current processes well enough to optimize them.

In some cases, cost savings and growth opportunities are two sides of the same coin. One example was a pharmaceutical company that used a BPMS to optimize its clinical trials and grant payments processes: this lowered costs per process by reducing the resources required for each, and the resulting increase in capacity allowed the company to handle 2.5 times as many projects as before. A weaker company might have used the savings only to cut headcount and resource usage, but a company in a stable financial position can use them to grow revenue without increasing headcount.

In fact, rather than two sides of a coin, cost savings and growth opportunities could be considered two points on a spectrum of benefits. Push further along that spectrum, as Hill explained when she returned, and you start to approach business transformation, where companies gain market share by offering completely new processes that were identified or enabled by BPM. One example was a rail transport company that used RFID-driven BPM to avoid derailments by detecting overheating problems on its rail cars early.

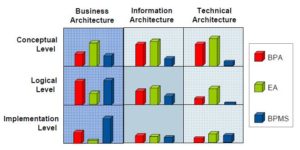

Hill finished up by reinforcing that BPM is a management discipline, not just a technology, as shown by several of their case studies that had nothing to do with automating processes with a BPMS and were really about process modelling and optimization. The key is to tie BPM to corporate performance and continuous improvement rather than treating it as a one-off project. A center of excellence (or competency center, as Gartner calls it) is a necessity, as are explicit process models and metrics that can be shared between business and IT.

If you miss the later broadcast today, Gartner makes its webinars available for replay. It’s worth the time to watch.