Feri Clayton gave an update on the ECM product portfolio and roadmap, in a bit more depth than yesterday’s Bisconti/Murphy ECM product strategy session. She reinforced the message that the products are made up of suites of capabilities and components, so that you’re not using different software silos. I’m not sure I completely buy into IBM’s implementation of this message as long as there are still quite different design environments for many of these tools, although they are making strides in consolidating the end user experience.

She showed the roadmap for what has been released in 2011, plus the remainder of this year and 2012: on the BPM side, there will be a 5.1 release of both BPM and Case Manager in Q4, which I’ll be hearing more about in separate BPM and Case Manager product sessions this afternoon. The new Nexus UI will be previewed in Q4 and released in Q2 of 2012. Another Case Manager release is projected for Q4 2012.

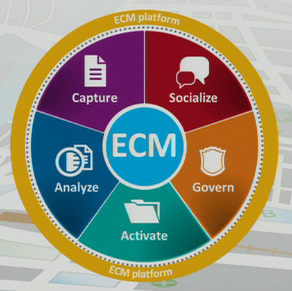

There was a question about why BPM didn’t appear in the ECM portfolio diagram, and Clayton stated that “BPM is now considered part of Case Manager”. The BPM vendors think of ACM as a part of BPM, but I think that she’s right: BPM (that is, structured process management of the kind you would do with IBM FileNet BPM) is a functionality within ACM, not the other way around.

She went through the individual products in the portfolio, and some of the updates:

- Production Imaging and Capture now includes remote capture, which is nice for organizations that don’t want to centralize their scanning/capture. It’s not clear how much of this is the Datacap platform versus the heritage FileNet Capture, but I imagine that the Datacap technology is going to drive the capture direction from here on. They’ve integrated the IBM Classification Module for automatic recognition and classification of documents.

- Content Manager OnDemand (CMOD) for report storage and presentment will see a number of enhancements including CMIS integration.

- Social Content Management uses an integration of IBM Connections with ECM to allow an ECM library to access and manage content from within Connections, display ECM content within a Connections Community and a few other cross-product integrations. There are a couple of product announcements about this, but they seem to be in the area of integration between Connections and ECM as opposed to adding any native social content management to ECM.

- FileNet P8, the core content management product, had a recent release (August) with such enhancements as bidirectional replication between P8 and Image Services, content encryption, and a new IBM-created search engine (replacing Verity).

- IBM Content Manager (a.k.a., the product that used to compete with P8) has a laundry list of enhancements, although it still lags far behind P8 in most areas.

We had another short demo of Nexus, pretty much the same as I saw yesterday: the three-pane UI dominated by an activity stream with content-related events, plus panes for favorites and repositories. They highlighted the customizability of Nexus, including lookups and rules applied to metadata field entry during document import, plus some nice enhancements to the content viewer. The new UI also includes a work inbasket for case management tasks; not sure if this also includes other types of tasks such as BPM or even legacy Content Manager content lifecycle tasks (if those are still supported).

Nexus will replace all of the current end-user clients for both content and image servers, providing a rich and flexible user experience that is highly customizable and extensible. They will also be adding more social features to this; it will be interesting to see how this develops as they expand from a simple activity stream to more social capabilities.

Clayton then moved on to talk about ACM and the Case Manager product, which is now coming up to its second release (called v5.1, naturally). Given that much of the audience probably hasn’t seen it before, she went through some of the use cases for Case Manager across a variety of industries. Even more than the base content management, Case Manager is a combination of a broad portfolio of IBM products within a common framework. She listed some of the new features, but I expect to see these in more detail in this afternoon’s dedicated Case Manager session, so I’ll wait to cover them then.

She discussed FileNet P8 BPM version 5.x: it is now Java-based, with significant performance and capacity improvements (also due, from what I have heard, to a great deal of refactoring to remove old code sludge). As I wrote about last month, it provides Linux and zLinux support, and also allows for multi-tenancy.

With only a few minutes to go, she whipped through information lifecycle governance (records and retention management), including integration of the PSS Atlas product; IBM Content Collector; and search and content analytics. Given the huge focus on analytics in the morning keynote, it’s kind of funny that it gets about 30 seconds at the end of this session.